By the calendar, ChatGPT was released just a few months ago. But subjectively, it feels as though 600 years have passed since we all read “as a large language model…” for the first time.

The pace of new innovations is staggering, but we at Quiq like to help our audience in the customer experience and contact center industries stay ahead of the curve (even when that requires faster-than-light travel).

Today, we will look at what’s new in generative AI, and what will be coming down the line in the months ahead.

Where will Generative AI be applied?

First, let’s start with industries that will be strongly impacted by generative AI. As we noted in an earlier article, training a large language model (LLM) like ChatGPT mostly boils down to showing it tons of examples of text until it learns a statistical representation of human language well enough to generate sonnets, email copy, and many other linguistic artifacts.
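To make "learning a statistical representation" a little more concrete, here is a deliberately tiny sketch. It is a bigram word counter rather than a neural network, so it is not how ChatGPT is built; the three-sentence corpus and the function names are our own illustrative assumptions. The loop is the same in spirit, though: see examples, record what tends to follow what, then generate.

```python
import random
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts

def generate(model, start_word, max_words=8, seed=0):
    """Build a short phrase by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)
    words = [start_word]
    for _ in range(max_words - 1):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        choices, weights = zip(*candidates.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)

# Toy training data (an assumption for illustration only).
corpus = [
    "the customer asked a question",
    "the agent answered the question",
    "the customer thanked the agent",
]
model = train_bigram_model(corpus)
sample = generate(model, "the")
```

A real LLM replaces the lookup table with billions of learned parameters and looks much further back than one word, but the train-then-generate shape is recognizable.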

There’s no reason the same basic process (have a model learn from many examples and then create its own) couldn’t be used elsewhere, and in the next few sections, we’re going to look at how generative AI is being used in a variety of industries to brainstorm structures, new materials, and a billion other things.

Generative AI in Building and Product Design

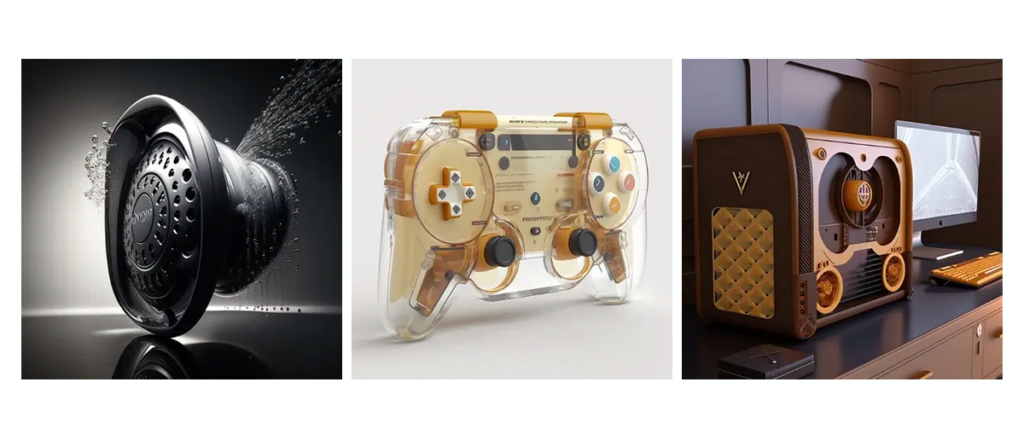

If you’ve had a chance to play around with DALL-E, Midjourney, or Stable Diffusion, you know that the results can be simply remarkable.

It’s not a far leap to imagine that these tools might be useful for quickly generating ideas for buildings and products.

The emerging field of AI-generated product design is doing exactly this. With generative image models, designers can use text prompts to rough out ideas and see them brought to life. This allows for faster iteration and quicker turnaround, especially given that creating a proof of concept is one of the slower, more tedious parts of product design.

For the same reason, these tools are finding use among architects, who are able to quickly move between different periods and styles, see how better lighting impacts a room’s aesthetic, and plan around themes like building with eco-friendly materials.

There are two things worth pointing out about this process. First, there’s often a learning curve because it can take a while to figure out prompt engineering well enough to get a compelling image. Second, there’s a hearty dose of serendipity. Often the resulting image will not be quite what the designer had in mind, but it’ll be different in new and productive ways, pushing the artist along fresh trajectories that might never have occurred to them otherwise.

Generative AI in Discovering New Materials

To quote one of America’s most renowned philosophers (Madonna), we’re living in a material world. Humans have been augmenting their surroundings since we first started chipping flint axes back in the Stone Age; today, the field of materials science continues the long tradition of finding new stuff that expands our capabilities and makes our lives better.

This can take the form of something (relatively) simple like researching a better steel alloy, or something incredibly novel like designing a programmable nanomaterial.

There’s just one issue: it’s really, really difficult to do this. It takes a great deal of time, energy, and effort to even identify plausible new materials, to say nothing of the extensive testing and experimenting that must then follow.

Materials scientists have been using machine learning (ML) in their process for some time, but the recent boom in generative AI is driving renewed interest. There are now a number of projects aimed at e.g. using variational autoencoders, recurrent neural networks, and generative adversarial networks to learn a mapping between information about a material’s underlying structure and its final properties, then using this information to create plausible new materials.

It would be hard to overstate how important the use of generative AI in materials science could be. If you imagine the space of possible molecules as being like its own universe, we’ve explored basically none of it. What new fabrics, medicines, fuels, fertilizers, conductors, insulators, and chemicals are waiting out there? With generative AI, we’ve got a better chance than ever of finding out.

Generative AI in Gaming

Gaming is often an obvious place to use new technology, and that’s true for generative AI as well. The principles of generative design we discussed two sections ago could be used in this context to flesh out worlds, costumes, weapons, and more, but generative AI can also be used to make character interactions more dynamic.

From Navi trying to get our attention in Ocarina of Time to GLaDOS’s continual reminders that “the cake is a lie” in Portal, non-playable characters (NPCs) have always added texture and context to our favorite games.

Powered by LLMs, these characters may soon be able to have open-ended conversations with players, adding more immersive realism to the gameplay. Rather than pulling from a limited set of responses, they’d be able to query LLMs to provide advice, answer questions, and shoot the breeze.

What’s Next in Generative AI?

As impressive as technologies like ChatGPT are, people are already looking for ways to extend their capabilities. Now that we’ve covered some of the major applications of generative AI, let’s look at some of the exciting applications people are building on top of it.

What is Auto-GPT and How Does it Work?

ChatGPT can already do things like generate API calls and build simple apps, but as long as a human has to actually copy and paste the code somewhere useful, its capacities are limited.

But what if that weren’t an issue? What if it were possible to spin ChatGPT up into something more like an agent, capable of semi-autonomously interacting with software or online services to complete strings of tasks?

This is exactly what Auto-GPT is intended to accomplish. Auto-GPT is an application built by developer Toran Bruce Richards, and it consists of two parts: an LLM (either GPT-3.5 or GPT-4), and a separate “bot” that works with the LLM.

By repeatedly querying the LLM, the bot is able to take a relatively high-level task like “help me set up an online business with a blog and a website” or “find me all the latest research on quantum computing”, decompose it into discrete, achievable steps, then iteratively execute them until the overall objective is achieved.
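The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Auto-GPT’s actual code: `build_prompt`, `run_agent`, and `make_scripted_llm` are hypothetical names, the "DONE" convention is ours, and the LLM is replaced by a scripted stand-in so the example runs without any API access.

```python
from typing import Callable, List, Tuple

def build_prompt(objective: str, history: List[Tuple[str, str]]) -> str:
    """Describe the goal and the work so far, then ask for the next step."""
    done = "\n".join(f"- {action} -> {result}" for action, result in history)
    return (
        f"Objective: {objective}\n"
        f"Completed steps:\n{done or '- none yet'}\n"
        "Reply with the next step, or DONE if the objective is met."
    )

def run_agent(
    objective: str,
    llm: Callable[[str], str],
    execute: Callable[[str], str],
    max_steps: int = 10,
) -> List[Tuple[str, str]]:
    """Repeatedly ask the LLM for the next step and execute it."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):  # hard cap so the agent cannot run forever
        action = llm(build_prompt(objective, history)).strip()
        if action == "DONE":
            break
        history.append((action, execute(action)))
    return history

def make_scripted_llm(responses: List[str]) -> Callable[[str], str]:
    """Stand-in for a real model: replays canned steps, then says DONE."""
    remaining = iter(responses)
    return lambda prompt: next(remaining, "DONE")

llm = make_scripted_llm(
    ["pick a blog platform", "register a domain", "publish a first post"]
)
history = run_agent("set up a blog", llm, execute=lambda action: f"ok: {action}")
```

In a real agent, `llm` would call a hosted model and `execute` would run code, browse the web, or call other services. The `max_steps` cap is one simple way to keep an agent from running indefinitely.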

At present, Auto-GPT remains fairly primitive. Just as ChatGPT can get stuck in repetitive and unhelpful loops, so too can Auto-GPT. Still, it’s a remarkable advance, and it’s spawned a series of other projects attempting to do the same thing in a more consistent way.

The creators of AssistGPT bill it as a “General Multi-modal Assistant that can Plan, Execute, Inspect, and Learn”. It handles multi-modal tasks (i.e. tasks that rely on vision or sound and not just text) better than Auto-GPT, and by integrating with a suite of tools it is able to achieve objectives that involve many intermediate steps and sub-tasks.

SuperAGI, in turn, is just as ambitious. It’s a platform that offers a way to quickly create, deploy, manage, and update autonomous agents. You can integrate them into applications like Slack or vector databases, and it’ll even ping you if an agent gets stuck somewhere and starts looping unproductively.

Finally, there’s LangChain, which is a similar idea. LangChain is a framework that is geared towards making it easier to build on top of LLMs. It features a set of primitives that can be stitched into more robust functionality (not unlike “for” and “while” loops in programming languages), and it’s even possible to build your own version of Auto-GPT using LangChain.
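To give a feel for what "stitching primitives together" means, here is a plain-Python sketch. It deliberately does not use LangChain’s own API; `chain`, `fill_template`, `fake_llm`, and `clean_output` are hypothetical names we made up to show the general idea of composing small steps into a pipeline.

```python
from functools import reduce
from typing import Callable

def chain(*steps: Callable) -> Callable:
    """Compose steps left to right: each output feeds the next step."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)

def fill_template(topic: str) -> str:
    """Primitive 1: turn user input into a prompt."""
    return f"Write a one-line tagline about {topic}."

def fake_llm(prompt: str) -> str:
    """Primitive 2: stand-in for a model call; wraps the prompt in padding."""
    return f"  RESPONSE TO: {prompt}  "

def clean_output(text: str) -> str:
    """Primitive 3: tidy up the raw model output."""
    return text.strip()

tagline_chain = chain(fill_template, fake_llm, clean_output)
result = tagline_chain("contact centers")
```

Swapping `fake_llm` for a real model call, or adding a retrieval step in front of it, would not change the shape of the pipeline, which is much of the appeal of this style of framework.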

What is Chain-of-Thought Prompting and How Does it Work?

In the misty, forgotten past (i.e. 5 months ago), LLMs were famously bad at simple arithmetic. They might be able to construct elegant mathematical proofs, but if you asked them what 7 + 4 is, there was a decent chance they’d get it wrong.

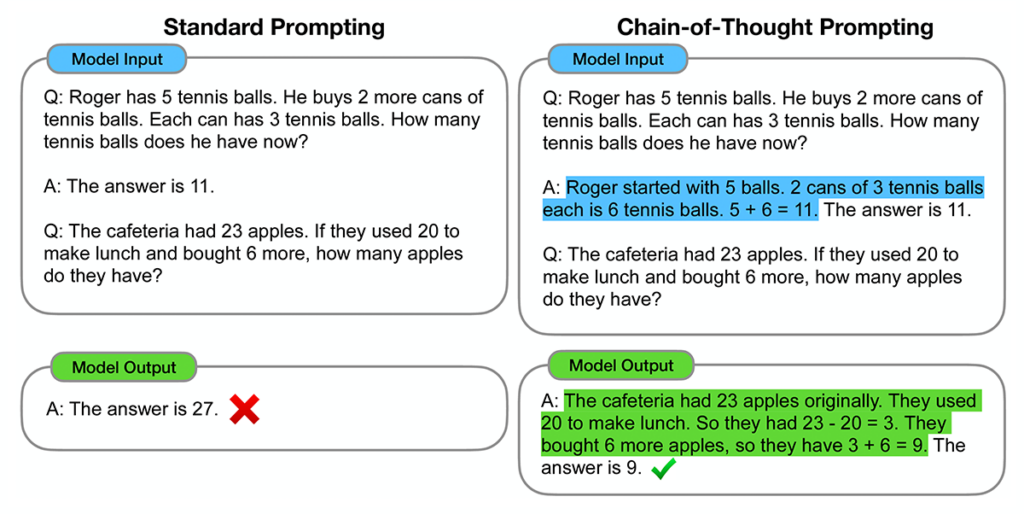

Chain-of-thought (CoT) prompting is a few-shot prompting method that gets an LLM to reason step by step before giving its answer, and it was developed in part to help with this issue. This image from the original Wei et al. (2022) paper illustrates how:

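As you can see, the model’s performance is improved because the example answer in the prompt spells out its intermediate reasoning, and the model follows suit by working through its own chain of thoughts before answering, hence chain-of-thought.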
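In practice, a chain-of-thought prompt is just carefully arranged text. The sketch below builds one; the worked example is modeled on the tennis-ball problem from Wei et al. (2022), while the function name and the exact "Q:"/"A:" layout are our own assumptions.

```python
# A worked example whose answer shows its reasoning, not just the result.
EXEMPLAR_QUESTION = (
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?"
)
EXEMPLAR_ANSWER = (
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
    "6 tennis balls. 5 + 6 = 11. The answer is 11."
)

def build_cot_prompt(question: str) -> str:
    """Prepend the worked example so the model imitates its step-by-step style."""
    return (
        f"Q: {EXEMPLAR_QUESTION}\n"
        f"A: {EXEMPLAR_ANSWER}\n\n"
        f"Q: {question}\n"
        "A:"
    )

prompt = build_cot_prompt(
    "The cafeteria had 23 apples. If they used 20 to make lunch "
    "and bought 6 more, how many apples do they have?"
)
```

A standard few-shot prompt would look identical except that the example answer would read only "The answer is 11." The intermediate steps are the whole trick.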

This technique isn’t just useful for arithmetic; it can be used to get better output from a model in a variety of different tasks, including commonsense and symbolic reasoning.

In a way, humans can be prompt engineered in the same fashion. You can often get better answers out of yourself or others through a deliberate attempt to reason slowly, step-by-step, so it’s not a terrible shock that a large model trained on human text would benefit from the same procedure.

The Ecosystem Around Generative AI

Though cutting-edge models are usually the stars of the show, the truth is advanced technologies aren’t worth much if you have to be deeply into the weeds to use them. Machine learning, for example, would surely be much less prevalent if tools like scikit-learn, TensorFlow, and Keras didn’t exist.

Though we’re still in the early days of LLMs, Auto-GPT, and everything else we’ve discussed, we suspect the same basic dynamic will play out. Since it’s now clear that these models aren’t toys, people will begin building infrastructure around them that streamlines the process of training them for specific use cases, integrating them into existing applications, etc.

Let’s discuss a few efforts in this direction that are already underway.

Training and Education

Among the simplest parts of the emerging generative AI value chain is exactly what we’re doing now: talking about it in an informed way. Non-specialists will often lack the time, context, and patience required to sort the real breakthroughs from the hype, so putting together blog posts, tutorials, and reports that make this easier is a real service.

Making Foundation Models Available

“Foundation models” is a new term that refers to the large, general-purpose pre-trained models that underlie applications like chatbots. ChatGPT, for example, is not a foundation model. GPT-4 is the foundation model, and ChatGPT is a specialized application of it (more on this shortly).

Companies like Anthropic, Google, and OpenAI can train these gargantuan models and then make them available through an API. From there, developers can call their preferred foundation model from their own applications.

This means that we can move quickly to utilize their remarkable functionality, which wouldn’t be the case if every company had to train their own from scratch.
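For a sense of what "access over an API" looks like from the developer’s side, here is a rough sketch using only Python’s standard library. The endpoint URL and the `prompt`/`max_tokens` field names are hypothetical placeholders, not any particular provider’s API; each vendor documents its own URL, authentication scheme, and request format.

```python
import json
import urllib.request

# Hypothetical endpoint, used purely for illustration.
API_URL = "https://api.example.com/v1/generate"

def build_request(
    prompt: str, api_key: str, max_tokens: int = 100
) -> urllib.request.Request:
    """Package a prompt as an authenticated JSON POST request."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}  # assumed field names
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

request = build_request(
    "Summarize this support ticket in one sentence.", api_key="YOUR_API_KEY"
)
# Sending it would be a single call: urllib.request.urlopen(request)
```

The point is how little is involved: no GPUs, no training data, just an HTTP request and a key.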

Building Applications Around Specific Use Cases

One of the most striking properties of models like ChatGPT is how amazingly general they are. They are capable of “…generating functioning web apps with just a few prompts, writing Spanish-language children’s stories about the blockchain in the style of Dr. Seuss, [and] opining on the virtues and vices of major political figures”, to name but a few examples.

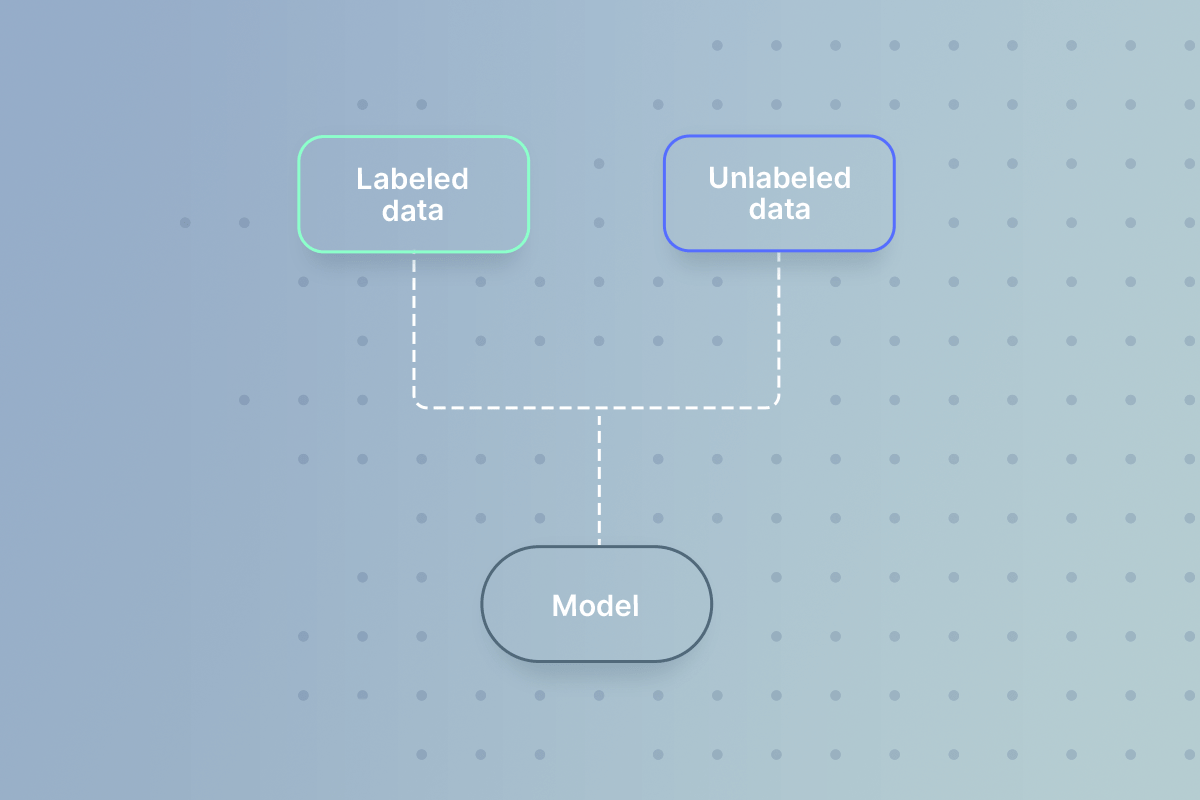

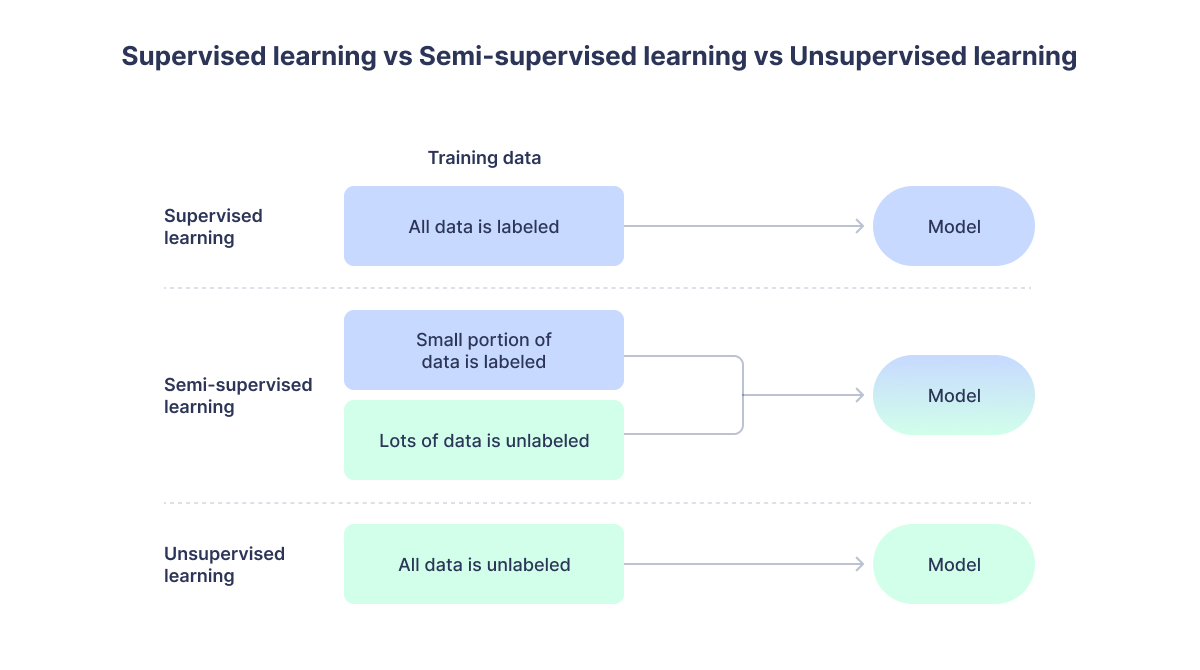

General-purpose models often have to be fine-tuned to perform better on a specific task, especially if they’re doing something tricky like summarizing medical documents with lots of obscure vocabulary. Alas, there is a tradeoff here, because in most cases these fine-tuned models will afterward not be as useful for generic tasks.

There’s a further hurdle, too: you need a fair bit of technical skill to set up a fine-tuning pipeline, and you need a fair bit of elbow grease to assemble the few hundred examples a model needs in order to be fine-tuned. Though this is much simpler than training a model in the first place, it is still far from trivial, and we expect that there will soon be services aimed at making it much more straightforward.
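Much of that elbow grease is plain data wrangling. As a rough sketch, the snippet below cleans a handful of example pairs and serializes them as JSON Lines, one example per line. The `prompt`/`completion` field names and the sample records are assumptions for illustration; the exact format depends on the fine-tuning service you use.

```python
import json
from typing import Dict, List

def to_jsonl(examples: List[Dict[str, str]]) -> str:
    """Serialize usable examples as JSON Lines, skipping incomplete ones."""
    lines = []
    for example in examples:
        prompt = example.get("prompt", "").strip()
        completion = example.get("completion", "").strip()
        if not prompt or not completion:
            continue  # an example with a missing half can't teach the model
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)

# Made-up records standing in for the few hundred you would really need.
raw_examples = [
    {"prompt": "Summarize: Pt c/o SOB x3 days. ", "completion": "Patient reports three days of shortness of breath."},
    {"prompt": "Summarize: Hx of HTN, on lisinopril.", "completion": "History of high blood pressure, treated with lisinopril."},
    {"prompt": "Summarize: NKDA.", "completion": ""},
]
training_file_contents = to_jsonl(raw_examples)
```

The third record is dropped because it has no completion, which is exactly the kind of quiet cleanup that makes assembling a good training set slower than it sounds.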

LLMOps and Model Hubs

We’d venture to guess you’ve heard of machine learning, but you might not be familiar with the term “MLOps”. “Ops” means “operations”, and it refers to all the things you have to do to use a machine learning model besides just training it. Once a model has been trained it has to be monitored, for example, because sometimes its performance will begin to inexplicably degrade.

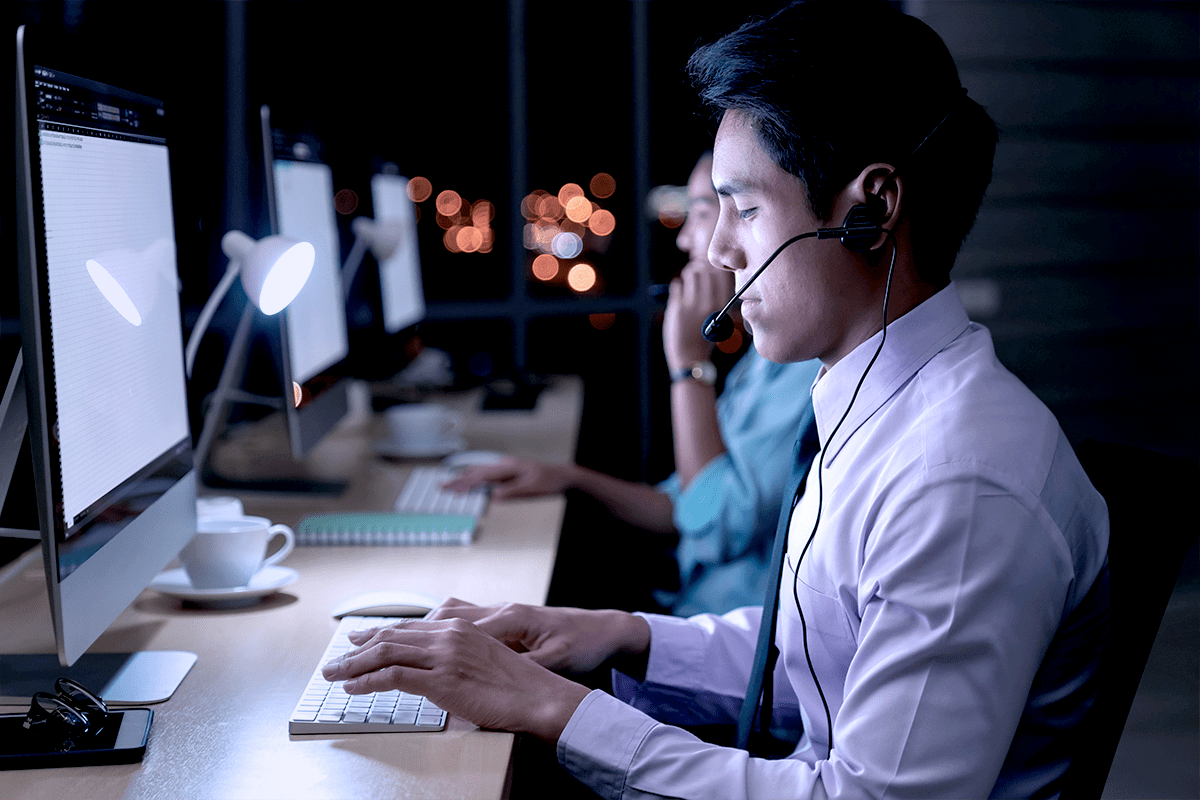

The same will be true of LLMs. You’ll need to make sure that the chatbot you’ve deployed hasn’t begun abusing customers and damaging your brand, or that the deep learning tool you’re using to explore new materials hasn’t begun to spit out gibberish.
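One simple form this monitoring can take is a rolling quality check, sketched below. The `QualityMonitor` class, the window size, and the threshold are hypothetical choices of ours, and the sketch assumes you already have some way of marking each response as acceptable or not (a content filter, a spot check by a human, a customer rating).

```python
from collections import deque

class QualityMonitor:
    """Track recent pass/fail checks and flag a sustained drop in quality."""

    def __init__(self, window_size: int = 50, min_pass_rate: float = 0.9):
        self.results = deque(maxlen=window_size)  # keeps only the newest checks
        self.min_pass_rate = min_pass_rate

    def record(self, passed: bool) -> None:
        self.results.append(passed)

    def pass_rate(self) -> float:
        if not self.results:
            return 1.0  # no evidence of problems yet
        return sum(self.results) / len(self.results)

    def is_degraded(self) -> bool:
        # Wait for a full window so one early failure doesn't trigger an alert.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.pass_rate() < self.min_pass_rate

monitor = QualityMonitor(window_size=10, min_pass_rate=0.8)
for _ in range(10):
    monitor.record(True)       # a healthy stretch of responses
healthy = monitor.is_degraded()
for _ in range(5):
    monitor.record(False)      # quality starts slipping
degraded = monitor.is_degraded()
```

Production LLMOps tooling adds dashboards, alerting, and logging on top, but the core question stays the same: is the model still doing what it did when we shipped it?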

Another phenomenon from machine learning we think will be echoed in LLMs is the existence of “model hubs”, which are places where you can find pre-trained or fine-tuned models to use. There certainly are carefully guarded secrets among technologists, but on the whole, we’re a community that believes in sharing. The same ethos that powers the open-source movement will be found among the teams building LLMs, and indeed there are already open-sourced alternatives to ChatGPT that are highly performant.

Looking Ahead

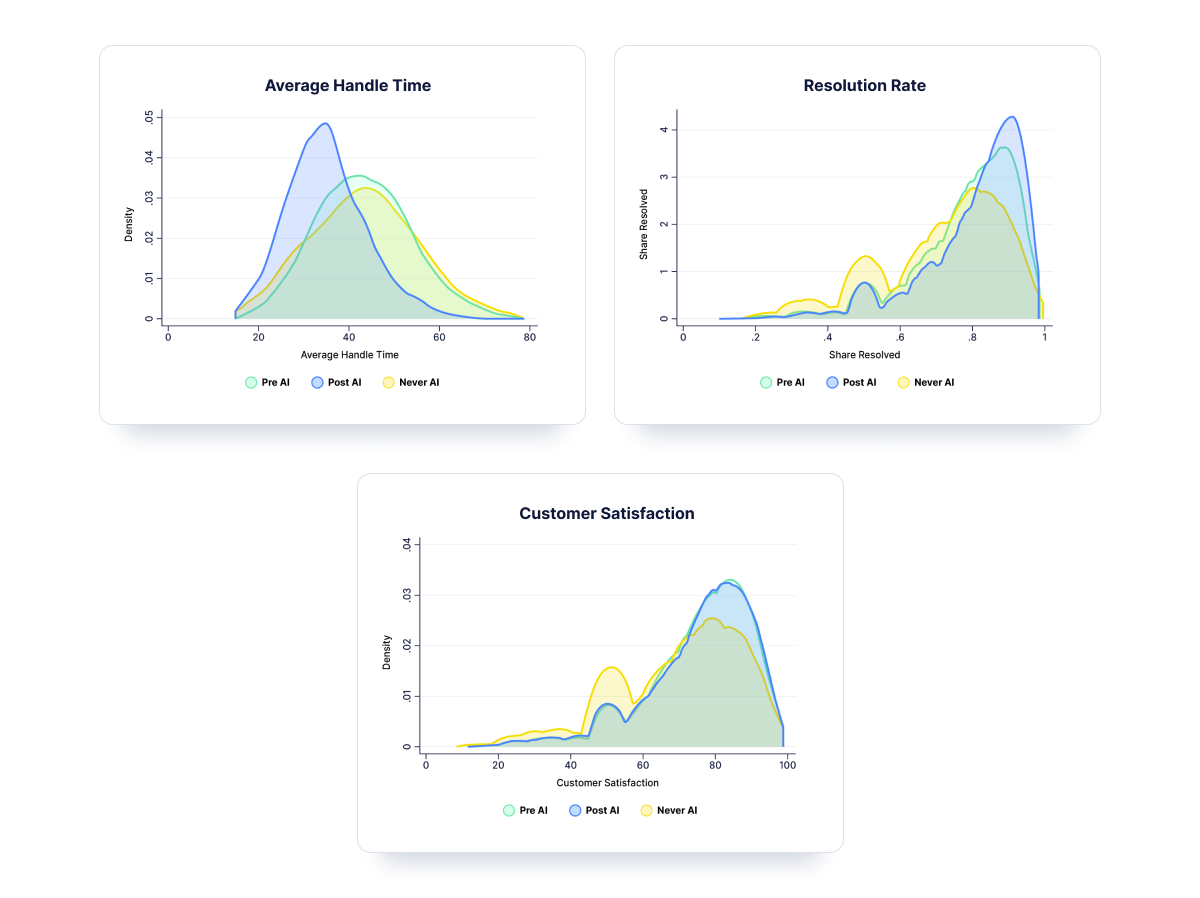

As they’re so fond of saying on Twitter, “ChatGPT is just the tip of the iceberg.” It’s already begun transforming contact centers, boosting productivity among lower-skilled workers while reducing employee turnover, but research into even better tools is screaming ahead.

Frankly, it can be enough to make your head spin. If LLMs and generative AI are things you want to incorporate into your own product offering, you can skip the heady technical stuff and go straight to letting Quiq do it for you. The Quiq conversational AI platform is a best-in-class product suite that makes it much easier to utilize these technologies. Schedule a demo to see how we can help you get in on the AI revolution.