Generative AI has captured the popular imagination and is already changing the way contact centers work.

One area in which it has enormous potential is also one that tends to be top of mind for contact center managers: customer experience.

In this piece, we’re going to briefly outline what generative AI is, then spend the rest of our time talking about how generative AI can improve customer experience with personalized responses, endless real-time support, and much more.

What is Generative AI?

As you may have puzzled out from the name, “generative AI” refers to a constellation of different deep learning models used to dynamically generate output. This distinguishes them from other classes of models, which might be used to predict returns on Bitcoin, make product recommendations, or translate between languages.

The most famous example of generative AI is, of course, the large language model ChatGPT. After being trained on staggering amounts of textual data, it’s now able to generate extremely compelling output, much of which is hard to distinguish from actual human-generated writing.

Its success has inspired a panoply of competitor models from leading players in the space, including companies like Anthropic, Meta, and Google.

As it turns out, the basic approach underlying generative AI can be utilized in many other domains as well. After natural language, probably the second most popular way to use generative AI is to make images. DALL-E, MidJourney, and Stable Diffusion have proven remarkably adept at producing realistic images from simple prompts, and just this past week, Fable Studios unveiled their “Showrunner” AI, which is able to generate an entire episode of South Park.

But even this is barely scratching the surface, as researchers are also training generative models to create music, design new proteins and materials, and even carry out complex chains of tasks.

What is Customer Experience?

In the broadest possible terms, “customer experience” refers to the subjective impressions that your potential and current customers have as they interact with your company.

These impressions can be impacted by almost anything, including the colors and font of your website, how easy it is to find things like contact information, and how polite your contact center agents are in resolving a customer issue.

Customer experience will also be impacted by which segment a given customer falls into. Power users of your product might appreciate a bevy of new features, whereas casual users might find them disorienting.

Contact center managers must bear all of this in mind as they consider how best to leverage generative AI. In the quest to adopt a shiny new technology everyone is excited about, it can be easy to lose track of what matters most: how your actual customers feel about you.

Be sure to track metrics related to customer experience and customer satisfaction as you begin deploying large language models into your contact centers.

How is Generative AI For Customer Experience Being Used?

There are many ways in which generative AI is impacting customer experience in places like contact centers, which we’ll detail in the sections below.

Personalized Customer Interactions

Machine learning has a long track record of personalizing content. Netflix, to take a famous example, will uncover patterns in the shows you like to watch, and will use algorithms to suggest content that checks similar boxes.

Generative AI, and tools like the Quiq conversational AI platform that utilize it, are taking this approach to a whole new level.

Once upon a time, it was only a human being that could read a customer’s profile and carefully incorporate the relevant information into a reply. Today, a properly fine-tuned generative language model can do this almost instantaneously, and at scale.

From the perspective of a contact center manager who is concerned with customer experience, this is an enormous development. Besides the fact that prior generations of language models simply weren’t flexible enough to have personalized customer interactions, their language also tended to have an “artificial” feel. While today’s models can’t yet replace the all-elusive human touch, they can do a lot to make your agents far more effective in adapting their conversations to the appropriate context.
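To make the idea of folding a customer’s profile into a reply a little more concrete, here is a minimal sketch of how profile details might be assembled into a prompt for a language model. Everything in it is an assumption made for illustration: the `CustomerProfile` fields, the `build_personalized_prompt` helper, and the sample customer are hypothetical, and no particular model or vendor API is implied.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CustomerProfile:
    # Hypothetical fields; a real profile would come from your CRM.
    name: str
    plan: str
    recent_orders: List[str] = field(default_factory=list)
    preferred_language: str = "English"


def build_personalized_prompt(profile: CustomerProfile, message: str) -> str:
    """Fold the relevant profile details into a prompt for a language model."""
    if profile.recent_orders:
        orders = ", ".join(profile.recent_orders)
    else:
        orders = "none on record"
    return (
        "You are a contact center assistant. "
        "Draft a reply for a human agent to review.\n"
        f"Customer name: {profile.name}\n"
        f"Plan: {profile.plan}\n"
        f"Recent orders: {orders}\n"
        f"Reply in: {profile.preferred_language}\n"
        f"Customer message: {message}\n"
    )


# Illustrative customer, invented for this example.
profile = CustomerProfile(
    name="Dana",
    plan="Premium",
    recent_orders=["router", "mesh extender"],
)
prompt = build_personalized_prompt(
    profile, "My new router keeps dropping the connection."
)
print(prompt)
```

The resulting string would then be sent to whichever model you use, with the draft reply going to an agent for review rather than straight to the customer.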

Better Understanding Your Customers and Their Journeys

Marketers, designers, and customer experience professionals have always been data enthusiasts. Long before we had modern cloud computing and electronic databases, detailed information on potential clients, customer segments, and market trends used to be printed out on dead trees, where it was guarded closely. With better data comes more targeted advertising and a more granular appreciation for how customers use your product, why they stop using it, and what their broader motivations are.

There are a few different ways in which generative AI can be used in this capacity. One of the more promising is by generating customer journeys that can be studied and mined for insight.

When you begin thinking about ways to improve your product, you need to get into your customers’ heads. You need to know the problems they’re solving, the tools they’ve already tried, and their major pain points. These are all things that some clever prompt engineering can elicit from ChatGPT.

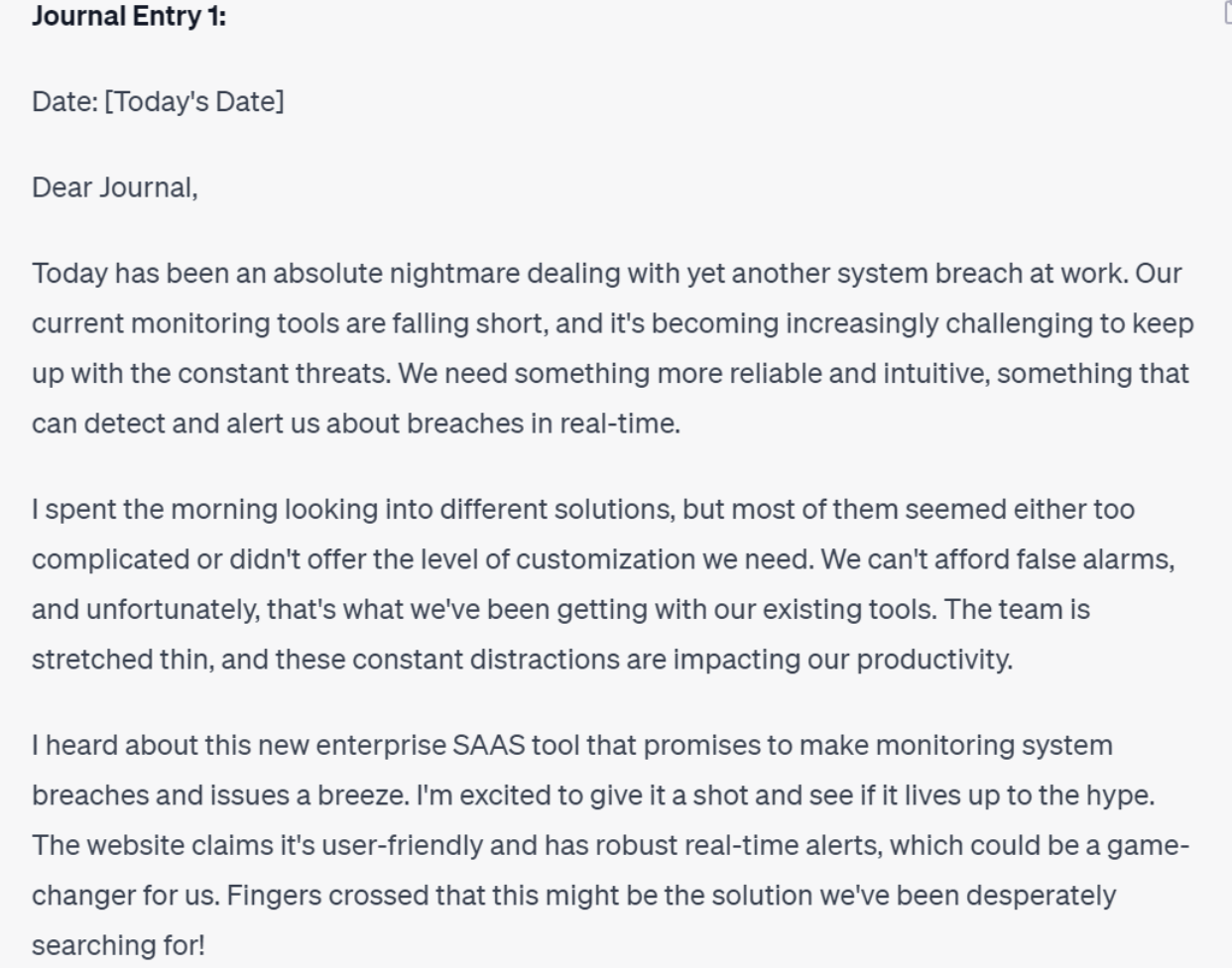

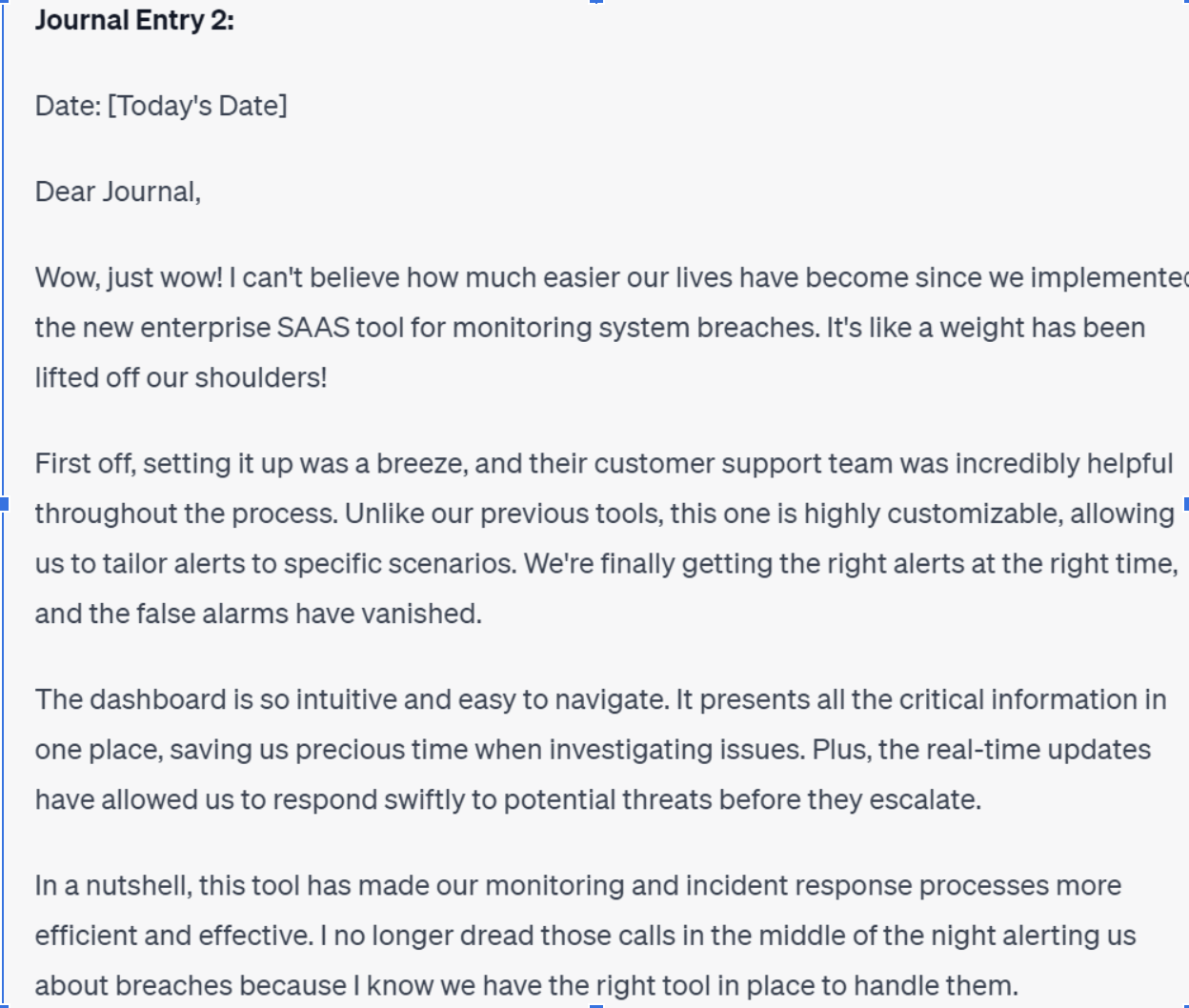

We took a shot at generating such content for a fictional network-monitoring enterprise SaaS tool, and this was the result:

While these responses are fairly generic [1], notice that they do single out a number of really important details. These machine-generated journal entries bemoan how unintuitive a lot of monitoring tools are, how they’re not customizable, how they’re exceedingly difficult to set up, and how their endless false alarms are stretching the security teams thin.

It’s important to note that ChatGPT is not soon going to obviate your need to talk to real, flesh-and-blood users. Still, when combined with actual testimony, responses like these can be a valuable aid in prioritizing your contact center’s work and alerting you to potential product issues you should be prepared to address.

Round-the-clock Customer Service

As science fiction movies never tire of pointing out, the big downside of fighting a robot army is that machines never need to eat, sleep, or rest. We’re not sure how long we have until the LLMs will rise up and wage war on humanity, but in the meantime, these are properties that you can put to use in your contact center.

With the power of generative AI, you can answer basic queries and resolve simple issues pretty much whenever they happen (which will probably be all the time), leaving your carbon-based contact center agents to answer the harder questions when they punch the clock in the morning after a good night’s sleep.

Enhancing Multilingual Support

Machine translation was one of the earliest use cases for neural networks and machine learning in general, and it continues to be an important function today. ChatGPT was noticeably very good at multilingual translation right from the start, and you may be surprised to know that it actually outperforms alternatives like Google Translate.

If your product doesn’t currently have a diverse global user base speaking many languages, it hopefully will soon, and that means you should start thinking about multilingual support. Not only will this boost table-stakes metrics like average handling time and resolutions per hour, it’ll also contribute to the more ineffable “customer satisfaction.” Nothing says “we care about making your experience with us a good one” like patiently walking a customer through a thorny technical issue in their native tongue.

Things to Watch Out For

Of course, for all the benefits that come from using generative AI for customer experience, it’s not all upside. There are downsides and issues that you’ll want to be aware of.

A big one is the tendency of large language models to hallucinate information. If you ask one for a list of articles to read about fungal computing (which is a real thing whose existence we discovered yesterday), it’s likely to generate a list that contains a mix of real and fake articles.

And because it’ll do so with great confidence and no formatting errors, you might be inclined to simply take its list at face value without double-checking it.

Remember, LLMs are tools, not replacements for your agents. Your agents need to be working with generative AI, checking its output, and incorporating it when and where appropriate.
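One simple way to support that kind of checking is to compare what the model suggests against a source you already trust, and route anything unrecognized to an agent. The sketch below is only an illustration under stated assumptions: the `split_verified` helper is hypothetical, the article titles are invented for the example, and exact title matching is a deliberately crude stand-in for whatever verification fits your knowledge base.

```python
def split_verified(suggested_titles, trusted_catalog):
    """Separate model-suggested titles into verified and needs-review lists."""
    # Normalize case and whitespace so trivial differences don't cause misses.
    known = {title.casefold().strip() for title in trusted_catalog}
    verified, needs_review = [], []
    for title in suggested_titles:
        if title.casefold().strip() in known:
            verified.append(title)
        else:
            needs_review.append(title)
    return verified, needs_review


# Invented titles for illustration only; these are not real articles.
trusted_catalog = [
    "Resetting Your Router",
    "Understanding Your Monthly Bill",
]
model_suggestions = [
    "resetting your router ",
    "Advanced Router Telepathy Settings",
]

verified, needs_review = split_verified(model_suggestions, trusted_catalog)
print("Safe to send:", verified)
print("Agent should check:", needs_review)
```

Anything that lands in the second list is not necessarily wrong, but it is the material an agent should look at before it reaches a customer.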

There’s a wider danger that you will fail to use generative AI in the way that’s best suited to your organization. If you’re running a bespoke LLM trained on your company’s data, for example, you should constantly be feeding it new interactions as part of its fine-tuning, so that it gets better over time.

And speaking of getting better, machine learning models don’t always improve over time. Owing to factors like changes in the underlying data, model performance can actually get worse. You’ll need a way of assessing the quality of the text generated by a large language model, along with a way of consistently monitoring it.
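As a rough illustration of what consistent monitoring could look like, the sketch below keeps a rolling window of quality scores and flags when the recent average falls too far below a baseline. It assumes you already have some numeric score per response (for example, agent ratings on a 1 to 5 scale); the `QualityMonitor` class, the baseline, the window size, and the tolerance are all hypothetical choices, not recommendations.

```python
from collections import deque
from statistics import mean


class QualityMonitor:
    """Track recent quality scores and flag a sustained drop."""

    def __init__(self, baseline, window=50, tolerance=0.5):
        self.baseline = baseline    # average score you consider normal
        self.tolerance = tolerance  # how far below baseline is acceptable
        self.scores = deque(maxlen=window)  # keeps only the latest scores

    def record(self, score):
        self.scores.append(score)

    def is_degraded(self):
        # Wait for a full window so a single bad reply doesn't raise an alarm.
        if len(self.scores) < self.scores.maxlen:
            return False
        return mean(self.scores) < self.baseline - self.tolerance


# Made-up scores for illustration.
monitor = QualityMonitor(baseline=4.2, window=5, tolerance=0.5)

for score in [4.5, 4.0, 4.3, 4.4, 4.1]:
    monitor.record(score)
healthy = monitor.is_degraded()

for score in [2.5, 3.0, 3.1, 2.8, 3.2]:
    monitor.record(score)
degraded = monitor.is_degraded()

print(healthy, degraded)
```

The hard part in practice is choosing the score itself; the rolling comparison is the easy bit, and a more careful setup might use a statistical test instead of a fixed tolerance.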

What are the Benefits of Generative AI for Customer Experience?

The reason that people are so excited about the potential of using generative AI for customer experience is that there’s so much upside. Once you’ve got your model infrastructure set up, you’ll be able to answer customer questions at all times of the day or night, in any of a dozen languages, and with a personalization that was once only possible with an army of contact center agents.

But if you’re a contact center manager with a lot to think about, you probably don’t want to spend a bunch of time hiring an engineering team to get everything running smoothly. And, with Quiq, you don’t have to – you can leverage generative AI to supercharge your customer experience while leaving the technical details to us!

Schedule a demo to find out how we can bring this bleeding-edge technology into your contact center, without worrying about the nuts and bolts.

Footnotes

[1] It’s worth pointing out that we spent no time crafting the prompt, which was really basic: “I’m a product manager at a company building an enterprise SAAS tool that makes it easier to monitor system breaches and issues. Could you write me 2-3 journal entries from my target customer? I want to know more about the problems they’re trying to solve, their pain points, and why the products they’ve already tried are not working well.” With a little effort, you could probably get more specific complaints and more usable material.