If by chance you haven’t heard of this new frontier in text-based customer communication, your first question is probably, “What is rich messaging?”

Well, you’re in luck! We wrote this piece specifically to answer that question. Here, we offer a deep dive into rich messaging, the capabilities it unlocks, and its implications for CX. By the end, CX directors will better understand why rich messaging should be central to their customer outreach strategy and the many ways it can make their job easier.

What Is Rich Messaging?

Rich messaging aims to support person-to-person or business-to-person communication with upgraded, interactive messages. Senders can attach high-resolution photos, videos, audio messages, GIFs, and an array of other media to enhance the receiver’s experience while conveying a lot more information with each message.

Before going any further, we should clarify that “rich messaging” is an umbrella term covering many modern messaging applications; it is not the same thing as any particular rich messaging protocol. Google’s Rich Communication Services (RCS), for example, is one specific approach to rich messaging rather than a synonym for it.

That said, you might still be wondering what, exactly, a Rich Communication Services message is. As you might guess, an RCS message is simply a rich message sent over the RCS protocol, with all the advantages that protocol offers.

For a number of reasons, rich messaging applications have increasingly supplanted SMS in both personal and professional outreach. SMS simply does not support many staples of modern communication, such as group chats or “read” receipts. What’s more, the reach of SMS will remain limited because it requires a cellular connection, whereas rich messages can be sent over the internet.

Though SMS will probably be around for a while, rich messaging is becoming increasingly popular as companies adopt applications like WhatsApp. Armed with these and similar channels, CX directors can now:

- More easily capture new customers with compelling outreach;

- Resolve customer issues directly via text, chat, or social media messaging (a huge advantage given how obsessed we’ve all become with our phones);

- Interact with customers in real-time, which is a capability more and more people are looking for when seeking help;

- Gather and act on analytics;

- Scale their communications while simultaneously reducing the burden on contact center agents.

Given these facts, it’s no surprise that more and more CX leaders are making texting a key component of building lasting customer relationships.

Why Is Expanding Communication Channels Important?

Expanding communication channels is important for the same reason the internet and the fax machine were: businesses must work to stay relevant, so they are constantly looking for the next technology that can improve and expand their interactions with customers and give them an edge over the competition. Let’s drill into this a little more.

As you probably know, customer expectations change over time. It is startling to think that the first text message was sent in 1992, but in the decades since, texting has become the default way of carrying on many different kinds of interactions. This is why it is crucial to develop communication channels that meet your customers where they are.

Modern consumers are incredibly busy, tech-savvy, and have a massive amount of information at their fingertips. They are not interested in calling a company for help unless there is absolutely no other choice, which is why they’ve been gravitating more toward text messaging for a while.

In the ongoing battle for limited customer attention, therefore, the forward-thinking CX director would be wise to put resources into this channel – along with any platforms that make that channel more fertile.

What Is Rich Messaging on Different Platforms?

Now that you have more perspective on what rich messaging is and what it offers, let’s spend some time talking about which platforms you should focus on.

There are a few major providers of rich messaging, but we’ll focus on Apple and WhatsApp. Apple has long been a communication giant, but with more than two billion users worldwide, Meta’s WhatsApp has certainly earned its spot at the table.

The sections below provide more details about how rich messaging works on each.

What Is Rich Messaging on Apple?

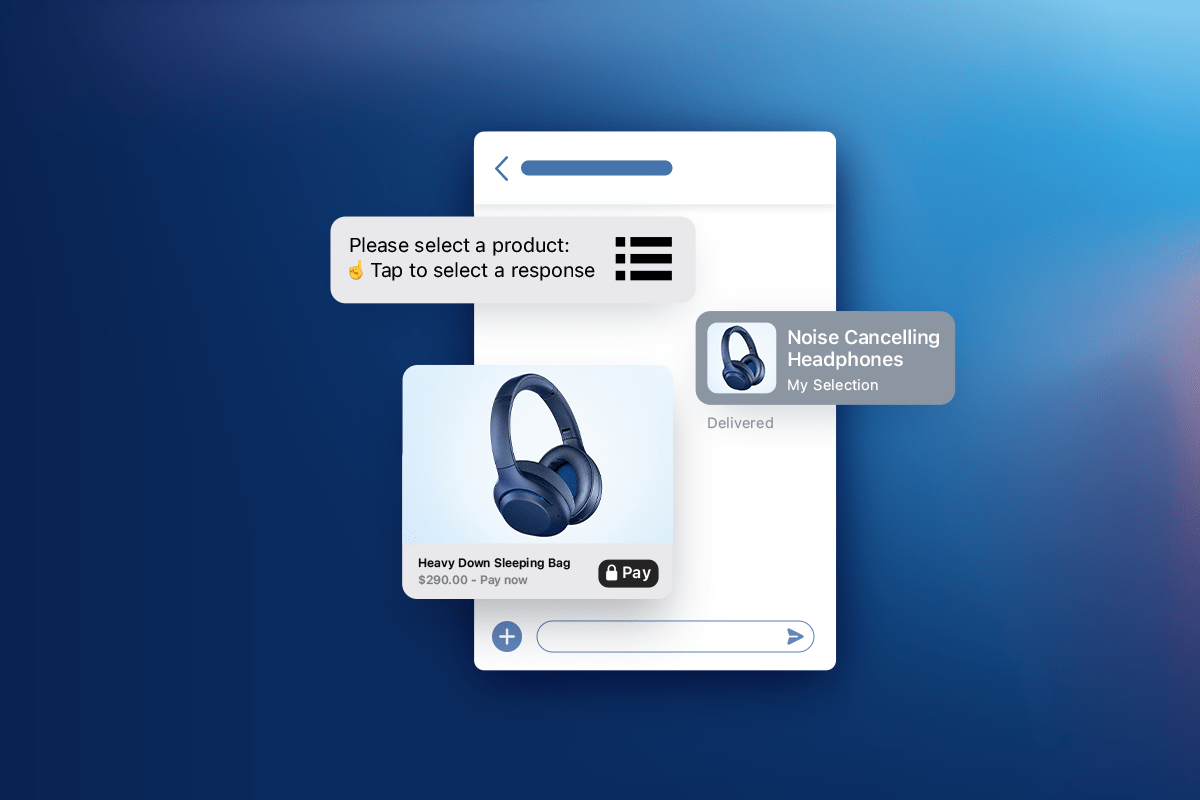

Through Apple Messages for Business, contact centers can offer their customers a direct line of communication. This allows for far greater speed and convenience, to say nothing of the personalization opportunities opened up by artificial intelligence (more on this shortly).

For more information, check out our dedicated article on rich communication with Apple Messages for Business.

What Is Rich Messaging on WhatsApp?

WhatsApp is a widely used application that supports rich text messaging, voice messages, and video calling for more than two billion users worldwide. Because its services run over an ordinary internet connection, WhatsApp lets users bypass the traditional costs associated with international communication, making it a cost-effective choice.

Given its vast user base, many international brands are adopting WhatsApp to connect with their customers. WhatsApp Business is an extension of WhatsApp that offers features tailored for business use.

It supports integration with tools like the Quiq conversational AI platform, which can automatically transcribe voice messages and export conversations for analysis with technologies like natural language processing.

For more information, check out our dedicated article on WhatsApp Business.

The Benefits of Rich Messages for Businesses

Engaging with consumers in more meaningful ways is one of the keys to driving sales and repeat purchases. Whether on Apple, WhatsApp, or another channel, rich messaging is one of the best ways of interacting with customers; it’s convenient and powerful enough to help a CX leader rise above their competition.

Below, we will get into more specifics about the advantages to be had from using rich messaging.

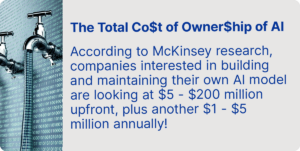

1. Cost-Effectiveness

They don’t call it “the bottom line” for nothing: your budget probably ranks pretty high on your list of priorities. Because it works over the internet, rich messaging is a great way for CX directors to connect with customers without breaking the bank.

But rich messaging can also help your organization save money by reducing customer support costs. When consumers need to talk to someone at your business, they can reach knowledgeable agents (or a large language model trained on those agents’ output) through your rich messaging platform. This reduces the hardware and staffing required to run a full contact center, freeing up savings you can invest in other areas of your business.

In the same vein, rich messaging makes asynchronous communication far easier. Because customers don’t expect an instant reply to every message, agents can handle multiple conversations at the same time, resulting in further savings.

Finally, rich messaging is far more scalable than almost any other approach to customer outreach, especially when you effectively leverage AI. Once you’ve figured out what you want your message to be, communicating it to ten times as many people is relatively straightforward with rich messaging.

2. Real-Time Insights

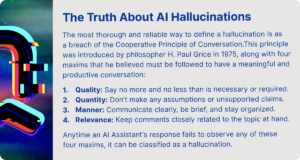

When companies integrate rich messaging with a platform that offers strong support for real-time analytics, they gain access to conversation data that provides the insights they need to improve contact center performance.

They can generate reports on click rates and other helpful interaction metrics, for instance, giving CX leaders a feedback loop they can use to test changes and see what improves customer satisfaction, loyalty, and lifetime value.

3. Rich Messaging is Native to the Devices Customers are Already Using

You could pay for the most compelling billboard in the history of marketing, but if it’s on the moon where no one will see it, it’s not going to do you much good. For this reason, we’ve long pointed out that it’s important to meet your customers where they are – and these days, they’re on their phones.

Combined with the statistics in the following section, this makes a very strong case for rich messaging as a central pillar in the CX director’s communications strategy.

4. Increased Engagement

When developing a customer communication strategy, it’s important to evaluate the potential engagement level of various channels. As it turns out, text messaging consistently achieves higher open and response rates compared to other methods.

The data supporting this is quite strong: in a 2018 survey, fully three-quarters of respondents indicated that they’d prefer to interact with brands through rich messages.

This high level of engagement demonstrates the significant potential of text messaging as a communication strategy. Considering that only about 25% of emails are opened and read, it becomes clear that investing in text messaging as a primary communication channel is a wise decision for effectively reaching and engaging customers.

5. The Human Touch (but with AI!)

Customers expect more personalization these days, and rich messaging gives businesses a way to customize communication with unprecedented scale and sophistication.

This customization is powered by machine learning, a technology at the cutting edge of automated personalization. A familiar example is Netflix, which uses algorithms to detect viewer preferences and recommend corresponding shows. Now, thanks to advances in generative AI, similar technology is being integrated into text messaging.

Previously, language models lacked the necessary flexibility for personalized customer interactions, often sounding mechanical and inauthentic. However, today’s models have greatly enhanced agents’ abilities to adapt their conversations to fit specific contexts. While these models haven’t replaced the unique qualities of human interaction, they mark a significant improvement for CX directors aiming to improve the customer experience, keep customers loyal, and boost their lifetime value. What’s more, when used over time, these innovations will help a CX leader stand out in a crowded marketplace while making better decisions.

To make use of this, though, it helps to partner with a platform that offers this functionality out of the box.

6. Security

In addition to streamlining connections between your organization and its consumers, rich messaging may offer dependable security and peace of mind.

Trust and transparency have always been important, but with deepfakes and data breaches on the rise, they’re more crucial than ever. Some rich messaging applications, like WhatsApp, support end-to-end encryption, which protects messages as they travel from one end of the conversation to the other, so your customers can interact with you with greater confidence that their information is safe.

But this is not true of every rich messaging service, so be sure to do your own research first.

What Is Rich Messaging? It’s the Future!

Businesses across every industry need to update their approach to messaging to remain relevant with consumers, but that’s especially true for CX leaders. Significant data shows that traditional customer communication channels, like phone, email, and web chat, have already fallen to the bottom of the preference list, and you need a plan in place that allows you to react to changes in customer desires.

Rich messaging is the technology that makes this possible, and it’s even more impactful when you partner with a platform like Quiq that enables personalization, analytics, and better engagement with your customers. Read more here to learn about the communication channels we support!
