Many customer experience leaders are considering how generative AI might impact their businesses. Naturally, this has led to an explosion of related questions, such as whether it’s worth training a model in-house or working with a conversational AI platform, whether generative AI might hallucinate in harmful ways, and how generative AI can enhance agent performance.

One especially acute source of confusion centers on AI’s data reliance, or the role that data—including your internal data—plays in AI systems. This is understandable, as there remains a great deal of misunderstanding about how large language models are trained and how they can be used to create an accurate, helpful AI assistant.

If you count yourself among the confused, don’t worry. This article will provide a careful look at the relationship between AI and your CX data, equipping you to decide whether you have everything you need to support the use of generative AI, and how to efficiently gather more, if you need to.

Let’s dive in!

What’s the Role of CX Data in Teaching AI?

In our deep dive into large language models, we spent a lot of time covering how public large language models are trained to predict the next word in a piece of text. They’re shown many sentences with the last word or two omitted (“My order is ___”), and from this, they learn that the missing word is likely something like “missing” or “late.”

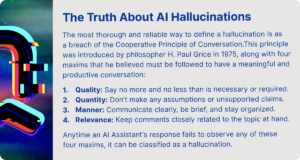
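The training objective described above can be illustrated with a deliberately tiny Python sketch. It is not how a large language model works internally, since real models use neural networks over tokens rather than word counts. It only shows the same basic idea: learning from examples which word tends to fill the blank. The corpus and the `train` and `predict_next` helpers are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for the huge text collections LLMs learn from.
CORPUS = [
    "my order is late",
    "my order is missing",
    "my order is late again",
    "my refund is pending",
]

def train(sentences: list[str]) -> dict[tuple[str, ...], Counter]:
    """Count which word follows each three-word context (a simple n-gram model)."""
    counts: dict[tuple[str, ...], Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(3, len(words)):
            context = tuple(words[i - 3:i])
            counts[context][words[i]] += 1
    return counts

def predict_next(counts, prompt: str) -> list[tuple[str, int]]:
    """Return candidate next words for the prompt's last three words, most frequent first."""
    context = tuple(prompt.lower().split()[-3:])
    return counts.get(context, Counter()).most_common()

model = train(CORPUS)
print(predict_next(model, "My order is"))  # → [('late', 2), ('missing', 1)]
```

A neural model generalizes far beyond exact word sequences it has seen, but the output has the same shape: a ranking of plausible continuations, learned purely from text.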

The latest CX solutions have done an excellent job leveraging these capabilities, but the current generation of language models still tends to hallucinate (i.e., make up) information.

To get around this, savvy CX directors have begun using a technique called retrieval-augmented generation (RAG).

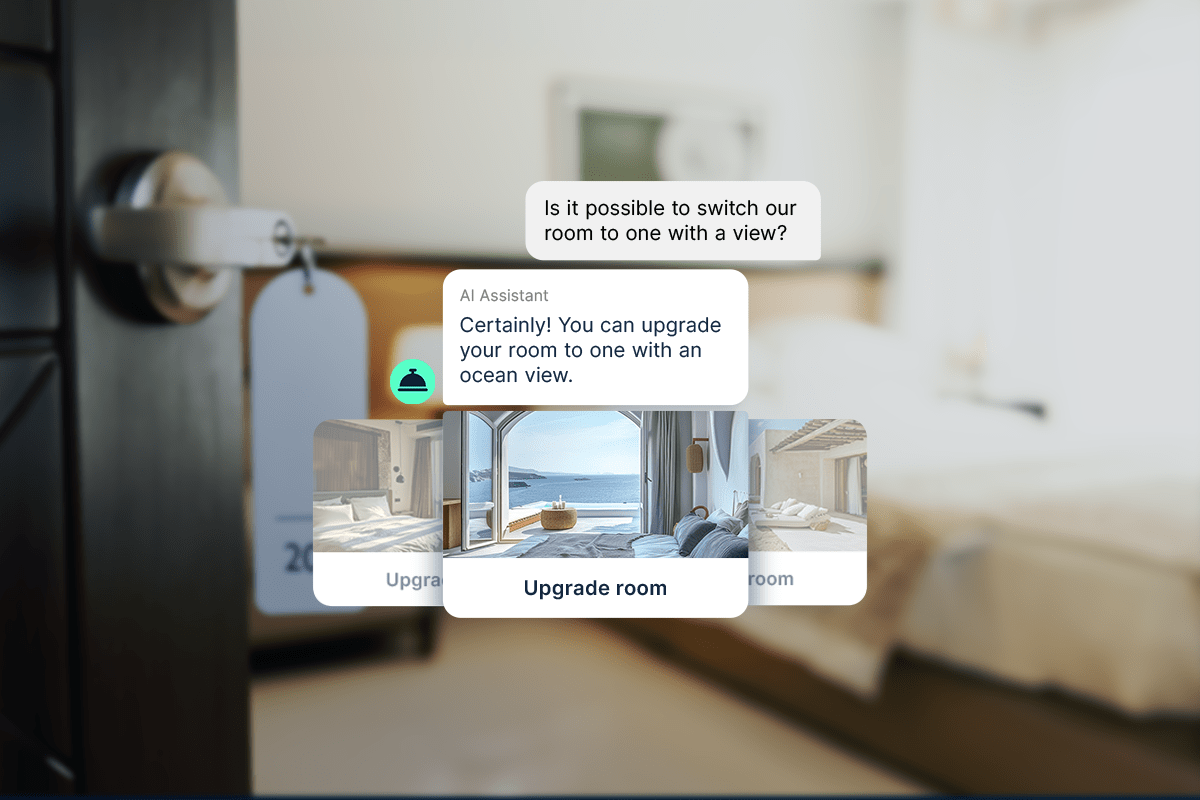

With RAG, models are given access to additional data sources that they can use when generating a reply. You could hook an AI assistant up to an order database, for example, which would allow it to accurately answer questions like “Does my order still qualify for a refund?”
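Here is a minimal sketch of the retrieval step for the refund example, assuming a hypothetical order table held in a Python dict and a hypothetical order-ID format (`A` followed by four digits). A production system would query the real order database or a search index, and the final model call is omitted here; the point is only that retrieved facts are placed into the prompt alongside the customer’s question.

```python
import re

# Hypothetical order records standing in for a real order database.
ORDERS = {
    "A1001": {"status": "delivered", "delivered_on": "2024-05-02"},
    "A1002": {"status": "in transit", "delivered_on": None},
}

# Hypothetical policy snippet from a knowledge base.
REFUND_POLICY = "Delivered orders can be refunded within 30 days of delivery."

def retrieve(question: str) -> list[str]:
    """Look up any order IDs mentioned in the question and return them as text snippets."""
    snippets = []
    for order_id in re.findall(r"\bA\d{4}\b", question):
        record = ORDERS.get(order_id)
        if record is not None:
            snippets.append(
                f"Order {order_id}: status={record['status']}, "
                f"delivered_on={record['delivered_on']}"
            )
    return snippets

def build_prompt(question: str) -> str:
    """Augment the customer's question with retrieved facts before it is sent to a model."""
    context = "\n".join([REFUND_POLICY, *retrieve(question)])
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

print(build_prompt("Does my order A1001 still qualify for a refund?"))
```

Instructing the model to rely only on the supplied context, and to say when it is insufficient, is a common way to keep answers tied to the authoritative source.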

Grounding replies in an authoritative source is also what makes RAG effective against hallucination: when a model can draw on verified data, it is much less likely to fabricate information.

How Do I Know If I Have the Right Data for AI?

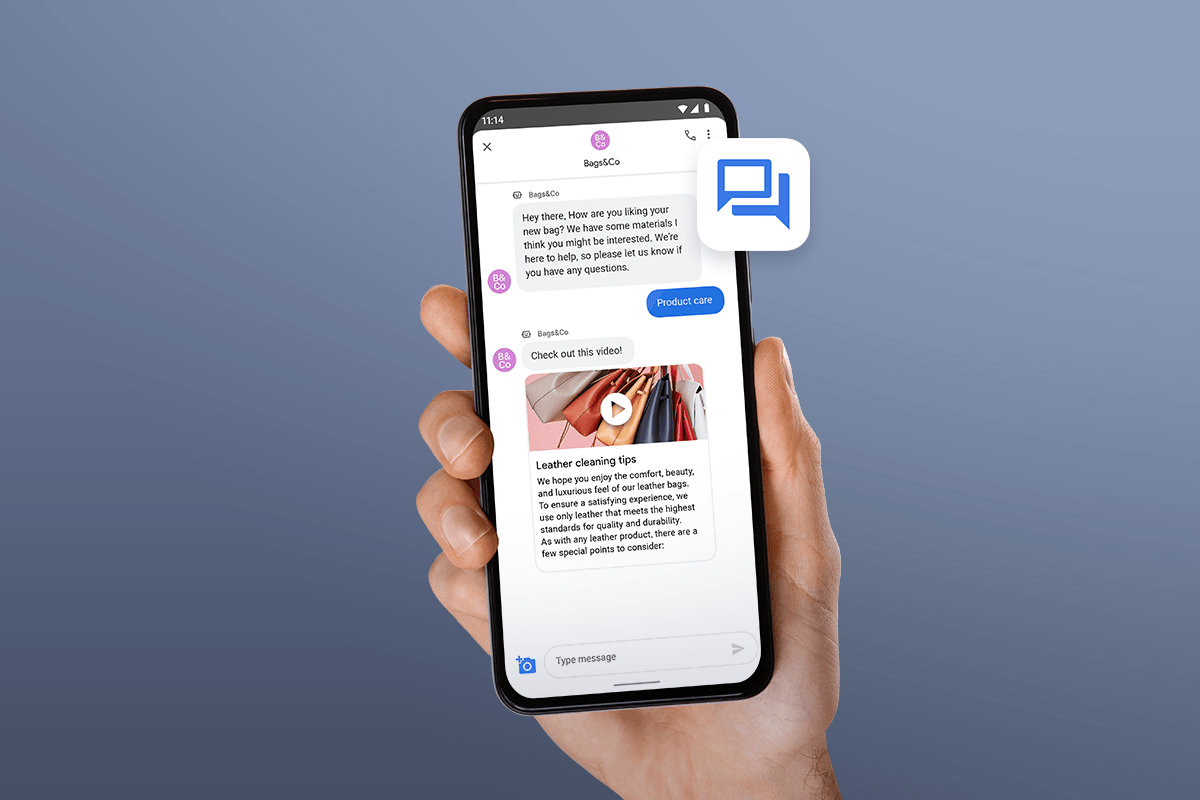

CX data tends to fall into two broad categories:

- Knowledge, like training manuals and PDFs

- Data from internal systems, such as issue tickets, chats, and call transcripts

Luckily for CX leaders, there’s usually enough of both lying around to meet an AI assistant’s need for data. Dozens of tools exist for tracking important information – customer profiles, information related to payment and shipping, and the like – and nearly all offer API endpoints that allow them to integrate with your existing technology stack.

What’s more, the best data for an AI assistant usually looks and feels just like the data your human agents already use, so you don’t need to curate a bespoke data repository. All of this is to say that you might already have everything you need for optimal AI performance, even if your sources are scattered or need to be updated.

Processing Data for Generative AI

Data processing work is far from trivial, and outsourcing it to a dedicated set of tools is often the wiser choice. A conversational AI platform built for generative AI should make it easy for you to define how your data is processed.

That said, you might still need to work on cleaning and formatting the data, which can take some effort.

Understanding the steps involved in preparing data for AI is a big subject, but you’ll almost certainly need to do a mix of the following:

- Extract: By an often-cited estimate, around 80% of enterprise data exists in unstructured or semi-structured formats, such as HTML pages, PDFs, CSV files, and images. This data has to be gathered, and you may need to “clean” it by removing unwanted content and irrelevant sections, just as you would for a human agent.

- Transform: Your AI assistant will likely need to answer many kinds of questions. If you’re using retrieval-augmented generation, you may need to convert your content into embeddings (numerical vectors that capture the meaning of text) so the assistant can retrieve the passages relevant to each question, or you may need to prepare and enrich your answers so they are easier to find.

- Load: Finally, you will need to load the transformed content into a store your AI assistant can query, such as a vector database.
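The three steps above can be sketched end to end in Python. This is a minimal illustration under loud assumptions: the HTML pages are made up, `embed` is a toy word-count vector standing in for a real embedding model, and `InMemoryVectorStore` is a hypothetical stand-in for a vector database. Only the standard library is used.

```python
import math
import re
from collections import Counter
from html.parser import HTMLParser

class _TextExtractor(HTMLParser):
    """Collects visible text from an HTML page, skipping <script> and <style> blocks."""
    def __init__(self):
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)

def extract(html: str) -> str:
    """Extract: strip markup and collapse whitespace."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())

def embed(text: str) -> Counter:
    """Transform: toy bag-of-words vector (hypothetical; real pipelines call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryVectorStore:
    """Load: a minimal, hypothetical stand-in for a vector database."""
    def __init__(self):
        self.rows: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.rows.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:k]]

pages = [
    "<html><body><h1>Refunds</h1><p>Refunds are issued within 5 business days.</p>"
    "<script>trackVisit()</script></body></html>",
    "<html><body><h1>Shipping</h1><p>Standard shipping takes 3 to 7 days.</p></body></html>",
]
store = InMemoryVectorStore()
for page in pages:
    store.add(extract(page))

print(store.search("how long do refunds take?"))  # → ['Refunds Refunds are issued within 5 business days.']
```

Swapping the toy pieces for a real embedding model and vector database changes the quality of retrieval, not the shape of the pipeline: clean the text, turn it into vectors, store them, and look up the nearest ones at question time.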

Remember: The GenAI data process isn’t trivial, but it’s also easier than you think, especially if you find the right partner. Quiq’s native “dataset transformation” functionality, for example, facilitates rewriting text, scrubbing unwanted characters, augmenting a dataset (by generating a summary of it), structuring it in new ways, and much more.
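Quiq’s dataset transformation is configured inside its own platform, and nothing below reflects its API. As a generic illustration of two of the transformation types just mentioned, scrubbing unwanted characters and restructuring text, here is a minimal Python sketch. The `scrub` and `to_records` helpers and the “Q:/A:” input format are assumptions made for the example.

```python
import re
import unicodedata

def scrub(text: str) -> str:
    """Normalize Unicode, drop control and invisible format characters, collapse spaces."""
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space -> plain space
    # Keep newlines and tabs; drop other control (Cc) and format (Cf) characters such as
    # zero-width spaces. Note this also drops zero-width joiners used in some emoji.
    text = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch) not in ("Cc", "Cf")
    )
    return re.sub(r"[ \t]+", " ", text).strip()

def to_records(faq_text: str) -> list[dict[str, str]]:
    """Restructure hypothetical 'Q: ... / A: ...' plain text into question/answer records."""
    records = []
    question = None
    for line in scrub(faq_text).splitlines():
        line = line.strip()
        if line.startswith("Q:"):
            question = line[2:].strip()
        elif line.startswith("A:") and question:
            records.append({"question": question, "answer": line[2:].strip()})
            question = None
    return records

raw = (
    "Q:\u00a0Can I change my address?\u200b\n"
    "A: Yes, until your order ships.\n\n"
    "Q: Do you ship abroad?\nA: Not yet."
)
print(to_records(raw))
```

Structured question/answer records like these are easier to embed, deduplicate, and review than a raw text dump.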

What Do I Need to Create Additional Data for AI?

As we said above, your existing data may already be sufficient for optimal AI performance. This isn’t always the case, however, and it’s worth saying a few words about when you will need to create a new resource for a model.

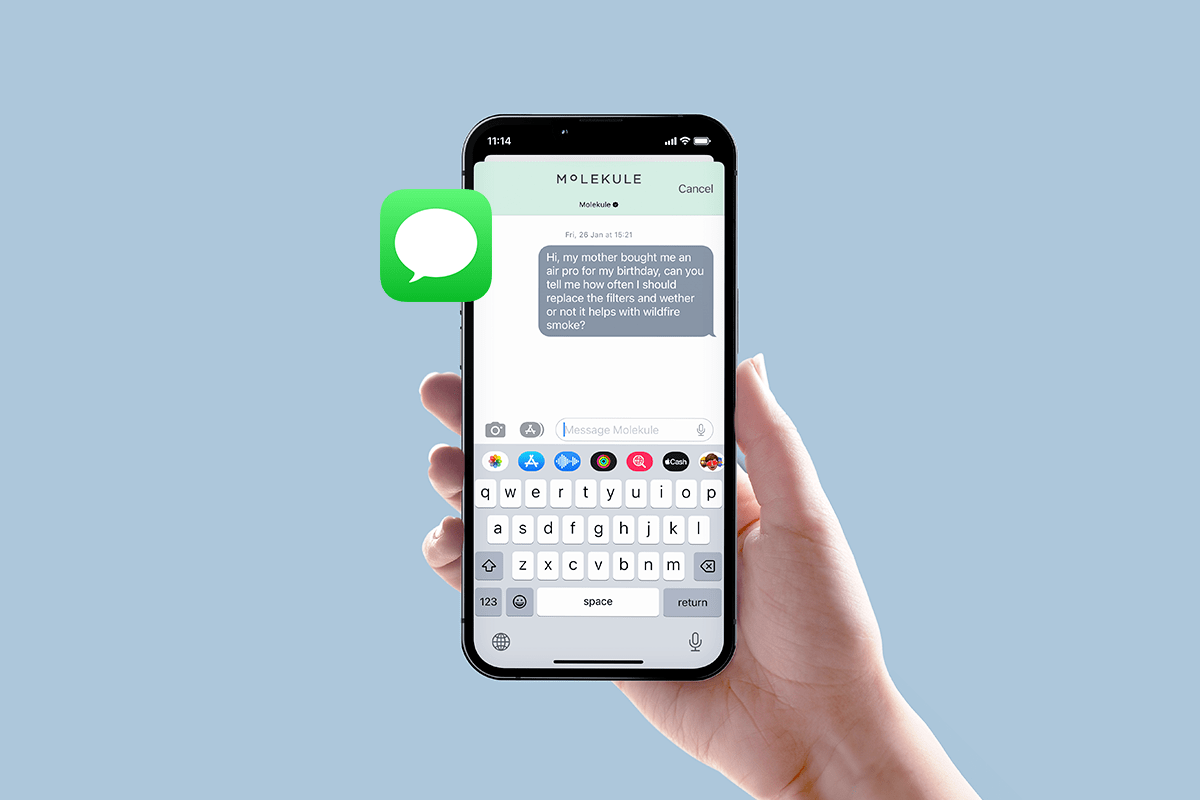

In our experience, the most frequent data gaps arise when common or important questions aren’t addressed anywhere in your documentation. Start by writing content about those questions that a model can use to generate replies, and then work your way out to less frequent ones.

One idea our clients use successfully is to ask human agents what questions they see most frequently. Here’s an example of an awesome, simple FAQ from LOOP auto insurance:

When you’re doing this, remember: it’s fine to start small. The quality of your supplementary content is more important than the quantity, and a few sentences in a single paragraph will usually do the trick.

The most important task is to put a framework in place for identifying your data gaps so that you can close them. This could include analyzing past customer questions or proactively labeling existing questions you don’t have answers for.

Wrapping Up

There’s no denying the significance of relevant data in AI advancements, but as we’ve hopefully made clear, you probably already have most of what you need, and the process of preparing it for AI is a lot more straightforward than many people think.

If you’re interested in learning more about optimal AI performance and how to achieve it, check out our free e-book addressing the misconceptions surrounding generative AI. Armed with the insights it contains, you can figure out how much AI could impact your contact center, and how to proceed.