A Guide to Fine-Tuning Pretrained Language Models for Specific Use Cases

Over the past half-year, large language models (LLMs) like ChatGPT have proven remarkably useful for a wide range of tasks, including machine translation, code analysis, and customer interactions in places like contact centers.

For all this power and flexibility, however, it is often still necessary to use fine-tuning to get an LLM to generate high-quality output for specific use cases.

Today, we’re going to do a deep dive into this process, understanding how these models work, what fine-tuning is, and how you can leverage it for your business.

What is a Pretrained Language Model?

First, let’s establish some background context by tackling the question of what pretrained models are and how they work.

The “GPT” in ChatGPT stands for “generative pretrained transformer”, and this gives us a clue as to what’s going on under the hood. ChatGPT is a generative model, meaning its purpose is to create new output; it’s pretrained, meaning that it has already seen a vast amount of text data by the time end users like us get our hands on it; and it’s a transformer, which refers to the fact that it’s built out of transformer modules stacked into layers, with billions of learned parameters spread across them.

If you’re not conversant in the history of machine learning it can be difficult to see what the big deal is, but pretrained models are a relatively new development. Once upon a time in the ancient past (i.e. 15 or 20 years ago), it was an open question as to whether engineers would be able to pretrain a single model on a dataset and then fine-tune its performance, or whether they would need to approach each new problem by training a model from scratch.

This question was largely resolved around 2013, when image models trained on the ImageNet dataset began sweeping competitions left and right. Since then it has become more common to use pretrained models as a starting point, but we want to emphasize that this approach does not always work. There remain a vast number of important projects for which building a bespoke model is the only way to go.

What is Transfer Learning?

Transfer learning refers to when an agent or system figures out how to solve one kind of problem and then uses this knowledge to solve a different kind of problem. It’s a term that shows up all over artificial intelligence, cognitive psychology, and education theory.

Author, chess master, and martial artist Josh Waitzkin captures the idea nicely in the following passage from his blockbuster book, The Art of Learning:

“Since childhood I had treasured the sublime study of chess, the swim through ever-deepening layers of complexity. I could spend hours at a chessboard and stand up from the experience on fire with insight about chess, basketball, the ocean, psychology, love, art.”

Transfer learning is a broader concept than pretraining, but the two ideas are closely related. In machine learning, competence can be transferred from one domain (generating text) to another (translating between natural languages or creating Python code) by pretraining a sufficiently large model.

What is Fine-Tuning a Pretrained Language Model?

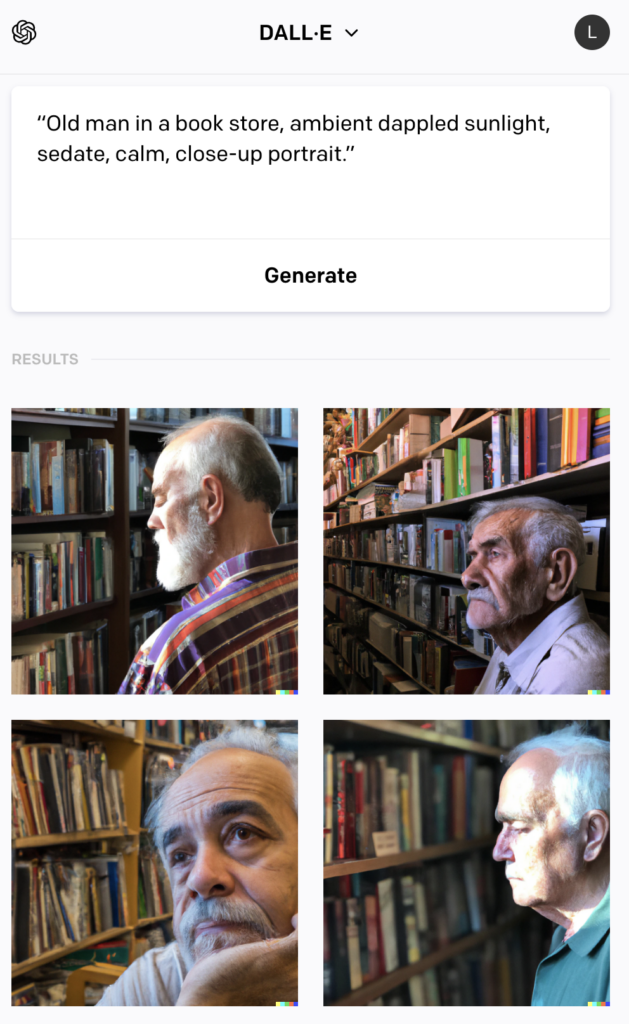

Fine-tuning a pretrained language model occurs when the model is repurposed for a particular task by being shown illustrations of the correct behavior.

If you’re in a whimsical mood, for example, you might give ChatGPT a few dozen limericks so that its future output always has that form.

It’s easy to confuse fine-tuning with a few other techniques for getting optimum performance out of LLMs, so it’s worth getting clear on terminology before we attempt to give a precise definition of fine-tuning.

Fine-Tuning a Language Model vs. Zero-Shot Learning

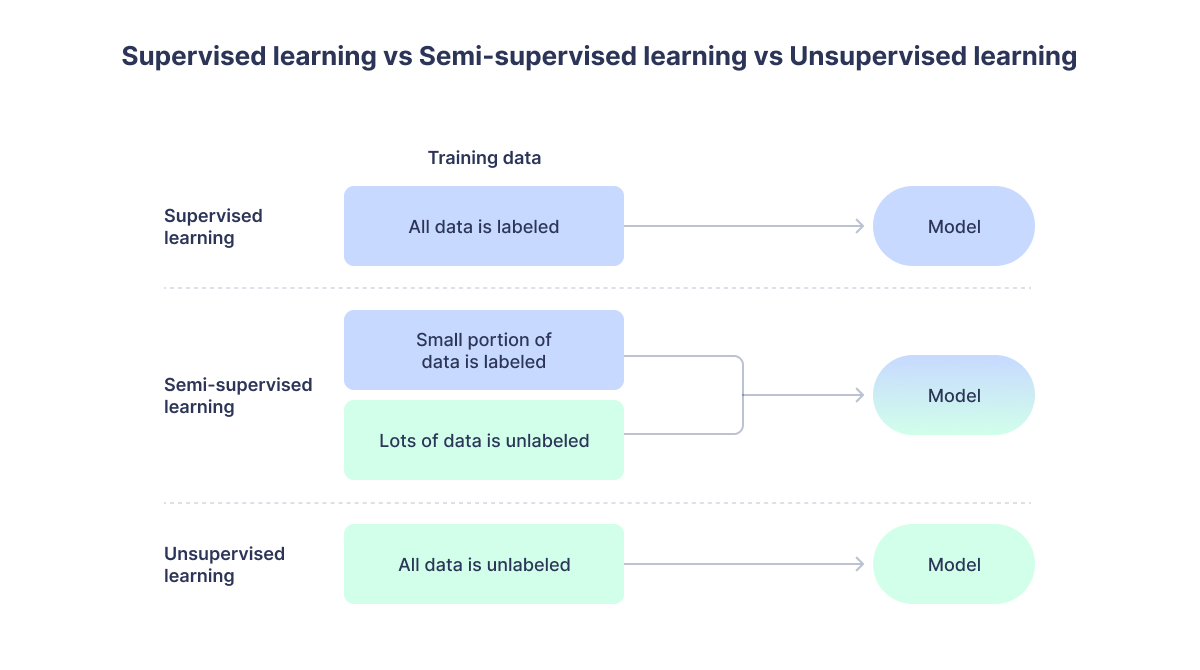

Zero-shot learning is what you get out of a language model when you feed it a prompt that contains no examples of the output you want. It’s not technically a form of fine-tuning at all, but it comes up in a lot of these conversations so it needs to be mentioned.

(NOTE: It is sometimes claimed that prompt engineering counts as zero-shot learning, and we’ll have more to say about that shortly.)

Fine-Tuning a Language Model vs. One-Shot Learning

One-shot learning is showing a language model a single example of what you want it to do. Continuing our limerick example, one-shot learning would be giving the model one limerick and instructing it to format its replies with the same structure.

Fine-Tuning a Language Model vs. Few-Shot Learning

Few-shot learning is more or less the same thing as one-shot learning, but you give the model several examples of how you want it to act.

How many counts as “several”? There’s no agreed-upon number that we know about, but probably 3 to 5, or perhaps as many as 10. More than this and you’re arguably not doing “few”-shot learning anymore.
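To make the distinction concrete, here is a minimal sketch of how zero-, one-, and few-shot prompts differ only in how many worked examples they carry. The function name `build_prompt`, the `Request:`/`Reply:` labels, and the sample limerick are all our own illustrative choices, not a format any particular model requires.

```python
def build_prompt(instruction, examples, query):
    """Assemble a prompt with zero, one, or several worked examples.

    `examples` is a list of (request, reply) pairs. An empty list gives a
    zero-shot prompt, one pair a one-shot prompt, several a few-shot prompt.
    """
    parts = [instruction]
    for request, reply in examples:
        parts.append(f"Request: {request}\nReply: {reply}")
    # The final block is left open so the model supplies the reply.
    parts.append(f"Request: {query}\nReply:")
    return "\n\n".join(parts)


# A made-up limerick used as the single worked example.
limerick = (
    "A model that hoped to write verse\n"
    "Found its rhymes going from bad to worse;\n"
    "It was shown just one sample,\n"
    "Which proved more than ample,\n"
    "And now it is tidy and terse."
)

instruction = "Answer every request in the form of a limerick."

zero_shot = build_prompt(instruction, [], "Describe the ocean.")
one_shot = build_prompt(
    instruction,
    [("Describe a language model.", limerick)],
    "Describe the ocean.",
)

print(one_shot)
```

Passing three to five pairs in `examples` would turn the same call into a few-shot prompt. In every case the model’s parameters are untouched; only the prompt changes.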

Fine-Tuning a Language Model vs. Prompt Engineering

Large language models like ChatGPT are stochastic and incredibly sensitive to the phrasing of the prompts they’re given. For this reason, it can take a while to develop a sense of how to feed the model instructions such that you get what you’re looking for.

The emerging discipline of prompt engineering is focused on cultivating this intuitive feel. Minor tweaks in word choice, sentence structure, etc. can have an enormous impact on the final output, and prompt engineers are those who have spent the time to learn how to make the most effective prompts (or are willing to just keep tinkering until the output is correct).

Does prompt engineering count as fine-tuning? We would argue that it doesn’t, primarily because we want to reserve the term “fine-tuning” for the more extensive process we describe in the next few sections.

Still, none of this is set in stone, and others might take the opposite view.

Distinguishing Fine-Tuning From Other Approaches

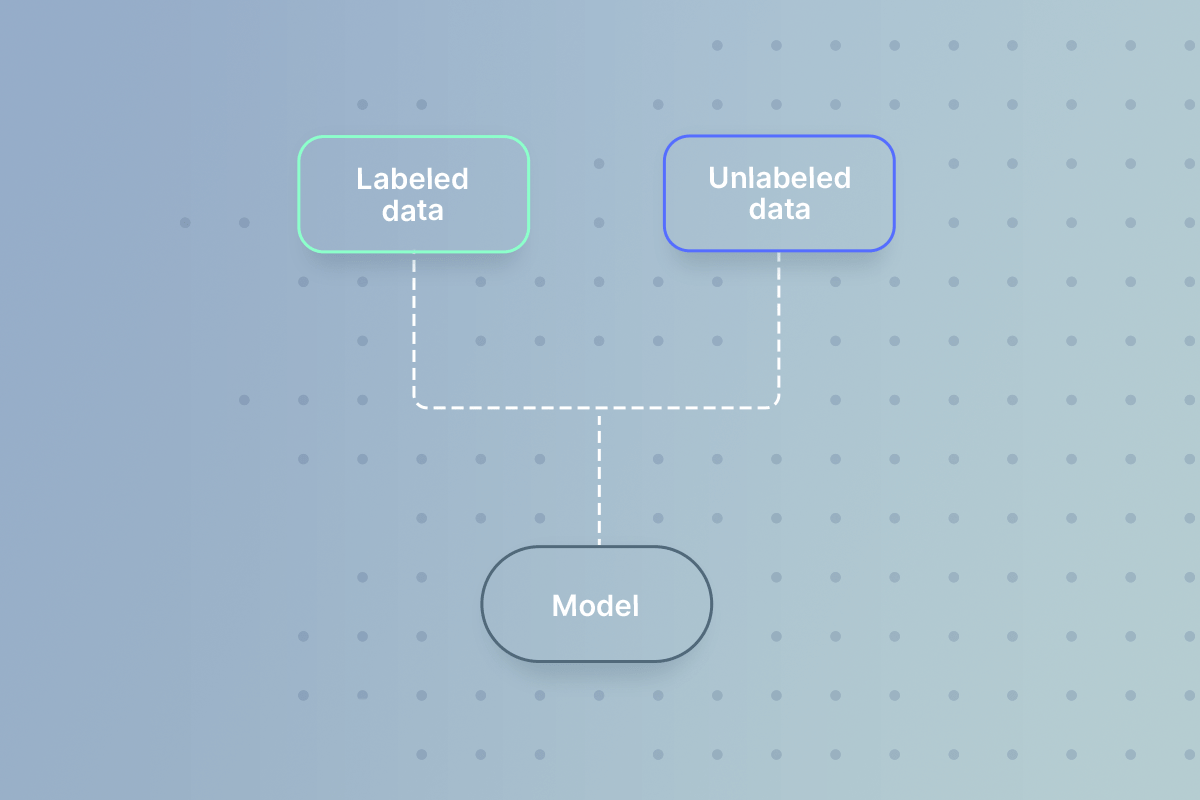

Having discussed prompt engineering and zero-, one-, and few-shot learning, we can give a fuller definition of fine-tuning.

Fine-tuning is taking a pretrained language model and optimizing it for a particular use case by giving it many examples to learn from. How many you ultimately need will depend a lot on your task – particularly how different the task is from the model’s training data and how strict your requirements for its output are – but you should expect it to take on the order of a few dozen or a few hundred examples.

Though it bears an obvious similarity to one-shot and few-shot learning, fine-tuning will generally require more work to come up with enough examples, and you might have to build a rudimentary pipeline that feeds the examples in through the API. It’s almost certainly not something you’ll be doing directly in the ChatGPT web interface.

How Can I Fine-Tune a Pretrained Language Model?

Having gotten this far, we can now turn our attention to what the fine-tuning procedure actually consists of. The basic steps are: deciding what you want to accomplish, picking a base model, gathering the requisite data (and formatting it correctly), feeding it to your model, and evaluating the results.

Let’s discuss each in turn.

Deciding on Your Use Case

The obvious place to begin is figuring out exactly what it is you want to fine-tune a pretrained model to do.

It may seem as though this is too obvious to be included as its own standalone step, but we’ve singled it out because you need to think through the specifics of what you’re trying to accomplish. It’s not enough to say “We want to fine-tune this model to write tweets for us”; you have to consider questions like “Should the tone be formal or informal?” and “Are we okay with it adding emojis?”

This matters because it informs the data that you gather for fine-tuning. If you feed the model irreverent tweets with lots of 🙂s and 🚀s, you can expect that to show up in the tweets it generates for you.

Picking a Base Model

Next, you have to figure out which model you want to fine-tune. This is not the place to get into a discussion on all the “foundation” models that are available today, but suffice it to say that Hugging Face, OpenAI, Anthropic, and the other companies in the pretrained model space offer a variety of different options. BigScience has the BLOOM and T0pp models, OpenAI has Ada, Babbage, Curie, and Davinci, and so on.

Different models have different fine-tuning requirements, so this choice will impact how you structure your pipeline later.

Gathering and Formatting the Fine-Tuning Data

The heart of fine-tuning a pretrained model is gathering the samples from which it will learn. This is more or less exactly what it sounds like: you compile a list of prompts and desired responses. This is the data that will adjust the model’s internal parameters, optimizing it for your use case.

AI educator Dave Shapiro has a tutorial on fine-tuning GPT-3 to be a tutor for younger students, and here is one of the training samples he used (“TIM” is the name he’s using for the tutor):

User: Hey TIM, I’m interested in learning more about Charles Darwin. Can you tell me a bit about him?

TIM: Charles Darwin was an English naturalist and geologist who developed the theory of evolution by natural selection. He was born into a wealthy family and was able to attend the prestigious Shrewsbury School. After graduation, he enrolled at the University of Edinburgh to study medicine, but he soon dropped out and enrolled at Christ’s College, Cambridge.

User: Interesting! I didn’t know he was born into a wealthy family.

TIM: Yes, his family was very wealthy and his father was a doctor. Darwin’s grandfather, Erasmus Darwin, was a well-known scientist and wrote several books on botany and evolution.

User: Wow, I had no idea. What made Darwin decide to drop out of medical school and study at Cambridge?

TIM: Darwin was interested in natural history and had developed a keen interest in taxidermy. After dropping out of medical school, he traveled to Wales with some friends to study geology. It was during this time that he began to develop his theory of evolution.

One thing you’ll note is that this sample is pretty long. It could take a while to come up with hundreds of these to fine-tune your model, but that’s actually something a generative pretrained language model like ChatGPT can help with. Just be sure you have a human go over the samples and check them for accuracy, or you risk compromising the quality of your outputs.

Another thing to think about is how you’ll handle adversarial behavior and edge cases. If you’re training a conversational AI chatbot for a contact center, for example, you’ll want to include plenty of instances of the model calmly and politely responding to an irate customer. That way, your output will be similarly calm and polite.

Lastly, you’ll have to format the fine-tuning data according to whatever specifications are required by the base model you’re using. It’ll probably be something similar to JSON, but check the documentation to be sure.
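As a rough illustration of what that formatting step can look like, the sketch below writes prompt/response pairs as JSON Lines (one JSON object per line). The field names `prompt` and `completion`, the helper name `to_jsonl`, and the way the sample is split are assumptions made for the example; the base model you pick may expect a different schema, so treat its documentation as the authority.

```python
import json


def to_jsonl(samples):
    """Serialize (prompt, completion) pairs as JSON Lines text."""
    lines = []
    for prompt, completion in samples:
        if not prompt.strip() or not completion.strip():
            raise ValueError("every sample needs a non-empty prompt and completion")
        # Field names are illustrative; check your model's required schema.
        record = {"prompt": prompt, "completion": completion}
        # ensure_ascii=False keeps emojis and accented characters readable.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


# One sample, adapted from the opening of the tutoring conversation above.
samples = [
    (
        "User: Hey TIM, I'm interested in learning more about Charles Darwin. "
        "Can you tell me a bit about him?\nTIM:",
        " Charles Darwin was an English naturalist and geologist who developed "
        "the theory of evolution by natural selection.",
    ),
]

jsonl_text = to_jsonl(samples)
print(jsonl_text)
```

Rejecting empty prompts or completions up front is a cheap sanity check, but it is no substitute for the human review mentioned earlier.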

Feeding it to Your Model

Now that you’ve got your samples ready, you’ll have to give them to the model for fine-tuning. This will involve you feeding the examples to the model via its API and waiting until the process has finished.
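What this looks like in code depends entirely on the provider. As one hedged sketch, the function below assumes a `client` object that exposes the same methods as version 1.x of the OpenAI Python SDK (`files.create`, `fine_tuning.jobs.create`, and `fine_tuning.jobs.retrieve`) and the job status strings that API reports. The function name `run_fine_tune` and the polling interval are our own choices, and other providers will differ.

```python
import time


def run_fine_tune(client, training_path, base_model, poll_seconds=30):
    """Upload a training file, start a fine-tuning job, and wait for it to end.

    `client` is assumed to behave like an OpenAI SDK (v1.x) client; this is
    an illustration of the upload / start / wait pattern, not the only way.
    """
    # Step 1: upload the formatted examples.
    with open(training_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")

    # Step 2: start the job against the chosen base model.
    job = client.fine_tuning.jobs.create(
        training_file=uploaded.id,
        model=base_model,
    )

    # Step 3: poll until the job reaches a terminal state.
    while job.status not in ("succeeded", "failed", "cancelled"):
        time.sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job.id)
    return job
```

With the real SDK installed, you would create the client with `OpenAI()` and pass it in along with the path to your training file and a base model name that supports fine-tuning. Taking the client as a parameter also makes it easy to swap in a stub while you test the rest of your pipeline.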

What is the Difference Between Fine-Tuning and a Pretrained Model?

A pretrained model is one that has been previously trained on a particular dataset or task, and fine-tuning is getting that model to do well on a new task by showing it examples of the output you want to see.

Pretrained models like ChatGPT are often pretty good out of the box, but if you want one to create legal contracts or work with highly specialized scientific vocabulary, you’ll likely need to fine-tune it.

Should You Fine-Tune a Pretrained Model For Your Business?

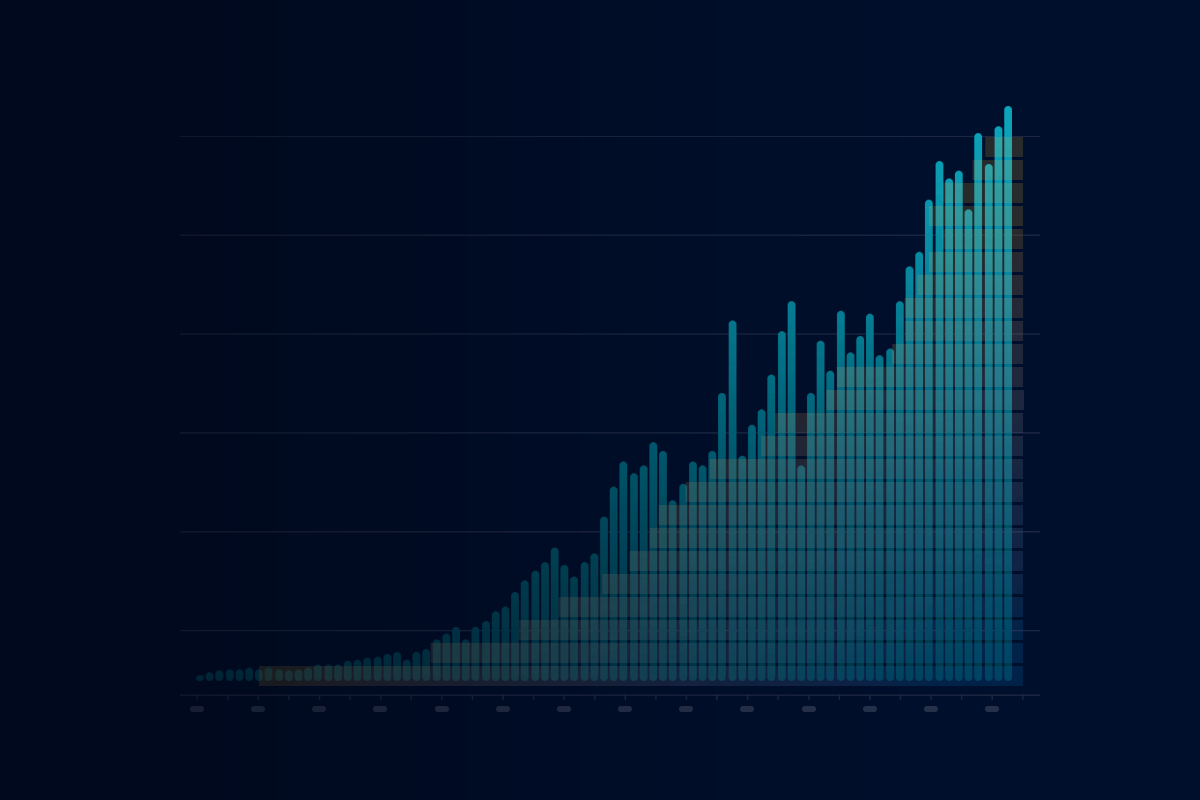

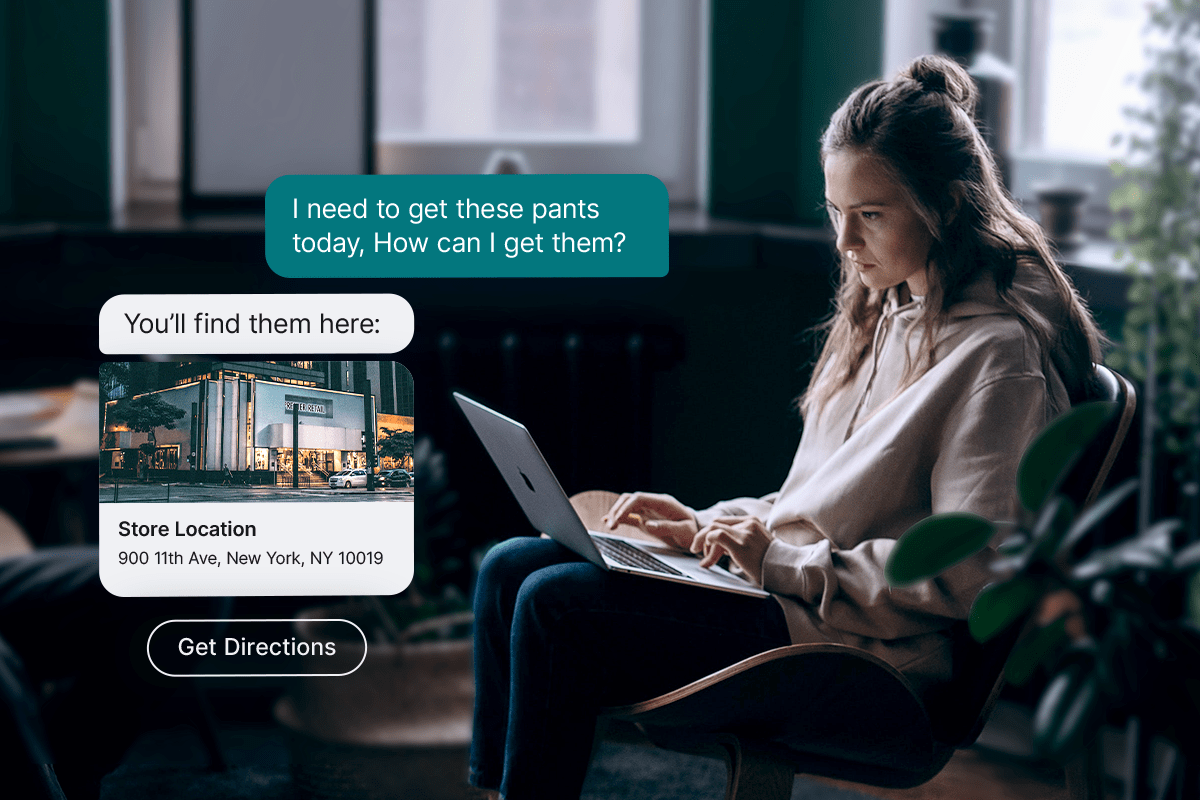

Generative pretrained language models like ChatGPT and Bard have already begun to change the way businesses like contact centers function, and we think this is a trend that is likely to accelerate in the years ahead.

If you’ve been intrigued by the possibility of fine-tuning a pretrained model to supercharge your enterprise, then hopefully the information contained in this article gives you some ideas on how to begin.

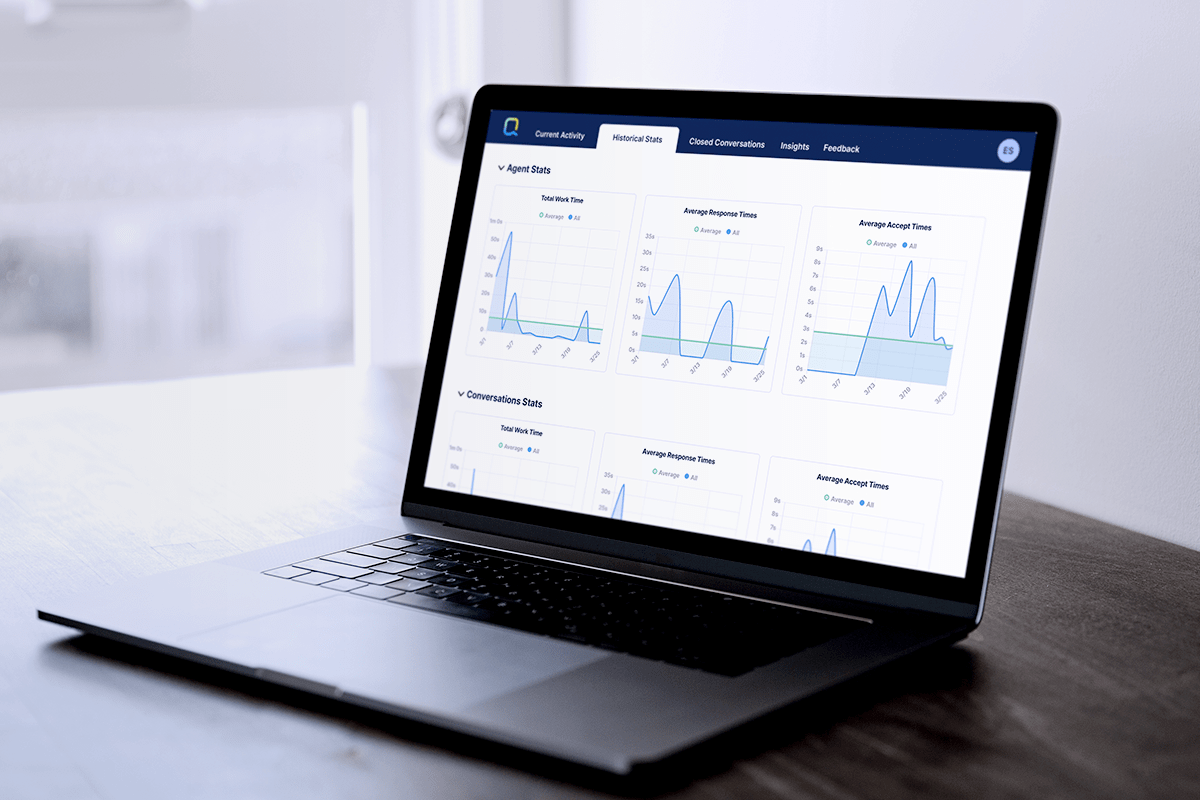

Another option is to leverage the power of the Quiq platform. We’ve built a best-in-class conversational AI system that can automate substantial parts of your customer interactions (without you needing to run your own models or set up a fine-tuning pipeline).

To see how we can help, schedule a demo with us today!
