A lot has changed since large language models broke into the mainstream a little over a year ago. Incredibly, there are already many attempts at extending the functionality of the underlying technology.

One broad category of these attempts is known as “tool use”, and consists of augmenting language models by giving them access to things like calculators. Stories of these models failing at simple arithmetic abound, and the basic idea is that we can begin to shore up their weaknesses by connecting them to specific external resources.

Because these models are famously prone to “hallucinating” incorrect information, the technique of retrieval augmented generation (RAG) has been developed to ground model output more effectively. So far, this has shown promise as a way of reducing hallucinations and creating much more trustworthy replies to queries.

In this piece, we’re going to discuss what retrieval augmented generation is, how it works, and how it can make your models even more robust.

Understanding Retrieval Augmented Generation

To begin, let’s get clear on exactly what we’re talking about. The next few sections will give an overview of retrieval augmented generation, break down how it works, and briefly cover its benefits.

What is Retrieval Augmented Generation?

Retrieval augmented generation refers to a large and growing cluster of techniques meant to help large language models ground their output in facts obtained from an external source.

By now, you’re probably aware that language models can do a remarkably good job of generating everything from code to poetry. But, owing to the way they’re trained and the way they operate, they’re also prone to simply fabricating confident-sounding nonsense. If you ask for a bunch of papers about the connection between a supplement and mental performance, for example, you might get a mix of real papers and ones that are completely fictitious.

If you could somehow hook the model up to a database of papers, however, then perhaps that would ameliorate this tendency. That’s where RAG comes in.

We will discuss some specifics in the next section, but in the broadest possible terms, you can think of RAG as having two components: the generative model, and a retrieval system that allows it to augment its outputs with data obtained from an authoritative external source.

The difference between using a foundation model and using a foundation model with RAG has been likened to the difference between taking a closed-book and an open-book test, and the metaphor is an apt one. If you were to poll all your friends about their knowledge of photosynthesis, you’d probably get a pretty big range of replies. Some friends would remember a lot about the process from high school biology, while others would barely even know that it’s related to plants.

Now, imagine what would happen if you gave these same friends a botany textbook and asked them to cite their sources. You’d still get a range of replies, of course, but they’d be far more comprehensive, grounded, and replete with up-to-date details. [1]

How RAG Works

Now that we’ve discussed what RAG is, let’s talk about how it functions. Though there are many subtleties involved, there are only a handful of overall steps.

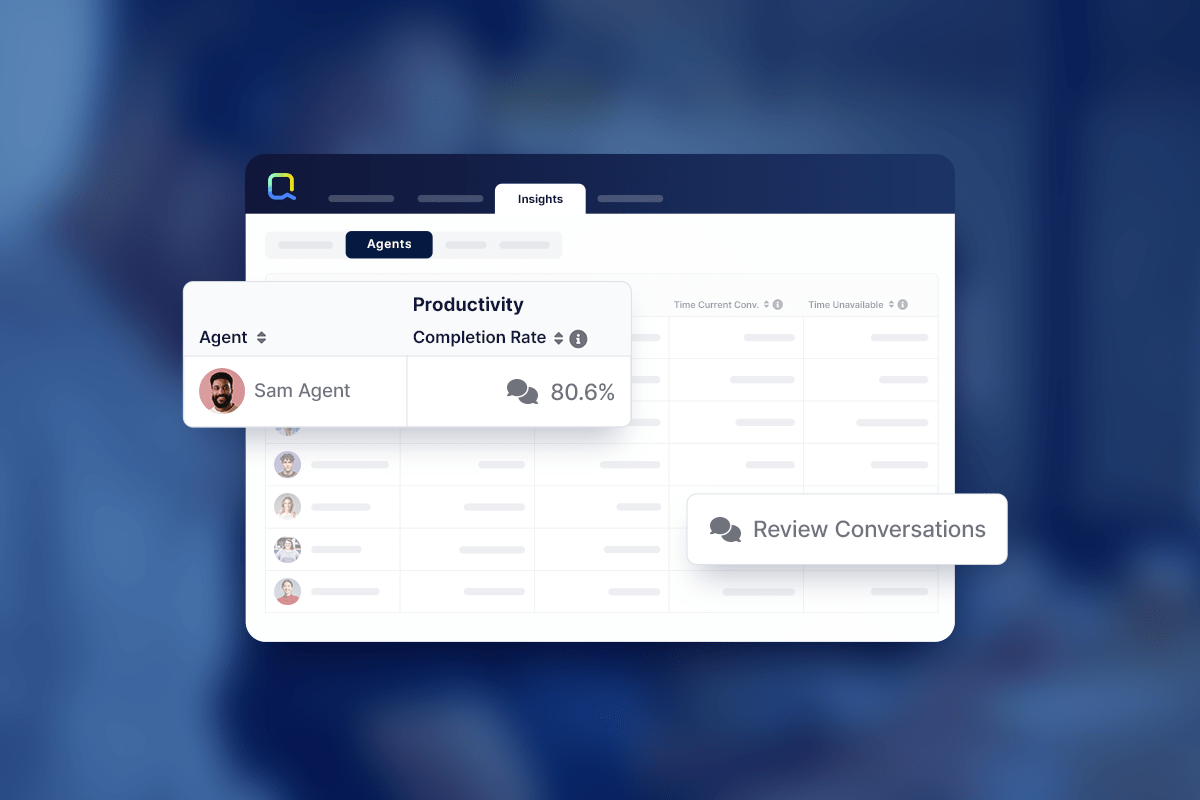

First, you have to create a source of external data or utilize an existing one. There are already many such external resources, including databases filled with scientific papers, genomics data, time series data on the movements of stock prices, etc., which are often accessible via an API. If there isn’t already a repository containing the information you’ll need, you’ll have to make one. It’s also common to hook generative models up to internal technical documentation, of the kind utilized by e.g. contact center agents.

Then, you’ll have to run a relevancy search. This involves converting queries into vectors, or numerical representations that capture important semantic information, then matching that representation against the vectorized contents of the external data source. Don’t worry too much if this doesn’t make a lot of sense; the important thing to remember is that this technique is far better than basic keyword matching at turning up documents related to a query.
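To make the relevancy search a bit more concrete, here is a minimal sketch in Python. It assumes a toy `embed` function that just counts words, which is a stand-in we made up so the example runs with no dependencies. A real system would swap in a learned embedding model, and that is what captures the semantic information described above. The names `embed`, `cosine_similarity`, and `retrieve` are our own illustrative choices, not part of any particular library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector.

    Assumption: a production system would call a learned embedding model here.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents ranked by similarity to the query."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical mini "external data source" for illustration only.
documents = [
    "Photosynthesis converts light energy into chemical energy in plants.",
    "Stock prices moved sharply after the earnings report.",
    "Chlorophyll absorbs light during photosynthesis.",
]
results = retrieve("How does photosynthesis use light?", documents)
for doc in results:
    print(doc)
```

The ranking step (embed the query, score it against every stored vector, keep the best matches) is the same whichever embedding you plug in. Larger systems typically hand that step to a vector database rather than sorting every document on each query.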

With that done, you’ll have to augment the original user query with whatever data came up during the relevancy search. In the systems we’ve seen, this all occurs silently, behind the scenes, and the user is unaware that any such changes have been made. With the additional context, though, the output generated by the model will likely be much more grounded and sensible. Modern RAG systems are also sometimes built to include citations to the specific documents they drew from, allowing a user to fact-check the output for accuracy.
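As a rough illustration of the augmentation step, the sketch below stitches retrieved passages and the user’s question into a single prompt. The function name `build_augmented_prompt` and the exact instruction wording are assumptions on our part. Real systems vary widely in how they phrase and structure this, and the resulting string would then be sent to whatever generative model you’re using.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt.

    Passages are numbered so the model can cite them, e.g. "[1]".
    """
    numbered = "\n".join(
        f"[{i}] {passage}" for i, passage in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by their bracketed number.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical passages, standing in for the output of a relevancy search.
prompt = build_augmented_prompt(
    "How does photosynthesis use light?",
    [
        "Chlorophyll absorbs light during photosynthesis.",
        "Photosynthesis converts light energy into chemical energy in plants.",
    ],
)
print(prompt)
```

Numbering the sources is one simple way to support the citations mentioned above, since the model can refer back to a passage by its bracketed number.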

And finally, you’ll need to think continuously about whether the external data source you’ve tied your model to needs to be updated. It doesn’t do much good to ground a model’s reply if the information it’s using is stale and inaccurate, so this step is important.
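One simple way to keep an eye on freshness is to record when each document was last updated and flag anything older than a chosen threshold. The sketch below assumes you track a timestamp per document. The `find_stale_documents` helper, the document IDs, and the 30-day cutoff are all hypothetical choices for illustration, and the right policy will depend on how quickly your data changes.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def find_stale_documents(
    last_updated: dict[str, datetime],
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> list[str]:
    """Return the IDs of documents whose last update is older than max_age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return sorted(
        doc_id
        for doc_id, updated_at in last_updated.items()
        if now - updated_at > max_age
    )


# Hypothetical update log; "now" is fixed so the example is reproducible.
now = datetime(2024, 1, 31, tzinfo=timezone.utc)
last_updated = {
    "faq": datetime(2024, 1, 30, tzinfo=timezone.utc),
    "returns-policy": datetime(2023, 10, 1, tzinfo=timezone.utc),
}
stale = find_stale_documents(last_updated, timedelta(days=30), now=now)
print(stale)  # ['returns-policy']
```

Anything flagged this way is a candidate for review, re-ingestion, or removal before the model grounds another reply in it.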

The Benefits of RAG

Language models equipped with retrieval augmented generation have many advantages over their more fanciful, non-RAG counterparts. As we’ve alluded to throughout, such RAG models tend to be far more accurate. RAG, of course, doesn’t guarantee that a model’s output will be correct. A RAG model can still hallucinate, just as one of your friends reading a botany book might misunderstand or misquote a passage. Still, RAG makes hallucinations far less prevalent and, if the model adds citations, gives you what you need to rectify any errors.

For this same reason, RAG-powered language models are easier to trust, and they’re (usually) easier to use. As we said above, a lot of the tricky technical detail is hidden from the end user, so all they see is a better-grounded output complete with a list of documents they can use to check that the output they’ve gotten is right.

Applications of Retrieval Augmented Generation

We’ve said a lot about how awesome RAG is, but what are some of its primary use cases? That will be our focus here, over the next few sections.

Enhancing Question Answering Systems

Perhaps the most obvious way RAG could be used is to supercharge the function of question-answering systems. This is already a very strong use case of generative AI, as attested to by the fact that many people are turning to tools like ChatGPT instead of Google when they want to take a first stab at understanding a new subject.

With RAG, they can get more precise and contextually relevant answers, enabling them to overcome hurdles and progress more quickly.

Of course, this dynamic will also play out in contact centers, which are increasingly leaning on question-answering systems either to make their agents more effective or to give customers the resources they need to solve their own problems.

Chatbots and Conversational Agents

Chatbots are another technology that could be substantially upgraded through RAG. Because this is so closely related to the previous section we’ll keep our comments brief; suffice it to say, a chatbot able to ground its replies in internal documentation or a good external database will be much better than one that can’t.

Revolutionizing Content Creation

Because generative models are so, well, generative, they’ve already become staples in the workflows of many creative sorts, such as writers and marketers. A writer might use a generative model to outline a piece, paraphrase their own earlier work, or take the other side of a contentious issue.

This, too, is a place where RAG shines. Whether you’re tinkering with the structure of a new article or trying to build a full-fledged research assistant to master an arcane part of computer science, it can only help to have more factual, grounded output.

Recommendation Systems

Finally, recommendation systems could see a boost from RAG. As you can probably tell from their name, recommendation systems are machine-learning tools that find patterns in a set of preferences and use them to make new recommendations that fit that pattern.

With grounding through RAG, this could become even better. Imagine not only having recommendations, but also specific details about why a particular recommendation was made, to say nothing of recommendations that are tied to a vast set of external resources.

Conclusion

For all the change we’ve already seen from generative AI, RAG has yet more potential to transform our interaction with AI. With retrieval augmented generation, we could see substantial upgrades in the way we access information and use it to create new things.

If you’re intrigued by the promise of generative AI and the ways in which it could supercharge your contact center, set up a demo of the Quiq platform today!

Footnotes

[1] This assumes that the book you’re giving them is itself up-to-date, and the same is true with RAG. A generative model is only as good as its data.