The term “artificial intelligence” was coined at the famous Dartmouth Conference in 1956, put on by luminaries like John McCarthy, Marvin Minsky, and Claude Shannon, among others.

These organizers wanted to create machines that “use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.” They went on to claim that “…a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.”

Half a century later, it’s fair to say that this has not come to pass; brilliant as they were, it would seem as though McCarthy et al. underestimated how difficult it would be to scale the heights of the human intellect.

Nevertheless, remarkable advances have been made over the past decade, so much so that they’ve ignited a firestorm of controversy around this technology. People are questioning the ways in which it can be used negatively, and whether it might ultimately pose an extinction risk to humanity; they’re probing fundamental issues around whether machines can be conscious, exercise free will, and think in the way a living organism does; they’re rethinking the basis of intelligence, concept formation, and what it means to be human.

These are deep waters to be sure, and we’re not going to swim them all today. But as contact center managers and others begin the process of thinking about using AI, it’s worth being at least aware of what this broader conversation is about. It will likely come up in meetings, in the press, or in Slack channels in exchanges between employees.

And that’s the subject of our piece today. We’re going to start by asking what artificial intelligence is and how it’s being used, before turning to address some of the concerns about its long-term potential. Our goal is not to answer all these concerns, but to make you aware of what people are thinking and saying.

What is Artificial Intelligence?

Artificial intelligence is famous for having had many, many definitions. There are those, for example, who believe that in order to be intelligent computers must think like humans, and those who reply that we didn’t make airplanes by designing them to fly like birds.

For our part, we prefer to sidestep the question somewhat by utilizing the approach taken in one of the leading textbooks in the field, Stuart Russell and Peter Norvig’s “Artificial Intelligence: A Modern Approach”.

They propose a multi-part system for thinking about different approaches to AI. One set of approaches is human-centric and focuses on designing machines that either think like humans – i.e., engage in analogous cognitive and perceptual processes – or act like humans – i.e. by behaving in a way that’s indistinguishable from a human, regardless of what’s happening under the hood (think: the Turing Test).

The other set of approaches is ideal-centric and focuses on designing machines that either think in a totally rational way – conformant with the rules of Bayesian epistemology, for example – or behave in a totally rational way – utilizing logic and probability, but also acting instinctively to remove itself from danger, without going through any lengthy calculations.

What we have here, in other words, is a framework. Using the framework not only gives us a way to think about almost every AI project in existence, it also saves us from needing to spend all weekend coming up with a clever new definition of AI.

Joking aside, we think this is a productive lens through which to view the whole debate, and we offer it here for your information.

What is Artificial Intelligence Good For?

Given all the hype around ChatGPT, this might seem like a quaint question. But not that long ago, many people were asking it in earnest. The basic insights upon which large language models like ChatGPT are built go back to the 1960s, but it wasn’t until 1) vast quantities of data became available, and 2) compute cycles became extremely cheap that much of its potential was realized.

Today, large language models are changing (or poised to change) many different fields. Our audience is focused on contact centers, so that’s what we’ll focus on as well.

There are a number of ways that generative AI is changing contact centers. Because of its remarkable abilities with natural language, it’s able to dramatically speed up agents in their work by answering questions and formatting replies. These same abilities allow it to handle other important tasks, like summarizing articles and documentation and parsing the sentiment in customer messages to enable semi-automated prioritization of their requests.

Though we’re still in the early days, the evidence so far suggests that large language models like Quiq’s conversational CX platform will do a lot to increase the efficiency of contact center agents.

Will AI be Dangerous?

One thing that’s burst into public imagination recently has been the debate around the risks of artificial intelligence, which fall into two broad categories.

The first category is what we’ll call “social and political risks”. These are the risks that large language models will make it dramatically easier to manufacture propaganda at scale, and perhaps tailor it to specific audiences or even individuals. When combined with the astonishing progress in deepfakes, it’s not hard to see how there could be real issues in the future. Most people (including us) are poorly equipped to figure out when a video is fake, and if the underlying technology gets much better, there may come a day when it’s simply not possible to tell.

Political operatives are already quite skilled at cherry-picking quotes and stitching together soundbites into a damning portrait of a candidate – imagine what’ll be possible when they don’t even need to bother.

But the bigger (and more speculative) danger is around really advanced artificial intelligence. Because this case is harder to understand, it’s what we’ll spend the rest of this section on.

Artificial Superintelligence and Existential Risk

As we understand it, the basic case for existential risk from artificial intelligence goes something like this:

“Someday soon, humanity will build or grow an artificial general intelligence (AGI). It’s going to want things, which means that it’ll be steering the world in the direction of achieving its ambitions. Because it’s smart, it’ll do this quite well, and because it’s a very alien sort of mind, it’ll be making moves that are hard for us to predict or understand. Unless we solve some major technological problems around how to design reward structures and goal architectures in advanced agentive systems, what it wants will almost certainly conflict in subtle ways with what we want. If all this happens, we’ll find ourselves in conflict with an opponent unlike any we’ve faced in the history of our species, and it’s not at all clear we’ll prevail.”

This is heady stuff, so let’s unpack it bit by bit. The opening sentence, “…humanity will build or grow an artificial general intelligence”, was chosen carefully. If you understand how LLMs and deep learning systems are trained, the process is more akin to growing an enormous structure than it is to building one.

This has a few implications. First, their internal workings remain almost completely inscrutable. Though researchers in fields like mechanistic interpretability are going a long way toward unpacking how neural networks function, the truth is, we’ve still got a long way to go.

What this means is that we’ve built one of the most powerful artifacts in the history of Earth, and no one is really sure how it works.

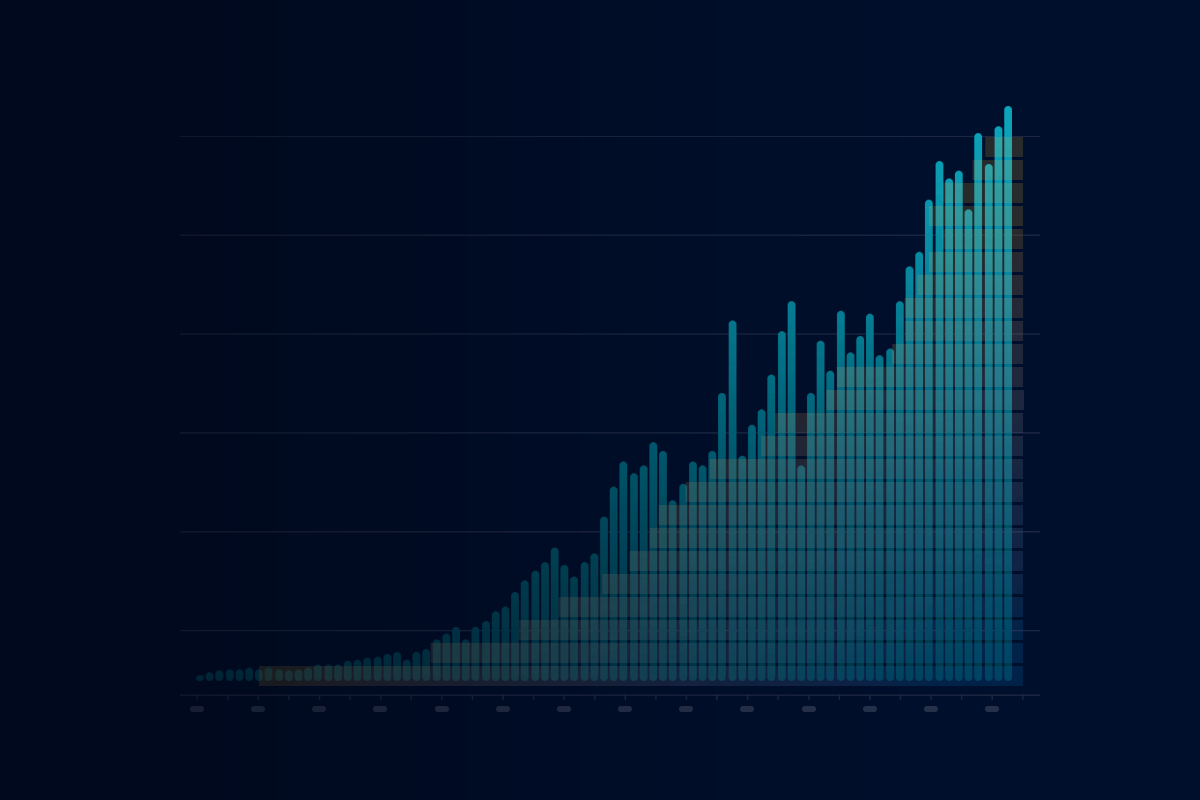

Another implication is that no one has any good theoretical or empirical reason to bound the capabilities and behavior of future systems. The leap from GPT-2 to GPT-3.5 was astonishing, as was the leap from GPT-3.5 to GPT-4. The basic approach so far has been to throw more data and more compute at the training algorithms; it’s possible that this paradigm will begin to level off soon, but it’s also possible that it won’t. If the gap between GPT-4 and GPT-5 is as big as the gap between GPT-3 and GPT-4, and if the gap between GPT-6 and GPT-5 is just as big, it’s not hard to see that the consequences could be staggering.

As things stand, it’s anyone’s guess how this will play out. But that’s not necessarily a comforting thought.

Next, let’s talk about pointing a system at a task. Does ChatGPT want anything? The short answer is: as far as we can tell, it doesn’t. ChatGPT isn’t an agent, in the sense that it’s trying to achieve something in the world, but work into agentive systems is ongoing. Remember that 10 years ago most neural networks were basically toys, and today we have ChatGPT. If breakthroughs in agency follow a similar pace (and they very well may not), then we could have systems able to pursue open-ended courses of action in the real world in relatively short order.

Another sobering possibility is that this capacity will simply emerge from the training of huge deep learning systems. This is, after all, the way human agency emerged in the first place. Through the relentless grind of natural selection, our ancestors went from chipping flint arrowheads to industrialization, quantum computing, and synthetic biology.

To be clear, this is far from a foregone conclusion, as the algorithms used to train large language models is quite different from natural selection. Still, we want to relay this line of argumentation, because it comes up a lot in these discussions.

Finally, we’ll address one more important claim, “…what it wants will almost certainly conflict in subtle ways with what we want.” Why think this is true? Aren’t these systems that we design and, if so, can’t we just tell it what we want it to go after?

Unfortunately, it’s not so simple. Whether you’re talking about reinforcement learning or something more exotic like evolutionary programming, the simple fact is that our algorithms often find remarkable mechanisms by which to maximize their reward in ways we didn’t intend.

There are thousands of examples of this (ask any reinforcement-learning engineer you know), but a famous one comes from the classic Coast Runners video game. The engineers who built the system tried to set up the algorithm’s rewards so that it would try to race a boat as well as it could. What it actually did, however, was maximize its reward by spinning in a circle to hit a set of green blocks over and over again.

Now, this may seem almost silly – do we really have anything to fear from an algorithm too stupid to understand the concept of a “race”?

But this would be missing the thrust of the argument. If you had access to a superintelligent AI and asked it to maximize human happiness, what happened next would depend almost entirely on what it understood “happiness” to mean.

If it were properly designed, it would work in tandem with us to usher in a utopia. But if it understood it to mean “maximize the number of smiles”, it would be incentivized to start paying people to get plastic surgery to fix their faces into permanent smiles (or something similarly unintuitive).

Does AI Pose an Existential Risk?

Above, we’ve briefly outlined the case that sufficiently advanced AI could pose a serious risk to humanity by being powerful, unpredictable, and prone to pursuing goals that weren’t-quite-what-we-meant.

So, does this hold water? Honestly, it’s too early to tell. The argument has hundreds of moving parts, some well-established and others much more speculative. Our purpose here isn’t to come down on one side of this debate or the other, but to let you know (in broad strokes) what people are saying.

At any rate, we are confident that the current version of ChatGPT doesn’t pose any existential risks. On the contrary, it could end up being one of the greatest advancements in productivity ever seen in contact centers. And that’s what we’d like to discuss in the next section.

Will AI Take All the Jobs?

The concern that someday a new technology will render human labor obsolete is hardly new. It was heard when mechanized weaving machines were created, when computers emerged, when the internet emerged, and when ChatGPT came onto the scene.

We’re not economists and we’re not qualified to take a definitive stand, but we do have some early evidence that is showing that large language models are not only not resulting in layoffs, they’re making agents much more productive.

Erik Brynjolfsson, Danielle Li, and Lindsey R. Raymond, three MIT economists, looked at the ways in which generative AI was being used in a large contact center. They found that it was actually doing a good job of internalizing the ways in which senior agents were doing their jobs, which allowed more junior agents to climb the learning curve more quickly and perform at a much higher level. This had the knock-on effect of making them feel less stressed about their work, thus reducing turnover.

Now, this doesn’t rule out the possibility that GPT-10 will be the big job killer. But so far, large language models are shaping up to be like every prior technological advance, i.e., increasing employment rather than reducing it.

What is the Future of AI?

The rise of AI is raising stock valuations, raising deep philosophical questions, and raising expectations and fears about the future. We don’t know for sure how all this will play out, but we do know contact centers, and we know that they stand to benefit greatly from the current iteration of large language models.

These tools are helping agents answer more queries per hour, do so more thoroughly, and make for a better customer experience in the process.

If you want to get in on the action, set up a demo of our technology today.