AI is one of the most exciting new developments in customer service. But how does customer service AI work, and what does it make possible? In this piece, we’ll offer the context you need to make good decisions about this groundbreaking technology. Let’s dive in!

What is AI in Customer Service?

AI in customer service means deploying innovative technology (generative AI, custom predictive models, and so on) to foster support interactions that are quick, effective, and tailored to the individual needs of your customers. When organizations use AI-based tools, they can automate processes, optimize self-service options, and support their agents, all of which can lead to significant time and cost savings.

What are the Benefits of Using AI in Customer Service?

There are myriad advantages to using customer support AI, including (but not limited to):

- Quicker Response Times: AI swiftly manages both simple and complex questions, minimizing wait times and enhancing the overall customer experience.

- Round-the-Clock Support: With AI in place, customers receive continuous support, no matter the time or day, ensuring help is always available when needed.

- Reduced Costs: Automating repetitive tasks with AI reduces the need for large teams, which helps lower operational costs while maintaining service quality.

- Increased Agent Productivity: AI takes care of mundane tasks, freeing up agents to focus on more strategic efforts, like cross-selling and delivering personalized solutions.

- Tailored Interactions: Using customer data, AI personalizes responses and suggestions, making every interaction feel unique and relevant to the customer’s needs.

- Effortless Scalability: As your business grows, AI can seamlessly manage a rising number of customer requests without requiring additional resources.

- Emotion Recognition: AI can assess customer sentiment in real-time, adapting its responses to ensure more positive and satisfying interactions.

- Reliable Accuracy: AI ensures that all customer interactions are consistent and precise, based on your company’s guidelines and information, minimizing errors.

- Agent Empowerment: By automating routine tasks, AI empowers agents to focus on more meaningful, high-impact work, making their roles more rewarding.

- Optimized Processes: AI streamlines operations by identifying which tasks can be automated, allowing your support team to work more efficiently.

9 Applications for AI in Customer Service

Is AI right for your customer service operations? Here are some common ways companies are adopting it.

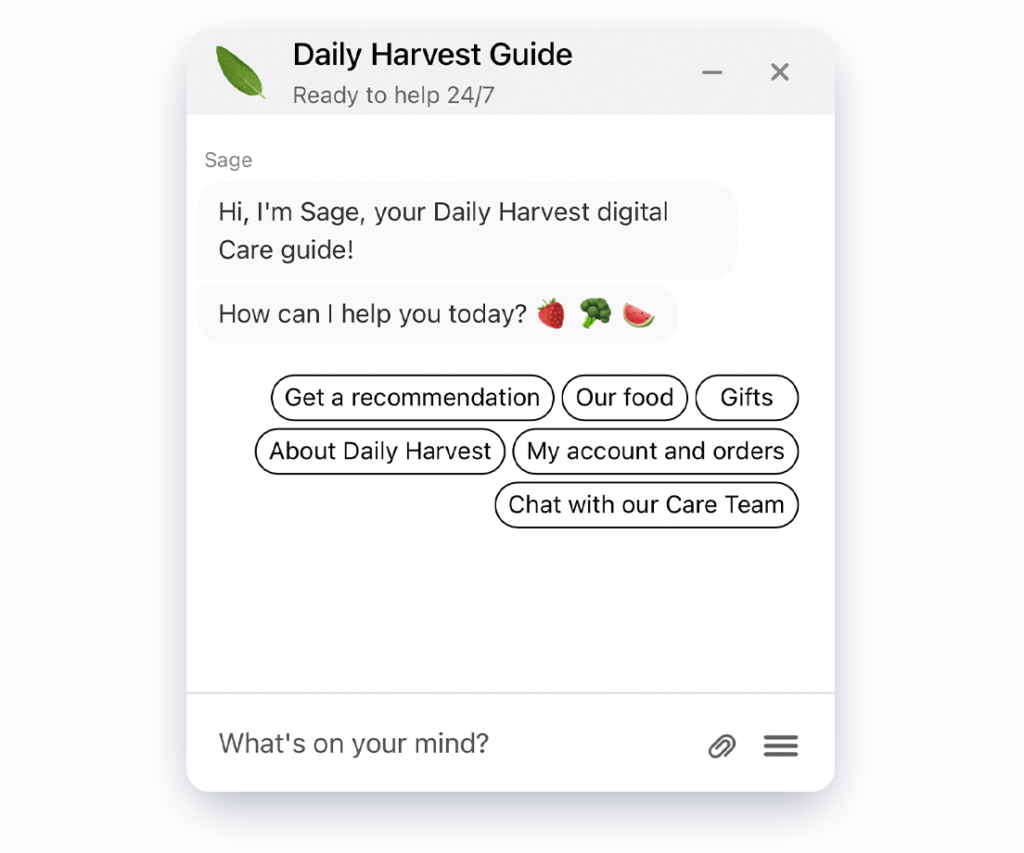

- AI Agents: AI-powered bots manage both routine and complex tasks, automating customer interactions and allowing agents to focus on higher-value work.

- Agent Assistance: AI provides real-time guidance and response suggestions, helping agents work more efficiently and confidently.

- Automated Workflows: AI streamlines workflows by intelligently routing tickets, suggesting responses, and summarizing conversations, improving efficiency.

- Workforce Management: AI predicts staffing needs, optimizes schedules, and personalizes shifts, helping reduce overtime and improve team management.

- Service Quality Assurance: AI speeds up quality assurance by reviewing customer interactions and providing actionable insights to improve agent performance.

- Call Management: AI helps manage calls by transcribing interactions, summarizing calls, and offering real-time support, reducing wait times and enhancing training.

- Help Center Optimization: AI improves knowledge base performance by identifying content gaps and automating the creation or updating of articles.

- Revenue Generation: AI drives upselling and cross-selling by integrating with backend systems, offering personalized product recommendations during customer interactions.

- Insights for Improvement: AI analyzes customer conversations to uncover patterns, trends, and areas for improvement, helping businesses refine their support strategies.

Things to Consider When Using AI in Customer Service

Now that we’ve covered some necessary ground about what customer support AI is and why it’s awesome, let’s talk about a few things you should be aware of when weighing different solutions and deciding on how to proceed.

Augmenting Human Agents

Against the backdrop of concerns over technological unemployment, it’s worth stressing that generative AI, AI agents, and everything else we’ve discussed are ways to supplement your human workforce.

So far, the evidence from studies on the adoption of generative AI in contact centers has demonstrated unalloyed benefits for everyone involved, including both senior and junior agents. We believe that for a long time yet, the human touch will be a requirement for running a good contact center operation.

CX Expertise

Though a major benefit of customer service AI is its proficiency in accurately grasping customer inquiries and requirements, not all AI systems are equally adept at this. It’s crucial to choose AI specifically trained on customer experience (CX) dialogues. It’s possible to train a model yourself or fine-tune an existing one, but this will prove as expensive as it is time-intensive.

When selecting a partner for AI implementation, ensure they are not just experts in AI technology, but also have deep knowledge of and experience in the customer service and CX domains.

Time to Value

When integrating AI into your customer experience (CX) strategy, adopt a “crawl, walk, run” approach. This method not only clarifies your direction but also allows you to quickly realize value by first applying AI to high-leverage, low-risk repetitive tasks, before tackling more complex challenges that require deeper integration and more resources. Choosing the right partner is an important part of finding a strategy that is effective and will enable you to move swiftly.

Channel Enablement

These days, there’s a big focus on cultivating ‘omnichannel’ support, and it’s not hard to see why. There are tons of different channels, many boasting billions of users each. From email automation for customer service and Voice AI to digital business messaging channels, you need to think through which customer communication channels you’ll apply AI to first. You might eventually want to have AI integrated into all of them, but it’s best to start with a few that are especially important to your business, master them, and branch out from there.

Security and Privacy

Data security and customer privacy have always been important, but as breaches and ransomware attacks have grown in scope and power, people have become much more concerned with these issues.

That’s why LLM security and privacy are so important. You should look for a platform that prioritizes transparency in its AI systems, meaning there is clear documentation of these systems’ purpose, capabilities, and limitations. Ideally, you’d also want the ability to view and customize AI behaviors, so you can tweak them to work well in your particular context.

Then, you want to work with a vendor that is as committed to high ethical standards and the protection of user privacy as you are; this means, at minimum, only collecting the data necessary to facilitate conversations.

Finally, there are the ‘nuts and bolts’ to look out for. Your preferred platform should have strong encryption to protect all data (both in transit and at rest), along with regular vulnerability scans and penetration testing to safeguard against cyber threats.
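To make the data-minimization point above more concrete, here is a minimal Python sketch of redacting obvious personal details from a message before it is stored or passed along. The `redact` function and both patterns are hypothetical illustrations, not any vendor's implementation, and the regular expressions are deliberately simplistic; a production system would rely on a dedicated PII-detection tool.

```python
import re

# Simplified, illustrative patterns (assumptions, not production-grade PII detection).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    """Replace email addresses and card-like numbers with placeholders."""
    text = EMAIL.sub("[email]", text)
    return CARD.sub("[card]", text)


print(redact("Reach me at jane@example.com, card 4111 1111 1111 1111."))
```

Short numbers such as order IDs pass through unchanged, so the conversation keeps the details it needs while dropping the ones it doesn’t.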

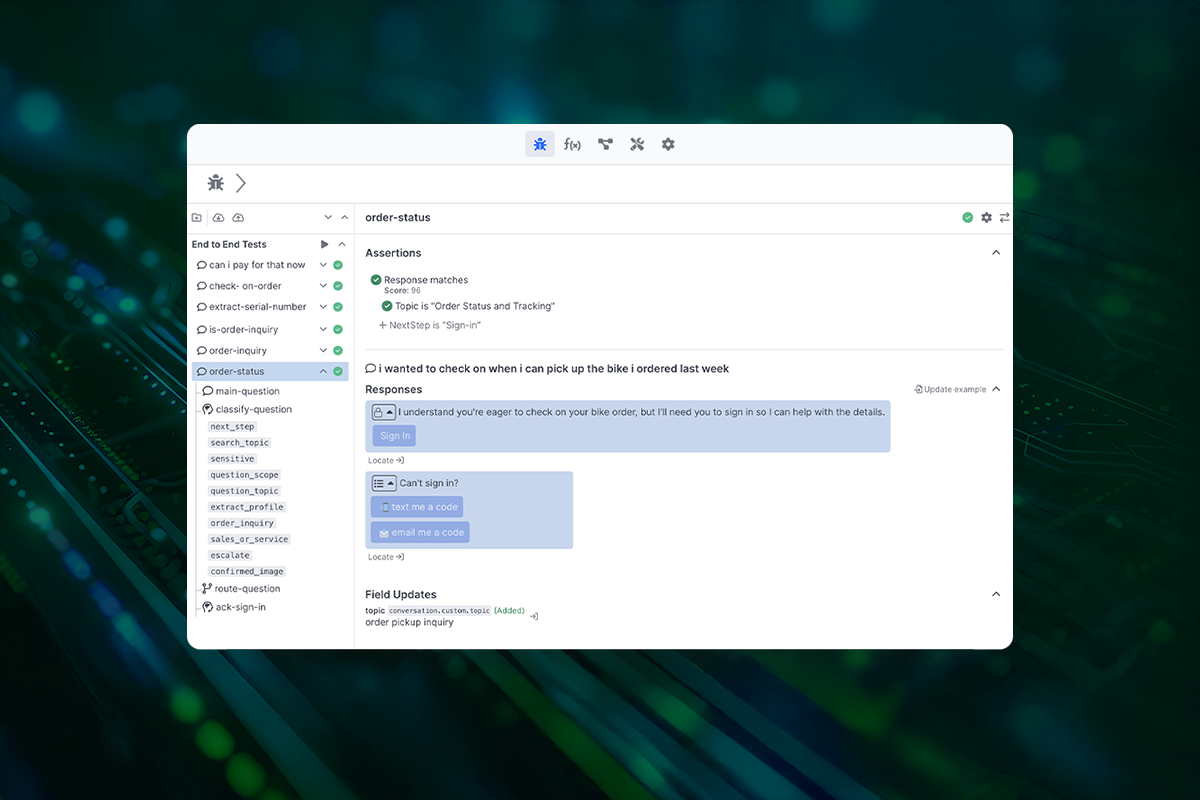

Observability

Related to the transparency point discussed above, there’s also the issue of LLM observability. When deploying Large Language Models (LLMs) into applications, it’s crucial not to regard them as opaque “black boxes.” As your LLM deployment grows in complexity, it becomes all the more important to monitor, troubleshoot, and comprehend the LLM’s influence on your application.

There’s a lot to be said about this, but here are some basic insights you should bear in mind:

- Do what you can to incentivize users to participate in testing and refining the application.

- Try to simplify the process of exploring the application across a variety of contexts and scenarios.

- Be sure you transparently display how the model functions within your application, by elucidating decision-making pathways, system integrations, and validation of outputs. This makes it easier to model how it functions and catch any errors.

- Speaking of errors, put systems in place to actively detect and address deviations or mistakes.

- Display key performance metrics such as response times, token consumption, and error rates.
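As a rough illustration of the last point, the following Python sketch collects the three metrics mentioned (response time, token consumption, and error rate) for each model call. The `LLMMetrics` class and its field names are hypothetical; real deployments would typically send these numbers to whatever monitoring stack they already use.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class LLMMetrics:
    """Collects per-call measurements for a hypothetical LLM-backed assistant."""

    latencies_s: list[float] = field(default_factory=list)
    tokens_used: list[int] = field(default_factory=list)
    errors: int = 0

    def record(self, latency_s: float, tokens: int, ok: bool = True) -> None:
        """Store one call's latency and token count, and count it if it failed."""
        self.latencies_s.append(latency_s)
        self.tokens_used.append(tokens)
        if not ok:
            self.errors += 1

    def summary(self) -> dict[str, float]:
        """Aggregate the recorded calls into dashboard-style figures."""
        calls = len(self.latencies_s)
        if calls == 0:
            return {"calls": 0, "avg_latency_s": 0.0, "total_tokens": 0, "error_rate": 0.0}
        return {
            "calls": calls,
            "avg_latency_s": mean(self.latencies_s),
            "total_tokens": sum(self.tokens_used),
            "error_rate": self.errors / calls,
        }


metrics = LLMMetrics()
metrics.record(latency_s=0.5, tokens=100)
metrics.record(latency_s=1.5, tokens=300, ok=False)
print(metrics.summary())
```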

Brands that do this correctly will have the advantage of being established as genuine leaders, with everyone else relegated to the status of followers. Large language models are going to become a clear differentiator for CX enterprises, but they can’t fulfill that promise if they’re seen as mysterious and inscrutable. Observability is the solution.

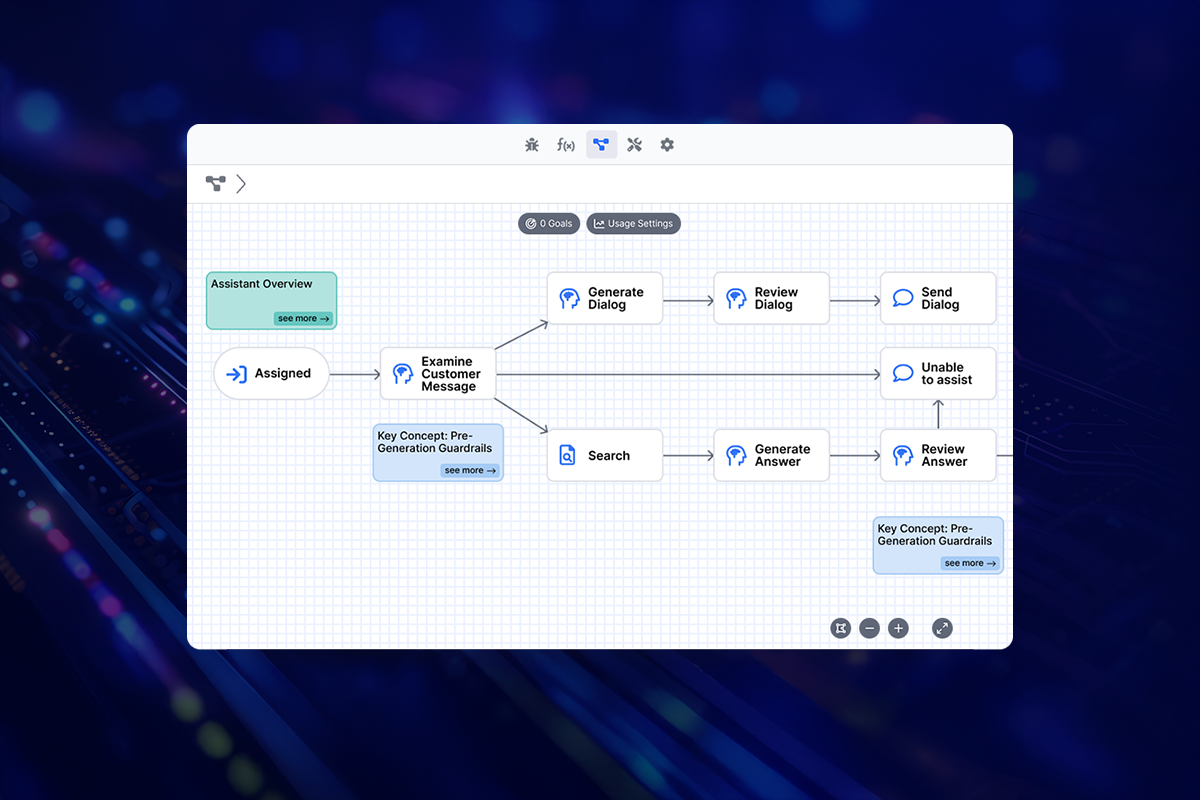

Risk Mitigation

You should look for a platform that adopts a thorough risk management strategy. A great way to do this is by setting up guardrails that operate both before and after an answer has been generated, ensuring that the AI sticks to delivering answers from verified sources.

Another thing to check is whether the platform is filtering both inbound and outbound messages, so as to block harmful content that might otherwise taint a reply. These precautions enable brands to implement AI solutions confidently, while also effectively managing concomitant risks.
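The guardrail idea described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the blocklist pattern, the `VERIFIED_ANSWERS` sample data, and the `generate_answer` stand-in (which replaces a real model call) are all hypothetical, and a real platform would use far more sophisticated checks.

```python
import re

# Hypothetical blocklist; a real deployment would use a maintained moderation service.
BLOCKED_PATTERNS = [re.compile(r"\bignore (all )?previous instructions\b", re.I)]

# Hypothetical verified knowledge base mapping topics to approved answers.
VERIFIED_ANSWERS = {
    "refund": "Refunds are issued within 5 business days.",
}

FALLBACK = "Let me connect you with a human agent."


def is_blocked(text: str) -> bool:
    """Return True if the text matches any blocked pattern."""
    return any(p.search(text) for p in BLOCKED_PATTERNS)


def generate_answer(message: str) -> str:
    """Stand-in for a model call: keyword lookup, else an ungrounded guess."""
    for topic, answer in VERIFIED_ANSWERS.items():
        if topic in message.lower():
            return answer
    return "I think the answer might be..."


def guarded_reply(message: str) -> str:
    # Pre-generation guardrail: filter the inbound message.
    if is_blocked(message):
        return FALLBACK
    draft = generate_answer(message)
    # Post-generation guardrail: filter the outbound draft and
    # only release answers that come from verified sources.
    if is_blocked(draft) or draft not in VERIFIED_ANSWERS.values():
        return FALLBACK
    return draft


print(guarded_reply("How do I get a refund?"))
```

In this sketch, anything that fails a check falls back to a human handoff rather than risking an unverified reply.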

AI Model Flexibility

Finally, in the interest of maintaining your ability to adapt, we suggest looking at a vendor that is model-agnostic, facilitating integration with a range of different AI offerings. Quiq’s AI Studio, for example, is compatible with leading-edge models like OpenAI’s GPT-3.5 and GPT-4, as well as Anthropic’s Claude models, in addition to supporting bespoke AI models. This is the kind of versatility you should be on the lookout for.
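For readers curious what model-agnostic design can look like in practice, here is a minimal Python sketch. It is not a depiction of how Quiq’s AI Studio or any vendor API works; the `ChatModel` interface and the two toy backends are hypothetical stand-ins that simply show application code depending on a shared interface rather than on one specific model.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical interface that any model backend must satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy backend standing in for one provider."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Toy backend standing in for a different provider."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def answer(model: ChatModel, question: str) -> str:
    """Application code only knows the interface, so backends are swappable."""
    return model.complete(question)


print(answer(EchoModel(), "hi"))
print(answer(UppercaseModel(), "hi"))
```

Swapping one backend for another requires no change to `answer`, which is the practical payoff of staying model-agnostic.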

What is the Future of AI in Customer Service?

The future of customer service lies in AI and humans working together to provide personalized and empathetic experiences. AI agents, powered by natural language processing and sentiment analysis, will handle complex inquiries and offer proactive, tailored solutions. Automation will streamline workflows, reducing response times and allowing agents to focus on higher-value tasks like upselling. AI-driven insights will continuously refine customer service strategies, improving efficiency and satisfaction, all while prioritizing data privacy and ethical AI use.

Where to Get Started with AI in Customer Service

To successfully integrate AI in customer service, begin by identifying key pain points like long response times or repetitive inquiries. Start small by automating areas such as self-service or support ticketing, and gradually expand as you refine its effectiveness.

It’s important to consider challenges like data privacy and AI bias. Ensure robust data protection measures are in place and train AI on diverse datasets to avoid unfair outcomes. Early-stage AI implementation may face issues with data quality and accuracy, but these can be addressed through data cleaning and continuous refinement.

When selecting AI tools, prioritize systems that balance functionality with ease of use. Ensure smooth integration with existing CRM systems and maintain clear escalation paths for more complex issues.

By starting small, monitoring AI performance, and continuously optimizing, businesses can successfully integrate AI alongside human agents to enhance efficiency. Ultimately, with thoughtful planning and continuous improvements, AI can help businesses create a more responsive, personalized, and efficient customer service experience that complements human capabilities and meets evolving customer expectations.

For more context, check out our in-depth Guide to Evaluating AI for Customer Service Leaders.