Key Takeaways

- Visibility matters: If users can’t see or know about your live chat, they won’t use it. Promote the chat option via your website, email campaigns, phone hold messages, and other touchpoints.

- Remove friction in access: Make initiating chat as painless as possible. Minimize form fields, allow conversational data collection before routing to agents, and reduce extra steps that discourage use.

- Personalize interactions: Use branding, agent names/pictures, or context from prior interactions to tailor the chat experience. The more it feels human and relevant, the more comfortable customers will be using it.

- Leverage AI & automation smartly: Use AI to automate answers to routine queries, freeing human agents for more complex tasks. At the same time, ensure smooth escalation from AI to humans and maintain continuity.

When customer experience directors float the idea of investing more heavily in live chat for customer service, it’s not uncommon for them to get pushback. One of the biggest motivations for such reticence is uncertainty over whether anyone will actually want to use such support channels—and whether investing in them will ultimately prove worth it.

An additional headwind comes from the fact that many CX directors are laboring under the misapprehension that they need an elaborate plan to push customers into a new channel. However, one thing we consistently hear from our enterprise customers is that it’s surprising how customers naturally start using a new channel when they realize it exists. To borrow a famous phrase from Field of Dreams, “If you build it, they will come.” Or, to paraphrase a bit, “If you build it (and make it easy for them to engage with you), they will come.” You don’t have to create a process that diverts them to the new channel.

What is Live Chat?

Live chat is a real-time messaging tool on a website or app that lets customers quickly communicate with a business. It typically appears as a small chat window and allows users to ask questions, get support, or receive guidance instantly while browsing. Live chat improves customer experience by reducing wait times and offering immediate, personalized help.

Why is Live Chat Important for Contact Centers?

Live chat has a clear impact on customer engagement. When businesses offer real-time messaging, customers are more likely to return, explore confidently, and move toward a purchase because they can get quick answers without waiting on hold.

It’s also a channel customers genuinely prefer. Live chat feels easier and more convenient than phone or email, lets users multitask, and gives them a written record of the conversation—all of which contribute to consistently strong satisfaction. Support teams benefit, too. Handling conversations through chat reduces the emotional strain of frequent phone calls and allows agents to manage more interactions efficiently, which can improve morale and retention.

Overall, live chat stands out as an effective communication channel that supports better customer satisfaction and stronger outcomes for support teams—making it a smart choice for contact centers and customer service today and in the future.

Benefits of Live Chat Support Services

Real-Time Support

When customers need help, they don’t want to wait. Live chat support services provide instant solutions, cutting down resolution times and getting customers the answers they need, fast. And when customers get quick answers, they stick around. Faster replies lead to higher satisfaction, increased trust, and more repeat business. The quicker the response, the better the experience, and that’s a win for both customers and businesses.

Increased Customer Satisfaction

Today’s customers expect immediate support, and live chat support services deliver exactly that. When customers know they can rely on your support team for quick, clear, and helpful answers, they feel confident in your brand. That confidence translates into loyalty, repeat purchases, and positive word-of-mouth—turning a one-time buyer into a long-term customer.

Efficiency

Live chat isn’t just better for customers; it’s a game-changer for support teams too. Unlike phone calls, where agents can only help one person at a time, live chat lets them handle multiple conversations at once. That means fewer bottlenecks, faster resolutions, and better overall efficiency. Plus, fewer phone calls mean lower costs. With live chat, businesses can reduce phone expenses, optimize staffing, and minimize hold times, all without sacrificing customer experience.

Omnichannel Integration

Customers don’t just stick to one channel—they bounce between email, social media, SMS, and your website. Live chat support services integrate seamlessly into this mix, creating a unified experience. Whether a customer starts a conversation on social media and follows up via chat or asks a question through SMS, they get the same consistent service. Even better, integrating chat across channels keeps all customer interactions in one place, so your team has a complete history of past conversations. That means no more repeating issues, fewer dropped interactions, and a smoother customer journey from start to finish.

Live Chat Support Best Practices

Prompt Response Times

Speed matters when it comes to live chat support services. The faster you respond, the more valued customers feel—and that leads to higher satisfaction and loyalty. Nobody likes waiting, especially when they have a quick question standing between them and a purchase. Whether a customer is asking about shipping costs, return policies, or product details, meeting them in the moment with real-time support keeps them engaged.

Professional Communication

Live chat is fast, but that doesn’t mean it should feel rushed. A professional and friendly tone makes all the difference in building trust and keeping conversations productive. Customers want clear, concise, and helpful responses—not robotic scripts or vague answers. Miscommunication can create frustration, so keep things simple, polite, and to the point. Use proper grammar, avoid jargon, and personalize interactions with the customer’s name. A great chat experience feels like talking to a knowledgeable friend—someone who understands the problem and knows exactly how to help. The smoother the conversation, the more confident customers feel about your brand.

24/7 Availability

Customers shop on their own time, whether that’s during a lunch break, late at night, or halfway across the world. Offering live chat support services 24/7 means you’re always there when they need help. This is especially valuable for global businesses, ensuring customers in different time zones get real-time answers instead of waiting for office hours. Plus, round-the-clock availability isn’t just about support—it’s a sales booster too. A shopper with a question at 2 AM might just leave if they can’t get an answer. But if live chat is available? That hesitation disappears, and the sale happens.

6 Tips for Encouraging Customers to Use Live Chat

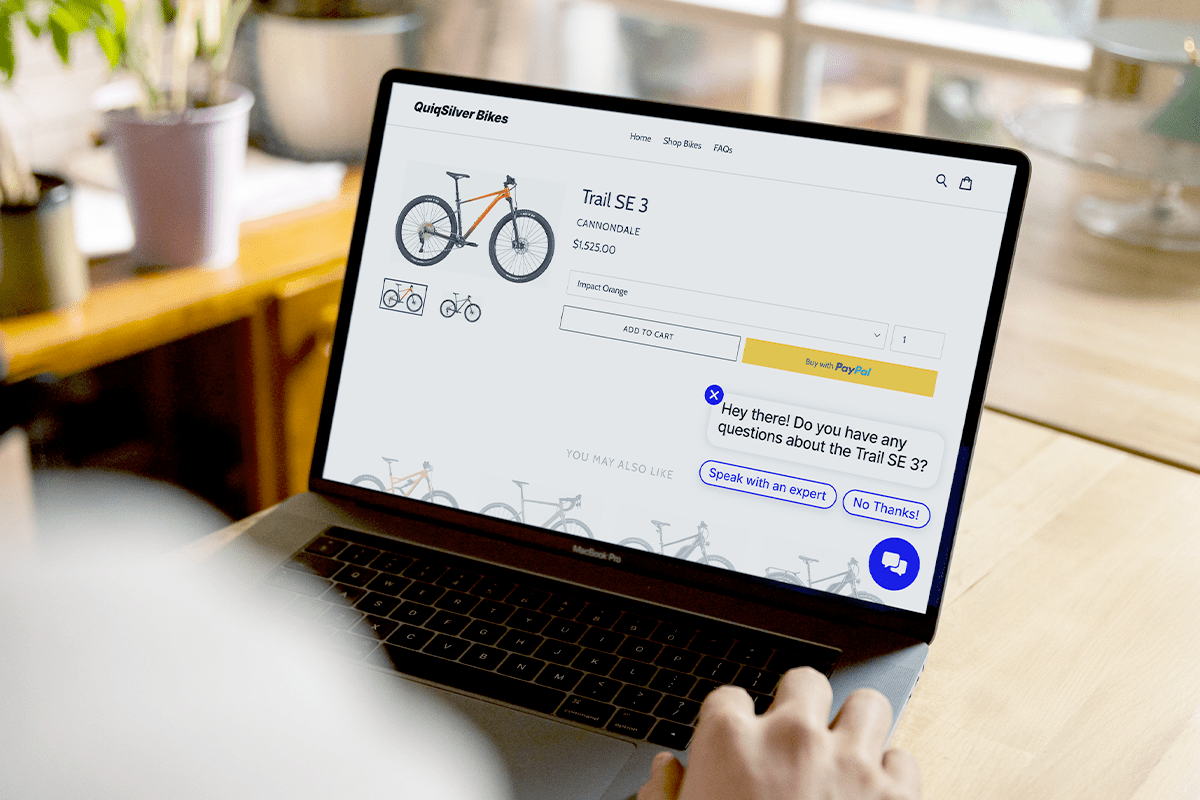

1. Make Sure People Know You Have Live Chat Services

One of the simplest ways to increase live chat adoption is to make it highly visible. Promote it across your usual channels (your support page, social media, order confirmation emails, and other customer touchpoints) so people know it’s available.

You can also shift customers from phone to messaging by mentioning live chat in your IVR or hold messages. Since customers dislike waiting on hold, offering a quick alternative like web chat, SMS, WhatsApp, or Apple Messages can encourage them to switch. A prompt as straightforward as “Press 2 to chat with an agent online or by text” can significantly reduce call volume.

Highlighting live chat benefits everyone. Agents can manage multiple conversations at once, leading to quicker resolutions and higher overall satisfaction. And the more places you link to live chat, such as post-purchase emails, product pages, hero pages, and other high-intent parts of your website, the easier it is for customers to get help in the moment, which can also boost conversions.

2. Minimize the Hassle of Using Live Chat

One of the better ways of boosting engagement with any feature, including live chat, is to make it as pain-free as possible.

Take contact forms, for example, which can speed up time to resolution by organizing all the basic information a service agent needs. This is great when a customer has a complex issue, but if they only have a quick question, filling out even a simple contact form may be onerous enough to prevent them from asking it. Every additional second of searching or fiddling means another lost opportunity.

There’s a bit of a balancing act here, but, in general, the fewer fields a contact form has, the more likely someone is to fill it out.

The emergence of large language models (LLMs) has made it possible to use an AI agent to collect information about customers’ specific orders or requests. When such an agent detects that a request is complex and needs human attention, it can ask for the necessary information to pass along to an agent. This turns the traditional contact form into a conversation, placing it further along in the customer service journey, so only those customers who need to fill it out will have to use it.
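To make the idea concrete, here is a minimal Python sketch of a contact form turned into a conversation. It is only an illustration under stated assumptions: the field names, the keyword list, and the `needs_human` check are all hypothetical, and a real AI agent would rely on a language model rather than keyword matching to judge complexity.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical details a human agent would need before taking over.
REQUIRED_FIELDS = ("name", "order_number", "issue_summary")

# Toy stand-in for the AI agent's judgment; a real system would use a model.
ESCALATION_KEYWORDS = ("refund", "damaged", "complaint")


@dataclass
class Conversation:
    # Details gathered so far, keyed by field name.
    collected: Dict[str, str] = field(default_factory=dict)


def needs_human(message: str) -> bool:
    """Return True when the message looks complex enough for a human agent."""
    text = message.lower()
    return any(keyword in text for keyword in ESCALATION_KEYWORDS)


def next_question(convo: Conversation) -> Optional[str]:
    """Return the next detail to ask for, or None once everything is collected."""
    for name in REQUIRED_FIELDS:
        if name not in convo.collected:
            return f"Could you share your {name.replace('_', ' ')}?"
    return None
```

In this sketch, a customer with a quick question is never asked for anything. Only when `needs_human` returns True does the assistant start calling `next_question`, so the "form" reaches only the customers who actually need an agent.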

3. Personalize Your Chat

Another way to make live chat for customer service more attractive is to personalize your interactions. Personalization can be anything from including an agent’s name and picture in the chat interface displayed on your webpage to leveraging an LLM to craft a whole bespoke context for each conversation.

For our purposes, the two big categories of personalization are brand-specific personalization and customer-specific personalization. Let’s discuss each.

Brand-specific personalization

Marketing and contact teams should collaborate to craft notifications, greetings, etc., to fit their brand’s personality. Chat icons often feature an introductory message such as “How can I help you?” to let browsers know their questions are welcome. This is a place for you to set the tone for the rest of the conversation, and such friendly wording can encourage people to take the next step and type out a message.

More broadly, these departments should also develop a general tone of voice for their service agents. While there may be some scripted language in customer service interactions, most customers expect human support specialists to act like humans. And, since every request or concern is a little different, agents often need to change what they say or how they say it.

Customer-specific personalization

Customer-specific personalization might involve something as simple as using the customer’s name, or it might extend to drawing on their purchase history to include the specifics of the order they’re asking about.

Among the many things that today’s LLMs excel at is personalization. Machine learning has long been used to personalize recommendations, but when LLMs are turbo-charged with a technique like retrieval-augmented generation (which allows them to use validated data sources to inform their replies to questions), the results can be astonishing.

Machine-based personalization and retrieval-augmented generation are both big subjects that are worth exploring in more depth. But the high-level takeaway is that, together, they facilitate the creation of a seamless and highly personalized experience across your communication channels using the latest advances in AI. Customers will feel more comfortable using your live chat feature, and will grow to feel a connection with your brand over time.

4. Include Privacy and Data Usage Messages

By taking privacy seriously, you can distinguish yourself and thereby build trust. Customers visiting your website want an assurance that you will take every precaution with their private information, and this can be provided through easy-to-understand data privacy policies and customizable cookie preferences.

Live messaging tools can cause concerns because they are often powered by third-party software. Customer service messaging can also require a lot of personal information, making some users hesitant to use these tools.

You can quell these concerns by explaining how you handle private customer data. When a message like this appears at the start of a new chat, is always accessible via the header, or persists in your chat menu, customers can see how their data is safeguarded and feel secure while entering personal details.

5. Use Rich Messages

Smartphones have become a central hub for browsing the internet, shopping, socializing, and managing daily activities. As text messaging gradually supplanted most of our other ways of communicating, it became obvious that an upgrade was needed.

This led to the development of rich messaging applications and protocols such as Apple Messages for Business, WhatsApp, and Rich Communication Services (RCS). These channels offer enhancements like buttons, quick replies, and carousel cards, all designed to make interactions easier and faster for the customer.

Using rich messaging in live chat with customers will likely help boost engagement. Customers are accustomed to seeing emojis now, and you can include them as a way of humanizing and personalizing your interactions. There might also be contexts in which customers need to see or even send graphics or images, which is very difficult with the old Short Message Service (SMS).

6. Separate Chat and Agent Availability

Once upon a time, ‘chat availability’ simply meant the same thing as ‘agent availability,’ but today’s language models are rapidly becoming capable enough to resolve a wide variety of issues on their own. In fact, one of the major selling points of AI agents is that they provide round-the-clock service because they don’t need to eat, sleep, or take bathroom breaks.

This doesn’t mean that they can be left totally alone, of course. Humans still need to monitor their interactions to make sure they’re not being rude or hallucinating false information. But this is also something that becomes much easier when you pair with an industry-leading conversational AI for CX platform that has robust safeguards, monitoring tools, and the ability to switch between different underlying models (in case one starts to act up).

Having said that, there is still a wide variety of tasks for which a human agent is the best choice. For this reason, many companies have specific time windows when live chat for customer service is available. When it’s not, some choose to let customers know when live chat is an option by communicating the next availability window.

Employing these two strategies means that your ability to service customers is decoupled from the operational constraints of agent availability, and you are always ready to seize the opportunity to serve customers when they are eager to engage with your brand.
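As a rough illustration of communicating the next availability window, the Python sketch below decides what to tell a customer based on the current time. The staffing hours (Monday to Friday, 09:00 to 17:00) and the message wording are assumptions made up for this example, and it uses naive local datetimes rather than handling time zones.

```python
from datetime import datetime, time, timedelta

# Hypothetical staffing schedule: Monday-Friday (weekday 0-4), 09:00-17:00.
OPEN_DAYS = {0, 1, 2, 3, 4}
OPEN_AT = time(9, 0)
CLOSE_AT = time(17, 0)


def agents_available(now: datetime) -> bool:
    """Return True when `now` falls inside a staffed window."""
    return now.weekday() in OPEN_DAYS and OPEN_AT <= now.time() < CLOSE_AT


def next_opening(now: datetime) -> datetime:
    """Return the start of the next staffed window that begins after `now`."""
    for offset in range(8):  # today plus the following seven days
        day = now.date() + timedelta(days=offset)
        start = datetime.combine(day, OPEN_AT)
        if day.weekday() in OPEN_DAYS and start > now:
            return start
    raise ValueError("no staffed days configured")


def availability_message(now: datetime) -> str:
    """Build the notice shown in the chat window."""
    if agents_available(now):
        return "A live agent is available now."
    opens = next_opening(now)
    return f"Our AI agent can help right away. Live agents return {opens:%A at %H:%M}."
```

The chat itself stays open in both branches; only the promise about human agents changes, which is the decoupling described above.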

Creating Greater CX Outcomes with Live Web Chat Is Just the Start

Live web chat remains one of the strongest ways to resolve issues quickly while building trust and elevating the customer experience. The key to driving higher engagement is making chat visible, easy to use, and personalized while using AI to handle routine questions and fill in gaps when agents aren’t available.

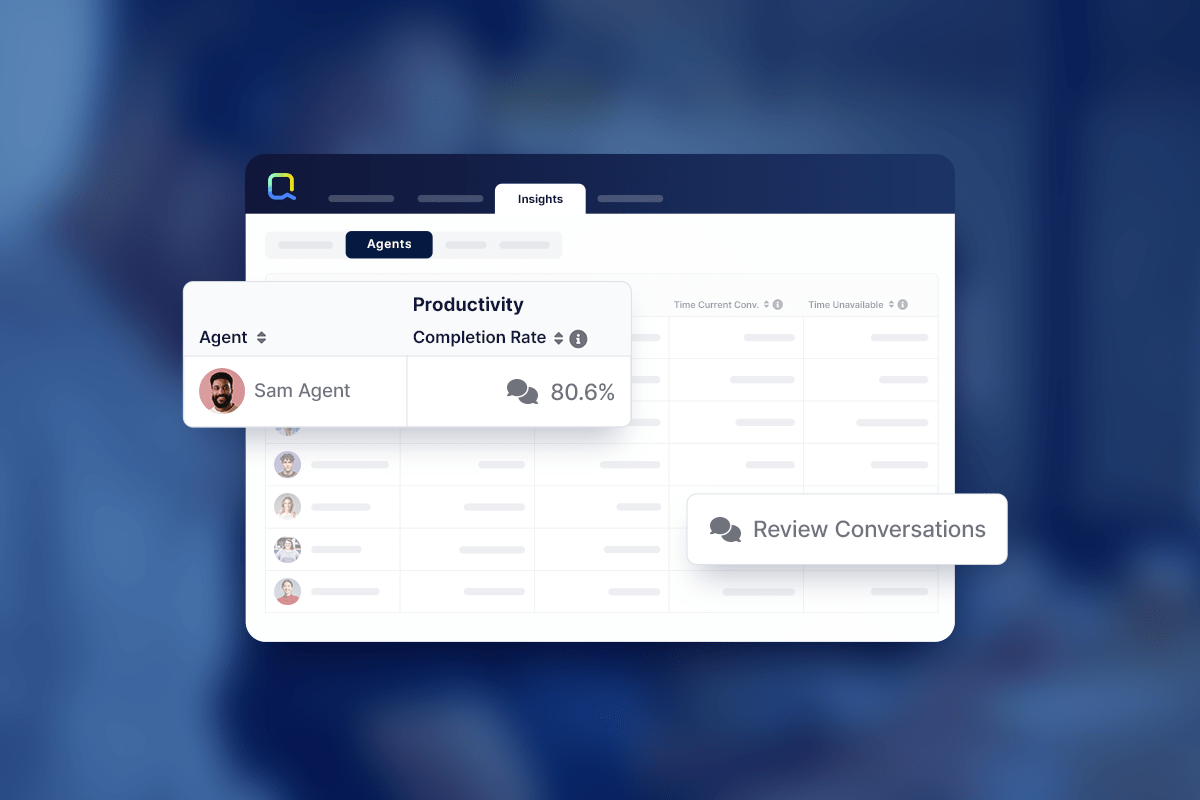

With Quiq, these strategies become even more effective. Quiq helps teams blend AI, automation, and human agents across chat, messaging, and web channels so customers always get fast, reliable support.

If you’re interested in taking additional steps and learning how to use live chat more effectively within your customer-service strategy, be sure to explore our Agentic AI for CX Buyers Kit. It breaks down practical, actionable ways to elevate your support experience—covering automation, AI-driven workflows, and the evolving role of messaging. Inside, you’ll find clear guidance on how to use live chat alongside modern AI capabilities to boost satisfaction, streamline operations, and drive more meaningful customer outcomes.

Frequently Asked Questions (FAQs)

Why should businesses offer live chat support?

Live chat provides instant, convenient communication – reducing wait times and improving customer satisfaction while lowering operational costs.

How can I encourage customers to use live chat?

Make the chat widget visible, promote it across touchpoints (like emails or social), and ensure it’s easy to access without long forms or redirects.

How does live chat benefit support teams?

Agents can handle multiple chats simultaneously, improving efficiency, reducing call volume, and boosting job satisfaction.

Can live chat integrate with other channels?

Absolutely. Live chat can be part of an omnichannel strategy that connects web, SMS, and social interactions for a seamless customer experience.

What metrics should I track to measure chat success?

Monitor chat volume, first-response time, resolution time, CSAT scores, and conversion rates to understand performance and customer satisfaction.
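For teams that export chat logs, these metrics can be computed with a short script. The Python sketch below is one possible approach, not a standard: the `ChatRecord` fields are hypothetical, and CSAT is calculated here as the percentage of surveys scoring 4 or 5 out of 5, which is a common convention but may differ from how your platform reports it.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import List, Optional


@dataclass
class ChatRecord:
    # Hypothetical export format for a single chat.
    started: datetime
    first_reply: datetime
    resolved: datetime
    csat: Optional[int]  # survey score from 1 to 5, or None if unanswered
    converted: bool      # whether the chat led to a purchase


def summarize(chats: List[ChatRecord]) -> dict:
    """Compute the headline chat metrics for a non-empty list of chats."""
    if not chats:
        raise ValueError("need at least one chat to summarize")
    rated = [c.csat for c in chats if c.csat is not None]
    return {
        "chat_volume": len(chats),
        "avg_first_response_s": mean(
            (c.first_reply - c.started).total_seconds() for c in chats
        ),
        "avg_resolution_s": mean(
            (c.resolved - c.started).total_seconds() for c in chats
        ),
        # Share of rated chats scoring 4 or 5; None when nobody answered.
        "csat_pct": 100 * sum(s >= 4 for s in rated) / len(rated) if rated else None,
        "conversion_rate": sum(c.converted for c in chats) / len(chats),
    }
```

Tracking the same summary week over week makes it easier to see whether changes such as shorter forms or wider chat promotion are actually moving response times and satisfaction.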