From ChatGPT and Bard to BLOOM and Claude, there is now a veritable ocean of different large language models (LLMs) for you to choose from. Some of them are specialized for specific use cases, some are open-source, and there’s a huge variance in the number of parameters they contain.

If you find yourself fascinated by this technology and interested in using it in your contact center, it can be hard to know how to choose the right tool for the job.

Today, we’re going to tackle this issue head-on. After a brief discussion of the history of LLMs, we’ll talk about specific criteria you can use to evaluate LLMs, sources of additional information, and some of the better-known options.

Let’s get going!

A Brief History of Generative AI

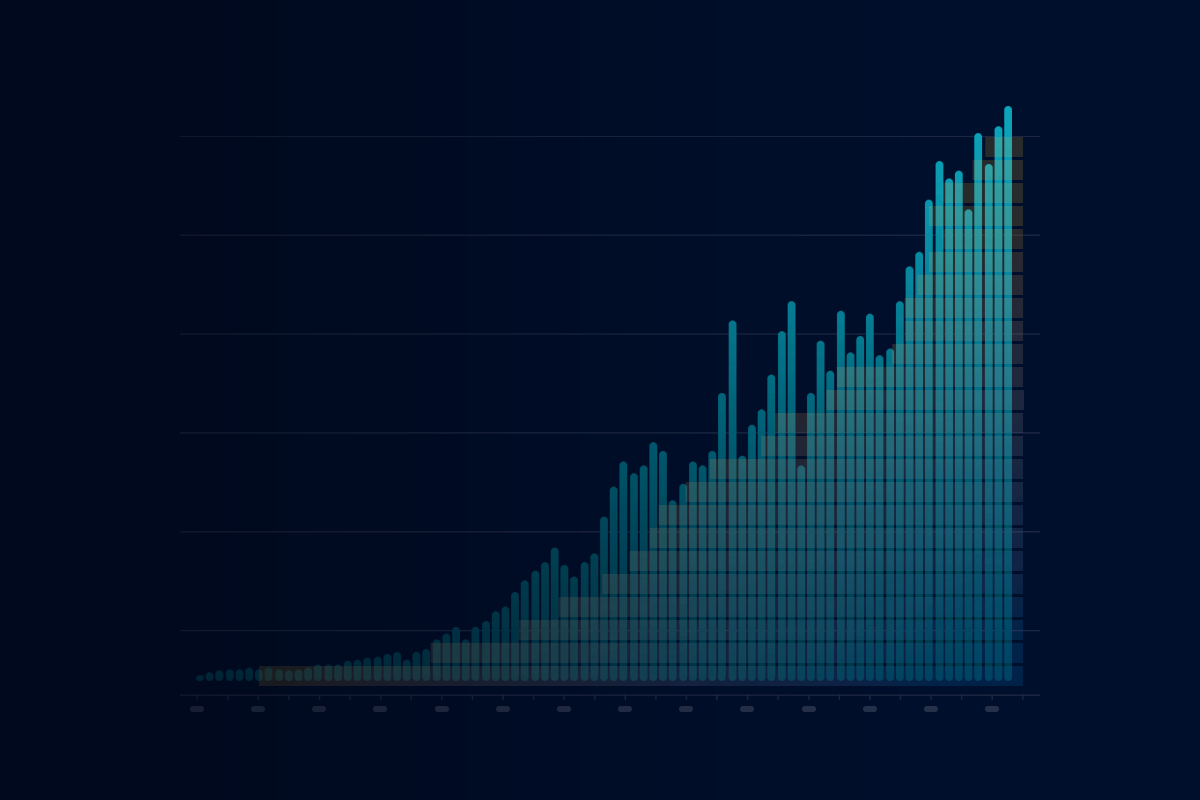

Though it may feel like LLMs and generative AI exploded onto the scene all of half a year ago, in fact, the basic research powering these advances goes back much further.

Way back in the 1940s, Walter Pitts and Warren McCulloch drew upon early research on the brain to design artificial neurons. Though these worked, they couldn’t be deployed for anything particularly useful until the invention of the backpropagation algorithm in 1985. This allowed larger neural networks to be trained effectively, and in 1989 Yann LeCun built a convolutional system able to identify handwritten numbers.

In the decade that followed, architectural discoveries like long short-term memory networks (introduced in 1997) made it possible for machine learning algorithms to learn far more complex relationships within data, laying the foundations for them to eventually revolutionize work in places like contact centers.

What’s more, the opening decade of the 2000s marked the beginning of the big data era. For all their power, generative pre-trained models like ChatGPT are not terribly efficient learners. To be able to output language or images, they must be shown many, many examples from which to derive the statistical function that allows them to create surprising new output later.

Once researchers began the practice of publishing enormous datasets, a key obstacle to building large, useful systems was removed. When combined with the preceding six decades of foundational conceptual work, this was enough to allow us here in 2023 to witness the birth of generative AI and large language models.

How to Compare Large Language Models?

If you’re shopping around for a large language model for a particular application, it makes sense to first get clear on the evaluation criteria you should be using. That’s what we’ll cover in the sections below.

Evaluating LLMs Based on Industry

One of the more remarkable aspects of ChatGPT is that it’s so good at so many things. Out of the box (or sometimes with a little fine-tuning) it can perform very well at answering questions, summarizing text, translating between natural languages, and much more.

However, there may well be situations in which you’d want to use a domain-specific LLM, one that has been trained on medical or legal text, financial data, etc. The basic process of training a generative model is now being used to build neural networks for material design, protein synthesis, and music, among other things.

So, if you’re considering using a generative pre-trained model in your business, one thing you might want to think about early on is whether you want to try to find a domain-specific model, or a general model that you train on your own data.

If you do look for a domain-specific model, be aware that the space is very new and there might not be one available yet (though given how much attention is going into generative AI right now, there’s also a decent chance that one will be released in relatively short order).

Alternatively, you could try to fine-tune a pre-trained model. Getting into the nuances of fine-tuning, zero-shot learning, few-shot learning, and prompt engineering is beyond the scope of this article, but suffice it to say that there are many ways for you to get a generic LLM to be better at a smaller range of specific tasks.

If you’re an engineer designing circuits for quantum computers this might not be sufficient, but for those of us working in customer experience and contact centers, a well-honed prompt or a half-dozen examples might be more than enough for substantial performance boosts.
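To make the "half-dozen examples" idea concrete, here is a minimal sketch of few-shot prompting in Python. The function name, the message categories, and the sample messages are all invented for illustration, and the code only assembles the prompt text; sending it to a model is left to whichever LLM you choose.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new query."""
    parts = [instruction.strip(), ""]
    for customer_message, label in examples:
        parts.append(f"Message: {customer_message}")
        parts.append(f"Category: {label}")
        parts.append("")
    # Leave the final category blank so the model completes it.
    parts.append(f"Message: {query}")
    parts.append("Category:")
    return "\n".join(parts)


# Hypothetical contact-center examples, invented for illustration.
EXAMPLES = [
    ("My package never arrived.", "shipping"),
    ("I was charged twice this month.", "billing"),
    ("How do I reset my password?", "account"),
]

prompt = build_few_shot_prompt(
    "Classify each customer message into one category.",
    EXAMPLES,
    "The tracking link says my order is stuck in transit.",
)
print(prompt)
```

The model sees a handful of solved cases and one unsolved case in the same format, which is often enough to steer a general-purpose LLM toward the narrow task you care about.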

Evaluating LLMs By Language

Given that English is a sort of lingua franca (should it be lingua anglica?) for the tech community and makes up nearly 60% of the websites on the internet, it’s no surprise that it also comprises the bulk of the training data going into modern LLMs.

ChatGPT and other systems are often pretty good at multi-lingual tasks by default, but they don’t perform equally well in all languages. As you can probably guess, they’re best at “high-resource” languages (English, Spanish, Chinese), somewhat worse at “medium-resource” languages (Portuguese, Hindi), and much worse at “low-resource” languages (Haitian Creole, Swahili).

If you’re serving customers with a medium- or low-resource language and need really high levels of accuracy, you’ll probably have to stick with human beings for a while. Otherwise, test ChatGPT or whatever system you end up going with for how well it can handle multi-lingual problems like question answering and translation.
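One simple way to run that kind of test is to ask the same questions in each language you support and compare accuracy per language. The sketch below assumes a hypothetical `ask_model` callable that wraps whatever LLM you are evaluating; `fake_model` and the three test cases are stand-ins invented so the example runs on its own.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# (language, question, expected answer) triples; a real test set would be much larger.
TestCase = Tuple[str, str, str]


def accuracy_by_language(
    ask_model: Callable[[str], str],
    cases: List[TestCase],
) -> Dict[str, float]:
    """Return the fraction of correct answers per language."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for language, question, expected in cases:
        total[language] += 1
        answer = ask_model(question)
        # Lenient check: the expected string appears in the answer, ignoring case.
        if expected.lower() in answer.lower():
            correct[language] += 1
    return {lang: correct[lang] / total[lang] for lang in total}


def fake_model(question: str) -> str:
    """Stand-in for a real LLM call; it only knows two canned answers."""
    canned = {
        "What is the capital of France?": "The capital is Paris.",
        "¿Cuál es la capital de Francia?": "La capital es París.",
    }
    return canned.get(question, "I don't know.")


# Illustrative cases only; the Swahili question is meant to mirror the other two.
CASES = [
    ("en", "What is the capital of France?", "Paris"),
    ("es", "¿Cuál es la capital de Francia?", "París"),
    ("sw", "Mji mkuu wa Ufaransa ni upi?", "Paris"),
]

scores = accuracy_by_language(fake_model, CASES)
print(scores)
```

Substring matching is a crude notion of "correct", so for anything customer-facing you would likely want a native speaker to review a sample of the answers as well.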

Whether They’re Open-Source or Closed-Source

No doubt you’ve heard of “open-source” software, a term which refers to the practice of releasing source code to the public where it can be forked, modified, and scrutinized.

The open-source approach to software development has become incredibly popular, and this enthusiasm has partially bled over into artificial intelligence and machine learning. It’s now fairly common to open source datasets, models, and even training frameworks like TensorFlow.

How does this translate to the realm of large language models? In truth, it’s a bit of a mixture. Some models are proudly open-sourced, while others jealously guard their model’s weights, training data, and source code.

This is one thing you might want to consider as you carry out your search for a large language model. Some of the very best models, like ChatGPT, are closed-source. You won’t be able to fork the ChatGPT code base and modify it; you’ll be relegated to feeding queries into it via an API.

The advantage to going with a closed-source model, of course, is that you needn’t lie awake at night worrying about managing a codebase thousands of lines long, nor will you need to concern yourself with hiring the expensive engineers who know how to read and use it.

The downside, naturally, is that you’re entirely beholden to the team who builds and offers the LLM over their API. If they make updates or go bankrupt, you could be left scrambling last-minute to find an alternative solution.
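One way to soften that dependency is to put a thin layer between your application and any single vendor, so a backup can take over when the primary fails. The following is a rough sketch of that idea, not a production pattern: `hosted_api` and `self_hosted_model` are hypothetical placeholders, and a real integration would call an actual client library in their place.

```python
from typing import Callable, List

# Each provider is any callable that maps a prompt to a completion.
Provider = Callable[[str], str]


def complete_with_fallback(prompt: str, providers: List[Provider]) -> str:
    """Try each provider in order and return the first successful completion."""
    errors = []
    for provider in providers:
        try:
            return provider(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(f"{getattr(provider, '__name__', 'provider')}: {exc}")
    raise RuntimeError("All providers failed: " + "; ".join(errors))


def hosted_api(prompt: str) -> str:
    """Hypothetical stand-in for a closed-source model behind an API."""
    raise ConnectionError("service unavailable")


def self_hosted_model(prompt: str) -> str:
    """Hypothetical stand-in for an open-source model you run yourself."""
    return f"[self-hosted] reply to: {prompt}"


reply = complete_with_fallback("Where is my order?", [hosted_api, self_hosted_model])
print(reply)
```

Because the rest of your code only ever calls `complete_with_fallback`, swapping vendors later means changing the provider list, not rewriting the application.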

There’s no one-size-fits-all approach here; if you have the in-house technical expertise to fork an open-source LLM and you want to modify it, open-source is probably the way to go. But be aware that this is a substantial commitment, and as things stand today, the very best generative pre-trained language models are closed-source, so there’s a performance penalty that you’ll have to account for.

Leaderboards and Comparison Websites for Large Language Models

Another route you can go in comparing current LLMs is to avail yourself of a service built for this purpose.

Whatever rumors you may have heard, programmers are human beings, and human beings have a fondness for ranking and categorizing pretty much everything – sports teams, guitar solos, classic video games, you name it.

Naturally, as LLMs have become better-known, leaderboards and websites have popped up comparing them along all sorts of different dimensions. Here are a few you can use as you search around for the best tooling.

Leaderboards

In the past couple of months, leaderboards have emerged which directly compare various LLMs.

One is AlpacaEval, which uses a custom dataset to compare ChatGPT, Claude, Cohere, and other LLMs on how well they’re able to follow instructions. AlpacaEval boasts high agreement with human evaluators, so in our estimation it’s probably a suitable way of initially screening for LLM tools, though more extensive checks might be required to settle on a final list.

Another good choice is Chatbot Arena, which pits two anonymous models side-by-side, has you rank which one is better, then aggregates all the scores into a leaderboard.
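If you’re curious how head-to-head votes can become a ranking, an Elo-style rating (the system long used in chess) is one common approach. The sketch below illustrates the general technique and should not be read as a description of any particular site’s implementation; the model names, the votes, and the `k` and `start` values are all assumptions.

```python
from typing import Dict, List, Tuple


def elo_leaderboard(
    votes: List[Tuple[str, str]],
    k: float = 32.0,
    start: float = 1000.0,
) -> List[Tuple[str, float]]:
    """Turn (winner, loser) votes into ratings, sorted best first."""
    ratings: Dict[str, float] = {}
    for winner, loser in votes:
        r_w = ratings.setdefault(winner, start)
        r_l = ratings.setdefault(loser, start)
        # Probability the winner was expected to win, given current ratings.
        expected_w = 1.0 / (1.0 + 10 ** ((r_l - r_w) / 400.0))
        # An upset (low expected_w) moves ratings more than a predictable result.
        delta = k * (1.0 - expected_w)
        ratings[winner] = r_w + delta
        ratings[loser] = r_l - delta
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


# Made-up votes between three placeholder models.
VOTES = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
    ("model_a", "model_b"),
]

for name, rating in elo_leaderboard(VOTES):
    print(f"{name}: {rating:.0f}")
```

Note that sequential Elo updates depend on the order in which votes arrive, which is one reason a ranking built from only a few votes should be treated with caution.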

Finally, there is Hugging Face’s Open LLM Leaderboard, which is a similar endeavor. Anyone can submit a new model for evaluation, all of which are then assessed based on a small set of key benchmarks from the Eleuther AI Language Model Evaluation Harness. These capture how well the models do in answering simple science questions, common-sense queries, and more.

When combined with the criteria we discussed earlier, these leaderboards and comparison websites ought to give you everything you need to find a powerful generative pre-trained language model for your application.

What are the Currently-Available Large Language Models?

Okay! Now that we’ve worked through all this background material, let’s turn to discussing some of the major LLMs that are available today. We make no promises about these entries being comprehensive (and even if they were, there’d be new models out next week), but it should be sufficient to give you an idea as to the range of options you have.

ChatGPT and GPT

Obviously, the titan in the field is OpenAI’s ChatGPT, which is really just a version of GPT that has been fine-tuned through reinforcement learning from human feedback to be especially good at sustained dialogue.

ChatGPT and GPT have been used in many domains, including customer service, question answering, summarization, and translation.

LLaMA

In February of 2023, Meta’s AI team released its Large Language Model Meta AI, or LLaMA. At up to 65 billion parameters it is not quite as big as GPT, and this is intentional, as its purpose is to aid researchers who may not have the budget or expertise required to provision a behemoth LLM.

LaMDA

Like GPT-4, Google’s LaMDA is based on the transformer architecture and is aimed squarely at dialogue. It is able to converse on a nearly infinite number of subjects, and from the beginning, the Google team has focused on having LaMDA produce interesting responses that are nevertheless free of abuse and harmful language.

MT-NLG

The Megatron-Turing Natural Language Generation (MT-NLG) model from Microsoft and Nvidia sports a staggering 530 billion parameters (more than half a trillion), and excels at “…Completion prediction, Reading comprehension, Commonsense reasoning, Natural language inferences, Word sense disambiguation,” and more.

StableLM

StableLM is a lightweight, open-source language model built by Stability AI. It’s trained on a new dataset that builds on “The Pile”, which is itself made up of over 20 smaller, high-quality datasets that together amount to roughly 825 GB of natural language.

GPT4All

What would you get if you trained an LLM “…on a massive curated corpus of assistant interactions, which included word problems, multi-turn dialogue, code, poems, songs, and stories,” then released it under an Apache 2.0 license? The answer is GPT4All, an open-source model whose purpose is to encourage research into what these technologies can accomplish.

Alpaca

The Alpaca LLM project developed by Stanford is designed around following instructions. As things stand, Alpaca isn’t considered safe yet, so it is intended to be used by research teams exploring the frontiers of LLMs.

BLOOM

The BigScience Large Open-Science Open-Access Multilingual Language Model (BLOOM) was released in 2022. The team that put it together consisted of more than a thousand researchers from all over the world, and unlike the other models on this list, it’s specifically meant to be interpretable.

GATO

DeepMind is one of the leading players advancing the frontiers of AI, and their GATO LLM is correspondingly remarkable. Like GPT-4, GATO is multimodal, meaning it can work with text and images, play games, and even control a robot.

Pathways Language Model (PaLM)

Like LaMDA, PaLM is from Google, and is also enormous (540 billion parameters). It excels in many language-related tasks, and became famous when it produced really high-level explanations of tricky jokes.

Claude

Anthropic’s Claude is billed as a “next-generation AI assistant.” It’s not known how big the model is, but it does come in two modes: the full Claude, and Claude Instant, which is faster but produces lower-quality responses.

FAQs

Now, let’s turn to some common sources of confusion where comparing current LLMs is concerned.

Overcoming the Limitations of Large Language Models

Large language models are remarkable tools, but they nevertheless suffer from some well-known limitations. They tend to hallucinate facts, for example, sometimes fail at basic arithmetic, and can get lost in the course of lengthy conversations.

Overcoming the limitations of large language models is mostly a matter of fine-tuning and monitoring them. The fine-tuning data you use must be carefully curated in order to cover basic failure modes, and you must have a robust means of checking on their output in case they go off the rails somewhere along the line.
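As a small illustration of what “checking on their output” might look like, the sketch below runs a few cheap checks on a model’s reply before it reaches a customer. The rules, the five-digit `#12345` order-number format, and the `check_reply` name are assumptions made up for this example; real monitoring would be broader and tailored to your own data.

```python
import re
from typing import List, Set


def check_reply(reply: str, known_order_ids: Set[str], max_chars: int = 600) -> List[str]:
    """Return a list of problems found in a model reply; empty means it passed."""
    problems = []
    if not reply.strip():
        problems.append("empty reply")
    if len(reply) > max_chars:
        problems.append("reply too long")
    # Flag order numbers the model mentions that are not in our records,
    # a cheap proxy for one kind of hallucinated fact.
    for order_id in re.findall(r"#(\d{5})\b", reply):
        if order_id not in known_order_ids:
            problems.append(f"unknown order id #{order_id}")
    return problems


KNOWN_ORDERS = {"12345", "67890"}  # hypothetical records

print(check_reply("Your order #12345 has shipped.", KNOWN_ORDERS))
print(check_reply("Order #99999 is delayed.", KNOWN_ORDERS))
```

A reply that fails any check could be routed to a human agent instead of being sent automatically.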

What are the Best Large Language Models?

Having read all of the foregoing content, it’s natural to wonder if there’s a single model that best suits your enterprise. The answer is probably “yes”, but which model is ultimately the best fit for you depends a lot on the specifics. You’ll have to think about whether you want an open-source model or you’re content with hitting an API, whether your use case is outside the scope of ChatGPT and better handled with a bespoke model, etc.
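If it helps to make those trade-offs explicit, a weighted scoring matrix is one simple option. Everything in the sketch below is a placeholder: the criteria names, the weights, the 1-to-5 scores, and the two candidate models are invented to show the arithmetic, not to rate any real product.

```python
from typing import Dict, List, Tuple


def rank_models(
    scores: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> List[Tuple[str, float]]:
    """Return (model, weighted average score) pairs, best first."""
    total_weight = sum(weights.values())
    ranked = []
    for model, per_criterion in scores.items():
        weighted = sum(weights[c] * per_criterion[c] for c in weights) / total_weight
        ranked.append((model, weighted))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights: how much each criterion matters to your team.
WEIGHTS = {"domain_fit": 3, "language_coverage": 2, "openness": 1, "leaderboard": 2}

# Hypothetical 1-5 scores for two placeholder candidates.
SCORES = {
    "hosted_model": {"domain_fit": 4, "language_coverage": 5, "openness": 1, "leaderboard": 5},
    "open_model": {"domain_fit": 3, "language_coverage": 3, "openness": 5, "leaderboard": 3},
}

ranking = rank_models(SCORES, WEIGHTS)
for model, score in ranking:
    print(f"{model}: {score:.2f}")
```

Changing the weights is the interesting part: a team that prizes the ability to modify the model would raise `openness`, and the ranking could flip.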

Choosing Among the Current Large Language Models

With all the different LLMs on offer, it’s hard to narrow the search down to the one that’s best for you. By carefully weighing the different metrics we’ve discussed in this article, you can choose an LLM that meets your needs with as little hassle as possible.

Another way to minimize your headaches is to use an industry-leading solution that works out of the box to deliver world-class functionality. That’s exactly what we’re achieving here at Quiq. Schedule a demo to see how our conversational AI platform can help you build a forward-facing contact center.