Generative AI tools, such as the large language model (LLM) ChatGPT and the image-generation tool DALL-E, are already having a major impact in places like marketing firms and contact centers. Given their ability to create compelling blog posts, email blasts, YouTube thumbnails, and more, we believe they’re only going to become an increasingly integral part of the workflows of the future.

But for all their potential, there remain serious questions about the short- and long-term safety of generative AI. In this piece, we’re going to zero in on one particular constellation of dangers: those related to privacy.

We’ll begin with a brief overview of how generative AI works, then turn to various privacy concerns, and finish with a discussion of how these problems are being addressed.

Let’s dive in!

What is Generative AI (and How is it Trained)?

In the past, we’ve had plenty to say about how generative AI works under the hood. But many of the privacy implications of generative AI are tied directly to how these models are trained and how they generate output, so it’s worth briefly reviewing all of this theoretical material, for the sake of completeness and to furnish some much-needed context.

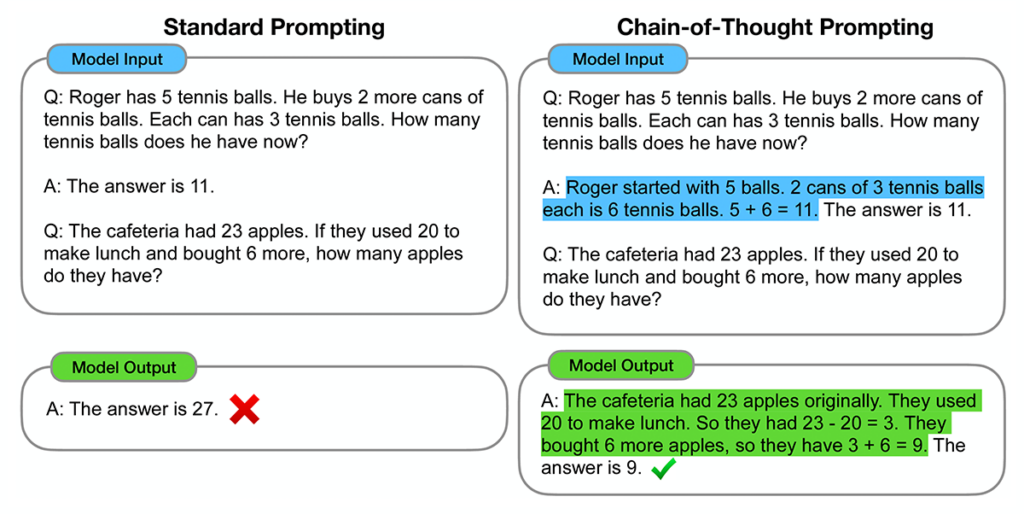

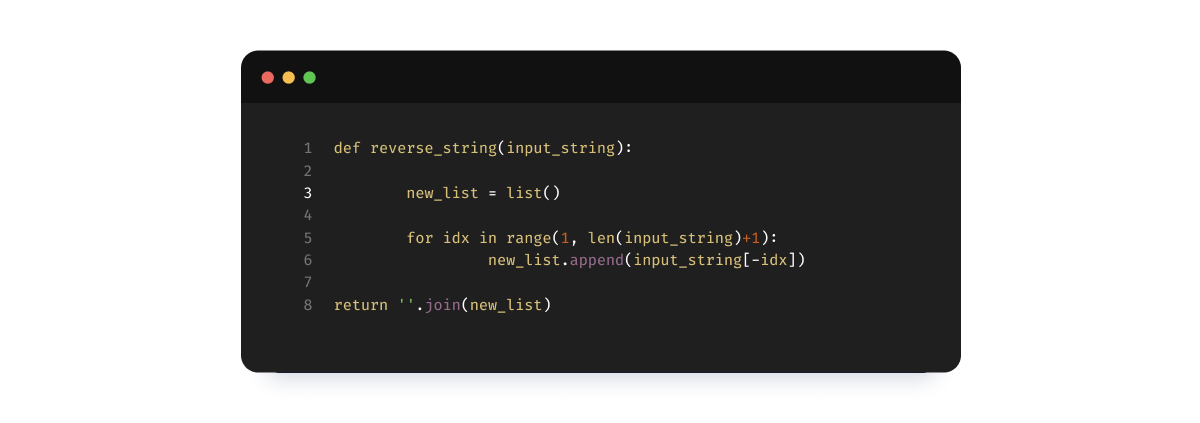

When an LLM is trained, it’s effectively fed huge amounts of text data from the internet, books, and similar sources of human-generated language. What it tries to do is predict how a sentence or paragraph will continue based on the preceding words.

Let’s concretize this a bit. You probably already know some of these famous quotes:

- “You must be the change you wish to see in the world.” (Mahatma Gandhi)

- “You may say I’m a dreamer, but I’m not the only one.” (John Lennon)

- “The only thing we have to fear is fear itself.” (Franklin D. Roosevelt)

What ChatGPT does is try to predict how each quote ends based on everything that comes before. It’ll read “You must be the change you”, for example, and then try to predict “wish to see in the world.”

When the training process begins, the model basically generates nonsense, but as it develops a better and better grasp of English (and other languages), it gradually becomes the remarkable artifact we know today.
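To make the idea of predicting the next word more tangible, here is a toy sketch in Python. It is only an illustration under heavy simplifying assumptions: real LLMs use neural networks operating on tokens and are trained on vastly more text, whereas this example simply counts which word follows which in the three quotes above. The names `CORPUS`, `train_bigram_model`, and `predict_next` are our own inventions for this example.

```python
from collections import Counter, defaultdict

# Toy "training data": the three quotes, lowercased and without punctuation.
CORPUS = [
    "you must be the change you wish to see in the world",
    "you may say i'm a dreamer but i'm not the only one",
    "the only thing we have to fear is fear itself",
]


def train_bigram_model(sentences):
    """Count, for every word, which words follow it and how often."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it has none."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigram_model(CORPUS)
print(predict_next(model, "wish"))  # to
```

Even this crude model has, in a sense, memorized its training text, which hints at why the contents of the training data matter so much for privacy.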

Generative AI Privacy Concerns

From a privacy perspective, two things about this process might concern us:

The first is what data are fed into the model, and the second is what kinds of output the models might generate.

We’ll have more to say about each of these in the next section, then cover some broader concerns about copyright law.

Generative AI and Sensitive Data

First, there’s real concern over the possibility that generative AI models have been shown what is usually known as “Personally Identifiable Information” (PII). This is data such as your real name, your address, etc., and can also include things like health records that might not include your name but can still be used to figure out who you are.

The truth is, we have only limited visibility into the data that LLMs are shown during training. Given how much of the internet they’ve ingested, it’s a safe bet that at least some sensitive information has been included. And even if a model hasn’t seen a particular piece of PII during training, there are myriad ways in which it can be exposed to it later. You can imagine, for example, someone feeding customer data into an LLM to produce tailored content for them, not realizing that, depending on the provider’s data policies, those inputs may be retained and used to train future versions of the model, at which point the data becomes part of its internal structure.

There isn’t a great way at present to remove data from an LLM, and no one yet knows how to fine-tune a model so that it reliably never exposes that data in the future.
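Since data is so hard to remove after the fact, one common-sense precaution is to strip obvious identifiers from text before it ever reaches a model. The following is a minimal sketch of that idea, not a complete solution: the two regular expressions are deliberately simple assumptions that only catch email addresses and US-style phone numbers, and the names `EMAIL_PATTERN`, `PHONE_PATTERN`, and `redact_pii` are hypothetical. Real PII detection needs far broader coverage (names, addresses, account numbers, and so on).

```python
import re

# Deliberately simple, illustrative patterns. They will miss many real-world
# formats and should not be treated as a complete PII detector.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text):
    """Replace email addresses and US-style phone numbers with placeholder tags."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


prompt = "Write a renewal offer for jane.doe@example.com, phone 555-123-4567."
print(redact_pii(prompt))
# Write a renewal offer for [EMAIL], phone [PHONE].
```

The redacted prompt can then be sent to the model, and the placeholders swapped back for the real values in your own systems afterwards.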

The other major concern around sensitive data in the context of generative AI is that these models will simply hallucinate allegations about people that damage their reputations and compromise their privacy. We’ve written before about the now-infamous case of law professor Jonathan Turley, whom ChatGPT falsely accused of sexual harassment. We imagine that in the future there will be many more such fictitious scandals, potentially ones that are very damaging to the reputations of the accused.

Generative AI, Intellectual Property, and Copyright Law

There have also been questions about whether training ChatGPT and similar models on certain data might violate copyright law. Earlier this year, in fact, a number of well-known writers filed suit against both OpenAI (the creator of ChatGPT) and Meta (the creator of LLaMA).

The suit claims that these teams trained their models on proprietary data contained in the works of authors like Michael Chabon, “without consent, without credit, and without compensation.” Similar charges have been made against Midjourney and Stability AI, both of which have created AI-based image generation models.

These are rather thorny questions of jurisprudence. Though copyright law is a fairly sophisticated tool for dealing with various kinds of human conflicts, no one has ever had to deal with the implications of enormous AI models training on this much data. Only time will tell how the courts will ultimately decide, but if you’re using customer-facing or agent-facing AI tools in a place like a contact center, it’s at least worth being aware of the controversy.

Mitigating Privacy Risks from Generative AI

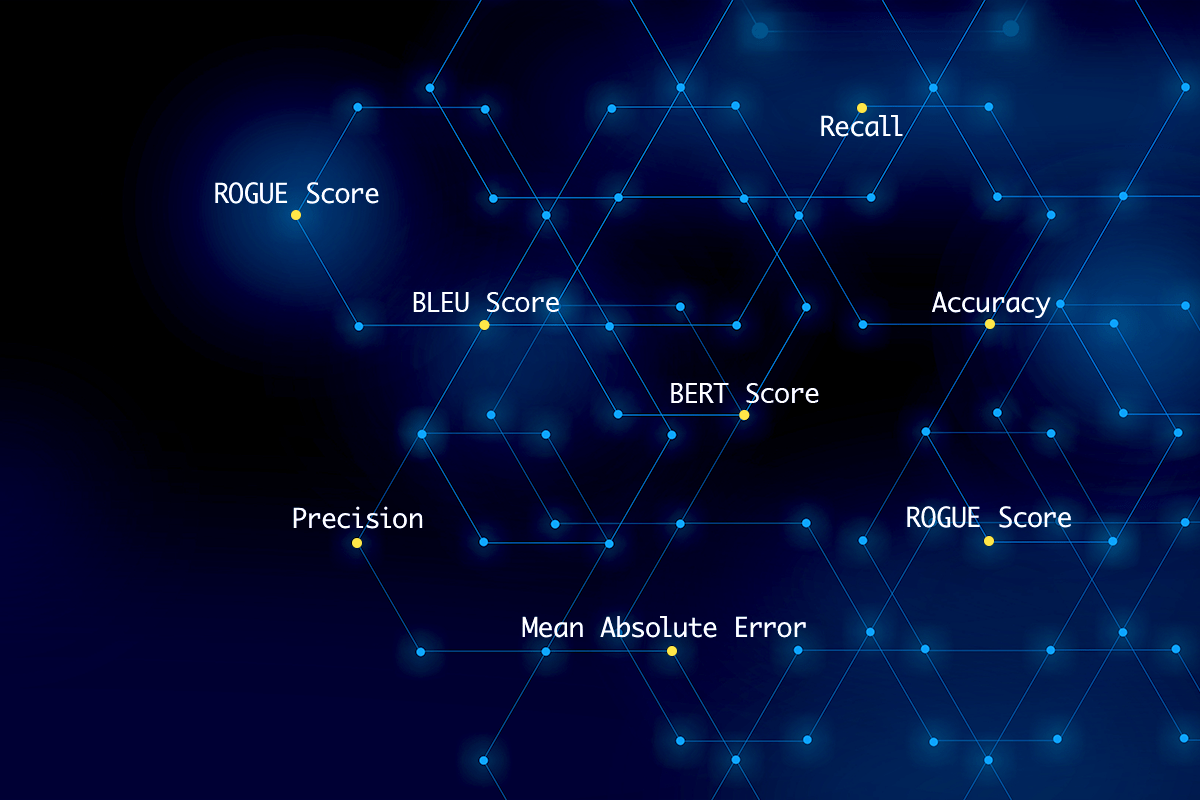

Now that we’ve elucidated the dimensions of the privacy concerns around generative AI, let’s spend some time talking about various efforts to address these concerns. We’ll focus primarily on data privacy laws, better norms around how data is collected and used, and the ways in which workforce training can help.

Data Privacy Laws

First, and most significant, are attempts by different regulatory bodies to address data privacy issues with legislation. You’re probably already familiar with the European Union’s General Data Protection Regulation (GDPR), which puts numerous rules in place regarding how data can be gathered and used, including in advanced AI systems like LLMs.

Canada’s lesser-known proposed Artificial Intelligence and Data Act (AIDA) would require anyone building a potentially disruptive AI system, like ChatGPT, to create guardrails that minimize the likelihood of the system producing biased or harmful output.

It’s not yet clear to what extent laws like these will achieve their objectives, but we expect that they’ll be just the opening salvo in a long string of legislative attempts to ameliorate the potential downsides of AI.

Robust Data Collection and Use Policies

There are also many things that private companies can do to address privacy concerns around data, without waiting for bureaucracies to catch up.

There’s too much to say about this topic to do it justice here, but we can make a few brief comments to guide you in your research.

One thing many companies are investing in is better privacy-preserving techniques. Differential privacy, for example, is emerging as a promising approach: it adds carefully calibrated random noise to computations over private data, so that useful aggregate patterns can still be learned while limiting how much any one person’s data can influence, and therefore be exposed by, the result.
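As a rough illustration of the idea behind differential privacy, here is a minimal sketch of the Laplace mechanism applied to a simple counting query. It is a teaching example under stated assumptions, not a production implementation: the function names, the `opted_in` field, and the sample records are all hypothetical, and real deployments should rely on a vetted library rather than hand-rolled noise. The parameter `epsilon` is the privacy budget: smaller values mean more noise and stronger privacy.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that satisfy `predicate`.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical customer records, used only for illustration.
customers = [
    {"name": "A", "opted_in": True},
    {"name": "B", "opted_in": False},
    {"name": "C", "opted_in": True},
]
noisy = private_count(customers, lambda c: c["opted_in"], epsilon=0.5)
print(f"Noisy count of opted-in customers: {noisy:.2f}")
```

Because the reported number is randomized, an observer cannot tell with confidence whether any single customer is in the data, yet averages over many people remain roughly accurate.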

Then, of course, there are myriad ways of securely storing data once you have it. This mostly boils down to keeping a tight lid on who is able to access private data (through, e.g., encryption and a strict permissioning system) and carefully monitoring what they do with it once they access it.
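To give a flavor of what permissioning plus monitoring can look like, here is a small sketch of a role-based access check that records every attempt in an audit log. Everything in it is an assumption made for illustration: the roles, the permission names, the in-memory `audit_log` list, and the `access_record` function are hypothetical, and a real system would sit on top of encrypted storage and a tamper-resistant log.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real system would load this
# from a managed policy store rather than hard-coding it.
ROLE_PERMISSIONS = {
    "support_agent": {"read_contact_info"},
    "privacy_officer": {"read_contact_info", "read_health_records"},
}

# In-memory stand-in for what should be an append-only audit store.
audit_log = []


def access_record(user, role, permission, record_id):
    """Allow access only if the role grants the permission; log every attempt."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "permission": permission,
        "record_id": record_id,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{user} ({role}) may not {permission}")
    return f"record {record_id}"  # Placeholder for the real data lookup.
```

Logging denied attempts as well as successful ones is a deliberate choice here, since failed requests are often the first sign that someone is probing for data they shouldn’t see.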

Finally, it helps to be as public as possible about your data collection and use policies. Make sure they’re published somewhere that anyone can read them. Whenever possible, give users the ability to opt out of data collection, if that’s what they want to do.

Better Training for Those Building and Using Generative AI

The last piece of the puzzle is simply to train your workforce in data collection, data privacy, and data management. Sound laws and policies won’t do much good if the actual people who are interacting with private data don’t have a solid grasp of your expectations and protocols.

Because there are so many different ways in which companies collect and use data, there is no one-size-fits-all solution we can offer. But you might begin by sending your employees this article, as a way of opening up a broader conversation about your future data-privacy practices.

Data Privacy in the Age of Generative AI

In all its forms, generative AI is a remarkable technology that will change the world in many ways. As with the printing press, gunpowder, fire, and the wheel, the changes it brings will be both good and bad.

The world will need to think carefully about how to get as many of the advantages out of generative AI as possible while minimizing its risks and dangers.

A good place to start is by focusing on data privacy. Because this is a relatively new problem, there’s a lot of work to be done in establishing legal frameworks, company policies, and best practices. But that also means there’s an enormous opportunity to positively shape the long-term trajectory of AI technologies.