From movie recommendations to chatbots as customer service reps, it seems like machine learning (ML) is absolutely everywhere. But one thing you may not realize is just how much data is required to train these advanced systems, and how much time and energy goes into preparing and labeling that data.

Machine learning engineers have developed many ways of trying to cut down on this bottleneck, and one of the techniques that have emerged from these efforts is semi-supervised learning.

Today, we’re going to discuss semi-supervised learning, how it works, and where it’s being applied.

What is Semi-Supervised Learning?

Semi-supervised learning (SSL) is an approach to ML suited to tasks where you have a large amount of data to learn from, but only a fraction of it is labeled.

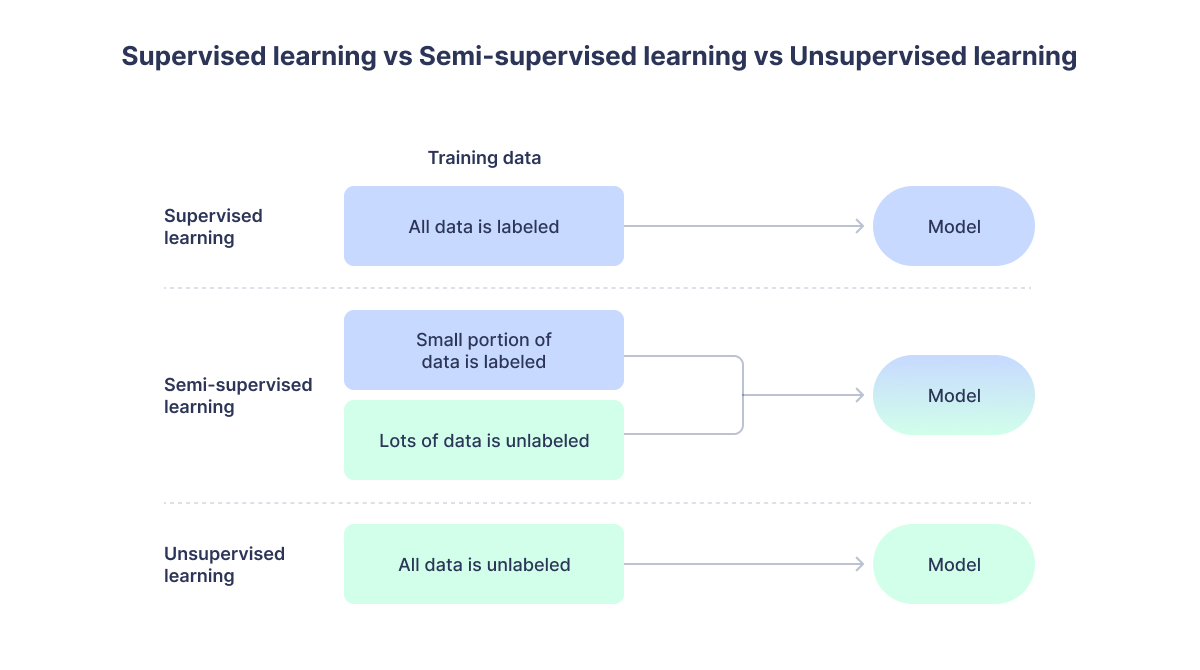

Semi-supervised learning sits somewhere between supervised and unsupervised learning, so we’ll start with those two techniques; understanding them makes it much easier to grasp how semi-supervised learning works.

Supervised learning refers to any ML setup in which a model learns from labeled data. It’s called “supervised” because the model is effectively being trained by showing it many examples of the right answer.

Suppose you’re trying to build a neural network that takes a picture of a plant and classifies its species. If you give it a picture of a rose, it’ll output the “rose” label; if you give it a fern, it’ll output the “fern” label; and so on.

The way to start training such a network is to assemble many labeled images of each kind of plant you’re interested in. You’ll need dozens or hundreds of such images, and they’ll each need to be labeled by a human.

Then, you’ll assemble these into a dataset and train your model on it. The neural network will learn a function that maps features in the image (the concentrations of different colors, say, or the shape of the stems and leaves) to a label (“rose”, “fern”).
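To make “learning a function from features to labels” concrete, here is a minimal Python sketch of one of the simplest supervised learners, a nearest-centroid classifier. It is a stand-in, not a neural network: the two features (a hypothetical petal count and a hypothetical redness score) and the training values are made up for illustration, whereas a real image classifier would learn its own features from pixels.

```python
import math

def fit_centroids(features, labels):
    """'Training': average each class's feature vectors into one centroid."""
    by_label = {}
    for x, y in zip(features, labels):
        by_label.setdefault(y, []).append(x)
    return {y: [sum(axis) / len(xs) for axis in zip(*xs)] for y, xs in by_label.items()}

def predict(centroids, x):
    """'Inference': return the label whose centroid is closest (Euclidean) to x."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))

# Hypothetical labeled examples: (petal count, redness score from 0 to 1).
train_x = [(30, 0.9), (35, 0.8), (0, 0.1), (0, 0.2)]
train_y = ["rose", "rose", "fern", "fern"]

model = fit_centroids(train_x, train_y)
print(predict(model, (28, 0.85)))  # rose
print(predict(model, (1, 0.15)))   # fern
```

Training here is just averaging each class’s examples, and prediction picks the class whose average is closest. Because the two features sit on very different scales, a real pipeline would normally standardize them first.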

One drawback to this approach is that it can be slow and extremely expensive, in both money and time. You could probably put together a labeled dataset of a few hundred plant images in a weekend, but what if you’re training something more complex, where the stakes are higher? A model trained to spot breast cancer in a scan will need thousands of images, perhaps tens of thousands. And not just anyone can identify a cancerous lump: you’ll need a skilled expert to look at each scan and label it “cancerous” or “non-cancerous.”

Unsupervised learning, by contrast, requires no such labeled data. Instead, an unsupervised machine learning algorithm is able to ingest data, analyze its underlying structure, and categorize data points according to this learned structure.

Okay, so what does this mean? A fairly common unsupervised learning task is clustering a corpus of documents thematically, and let’s say you want to do this with a bunch of different national anthems (hey, we’re not going to judge you for how you like to spend your afternoons!).

A good, basic algorithm for a task like this is k-means, so called because it sorts documents into k clusters, each summarized by the mean of its members. K-means begins by randomly initializing k “centroids” (you can think of a centroid as the center point of a cluster), then alternates between two steps: it assigns every data point to its nearest centroid, then moves each centroid to the mean of the points assigned to it. It repeats these steps until the centroids stop moving, which reduces the total squared distance between the data points and the centroids of their clusters.

This process will often involve a lot of fiddling. Since you don’t actually know the optimal number of clusters (remember that this is an unsupervised task), you might have to try several different values of k before you get results that are sensible.
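The assign-then-update loop of k-means, plus the habit of trying several values of k, can be sketched in plain Python as follows. This is a minimal illustration on made-up 2-D points, assuming Euclidean distance and initialization from randomly chosen data points; the function names are hypothetical, and in practice you would typically reach for a library implementation such as scikit-learn’s `KMeans`.

```python
import math
import random

def kmeans(points, k, max_iterations=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on a list of 2-D points.

    Returns (centroids, assignments), where assignments[i] is the cluster of points[i].
    """
    rng = random.Random(seed)
    # Initialize the k centroids at k distinct, randomly chosen data points.
    centroids = rng.sample(points, k)
    for _ in range(max_iterations):
        # Assignment step: every point joins the cluster of its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, assignments

def inertia(points, centroids, assignments):
    """Total squared distance from each point to its cluster's centroid (lower is tighter)."""
    return sum(math.dist(p, centroids[a]) ** 2 for p, a in zip(points, assignments))

# Made-up data: two obvious groups of three points each.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
for k in range(1, 5):
    centroids, assignments = kmeans(points, k)
    print(k, round(inertia(points, centroids, assignments), 2))
```

Inertia tends to shrink as k grows, so a common heuristic is to look for the “elbow” where adding another cluster stops helping much. On this toy data, the big drop happens between k = 1 and k = 2.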

To sort our national anthems into clusters, you’ll first have to pre-process the text (for example, lowercasing it, splitting it into words, dropping common “stop words” such as “the” and “and”, and turning each anthem into a numeric vector such as word counts), then run it through the k-means clustering algorithm. Once that is done, you can start examining the clusters for themes. You might find that one cluster features words like “beauty”, “heart” and “mother”, another features words like “free” and “fight”, another features words like “guard” and “honor”, and so on.
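The pre-processing and theme-spotting steps can be sketched like this, assuming you already have a cluster assignment for each document from a k-means run. The stop-word list is deliberately tiny and hypothetical, and the four input lines are made-up toy snippets, not text from real anthems.

```python
import re
from collections import Counter

# A tiny, hypothetical stop-word list; real pipelines use much longer ones.
STOP_WORDS = {"the", "and", "of", "our", "we", "a", "to", "for", "on", "with", "in", "is"}

def preprocess(text):
    """Lowercase the text, split it into words, and drop stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return [w for w in words if w not in STOP_WORDS]

def bag_of_words(docs):
    """Turn each document into a vector of word counts over a shared vocabulary."""
    tokenized = [preprocess(d) for d in docs]
    vocabulary = sorted({w for tokens in tokenized for w in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append([counts[w] for w in vocabulary])
    return vocabulary, vectors  # these vectors are what k-means would cluster

def top_words_per_cluster(docs, assignments, n=3):
    """List the most frequent remaining words in each cluster, to eyeball its theme."""
    counts = {}
    for doc, cluster in zip(docs, assignments):
        counts.setdefault(cluster, Counter()).update(preprocess(doc))
    return {cluster: [w for w, _ in c.most_common(n)] for cluster, c in sorted(counts.items())}

docs = [
    "Our free land, we fight for the free",
    "Fight on, free people, fight",
    "Guard the honor of the guard",
    "With honor we guard our home",
]
vocabulary, vectors = bag_of_words(docs)
print(vocabulary)
print(top_words_per_cluster(docs, assignments=[0, 0, 1, 1], n=2))
```

Inspecting the top words is exactly the step where a human decides whether a cluster “means” anything, which is why interpretation can be hit or miss.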

As with supervised learning, unsupervised learning has drawbacks. With a clustering task like the one just described, it might take a lot of work and multiple false starts to find a value of k that gives good results. And it’s not always obvious what the clusters actually mean. Sometimes there will be clear features that distinguish one cluster from another, but other times they won’t correspond to anything that’s easily interpretable from a human perspective.

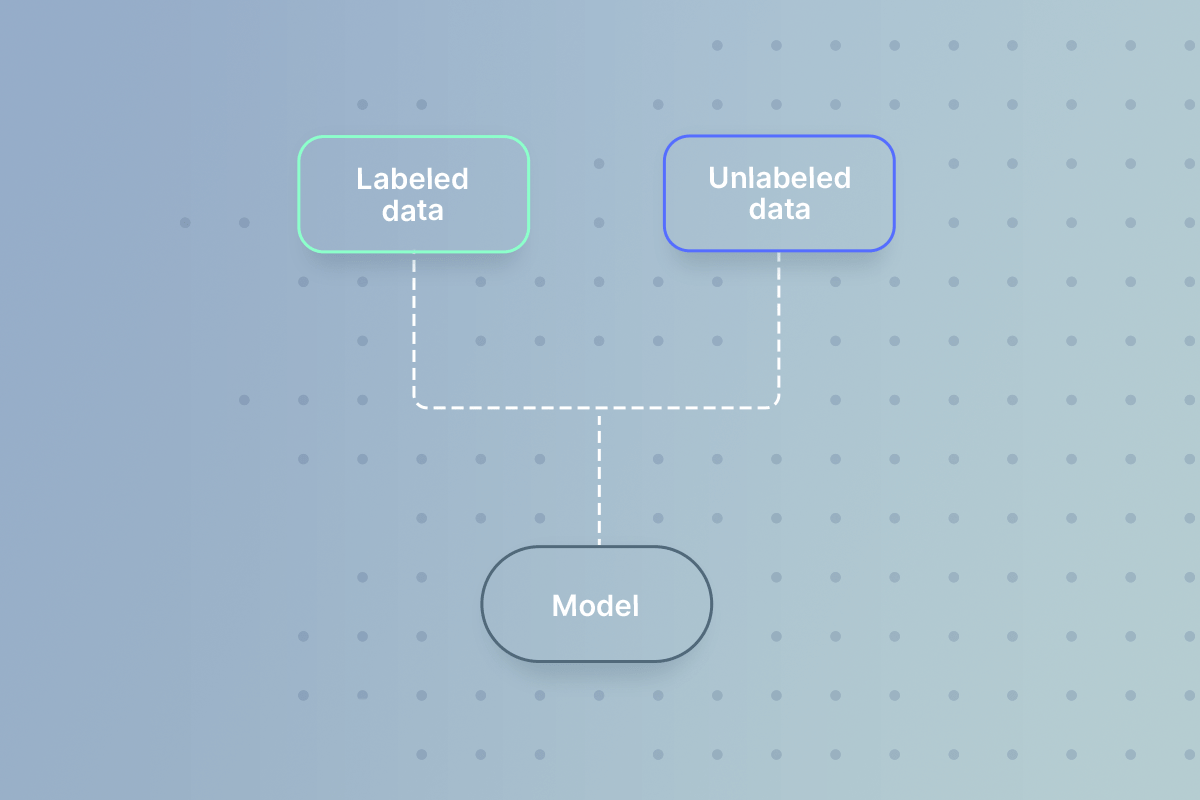

Semi-supervised learning, by contrast, combines elements of both of these approaches. You start by training a model on the subset of your data that is labeled, then apply it to the larger unlabeled part of your data. In theory, this should simultaneously give you a powerful predictive model that is able to generalize to data it hasn’t seen before while saving you from the toil of creating thousands of your own labels.

How Does Semi-Supervised Learning Work?

We’ve covered a lot of ground, so let’s review. Two of the most common forms of machine learning are supervised learning and unsupervised learning. The former tends to require a lot of labeled data to produce a useful model, while the latter can soak up a lot of hours in tinkering and yield clusters that are hard to understand. By training a model on a labeled subset of data and then applying it to the unlabeled data, you can save yourself tremendous amounts of effort.

But what’s actually happening under the hood?

Three main variants of semi-supervised learning are self-training, co-training, and graph-based label propagation, and we’ll discuss each of these in turn.

Self-training

Self-training is the simplest kind of semi-supervised learning, and it works like this.

A small subset of your data will have labels while the rest won’t, so you’ll begin by using supervised learning to train a model on the labeled data. You’ll then use this model to predict labels for the unlabeled data. These predictions are called pseudo-labels because they are machine-generated rather than human-generated.

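Now you have a new dataset: a fraction of it has human-generated labels while the rest has machine-generated pseudo-labels, but every data point now has some kind of label, so a model can be trained on all of it. In practice, you usually keep only the pseudo-labels the model is most confident about, retrain on the enlarged dataset, and repeat until no new confident predictions appear.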
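Here is a minimal sketch of the self-training loop, including the common refinement of keeping only confident pseudo-labels. Everything in it is illustrative: a toy nearest-centroid classifier stands in for “the model”, its confidence is a softmax over negative distances, and the 0.9 threshold and the 2-D points are arbitrary, hypothetical choices. scikit-learn also ships a ready-made wrapper, `sklearn.semi_supervised.SelfTrainingClassifier`, that applies the same idea to any classifier with probability estimates.

```python
import math

def fit_centroids(points, labels):
    """Supervised step: one centroid (mean point) per class."""
    by_label = {}
    for p, y in zip(points, labels):
        by_label.setdefault(y, []).append(p)
    return {y: tuple(sum(axis) / len(ps) for axis in zip(*ps)) for y, ps in by_label.items()}

def predict_with_confidence(centroids, p):
    """Return (label, confidence) using a softmax over negative distances."""
    dists = {y: math.dist(c, p) for y, c in centroids.items()}
    nearest = min(dists.values())
    # Subtracting the smallest distance keeps math.exp from underflowing to zero.
    scores = {y: math.exp(-(d - nearest)) for y, d in dists.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())

def self_train(labeled, labels, unlabeled, threshold=0.9, max_rounds=10):
    """Pseudo-label confident points, add them to the training set, retrain, repeat."""
    train_x, train_y, pool = list(labeled), list(labels), list(unlabeled)
    for _ in range(max_rounds):
        model = fit_centroids(train_x, train_y)
        still_unlabeled = []
        for p in pool:
            label, conf = predict_with_confidence(model, p)
            if conf >= threshold:
                train_x.append(p)      # the point joins the training set...
                train_y.append(label)  # ...with a machine-generated pseudo-label
            else:
                still_unlabeled.append(p)
        if len(still_unlabeled) == len(pool):
            break  # no new confident pseudo-labels, so stop
        pool = still_unlabeled
    return fit_centroids(train_x, train_y), dict(zip(train_x, train_y)), pool

model, point_labels, leftover = self_train(
    labeled=[(0, 0), (10, 10)],
    labels=["fern", "rose"],
    unlabeled=[(1, 1), (2, 2), (9, 9), (8, 8), (5, 5)],
)
print(point_labels)
print(leftover)  # [(5, 5)]
```

The point (5, 5) sits exactly halfway between the two classes, so the model is never confident about it and it stays unlabeled, which is the behavior you want from a confidence threshold.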

Co-training

Co-training has the same basic flavor as self-training, but it has more moving parts. With co-training, you train two models on the labeled data, each on a different set of features (in the literature, these are called “views”).

If we’re still working on that plant classifier from before, one model might be trained on the number of leaves or petals, while another might be trained on their color.

At any rate, now you have a pair of models trained on different views of the labeled data. Each model then generates pseudo-labels for the unlabeled data. When one model is very confident in a pseudo-label (i.e., when the probability it assigns to its prediction is very high), that example and its pseudo-label are added to the other model’s training data, and vice versa. Both models are then retrained, and the process repeats.

Let’s say both models come to an image of a rose. The first model thinks it’s a rose with 95% probability, while the second thinks it’s a tulip with 68% probability. Since the first model is much more confident, its “rose” pseudo-label is added to the second model’s training data, nudging the second model toward the right answer.

Think of it like studying a complex subject with a friend. Sometimes a given topic will make more sense to you, and you’ll have to explain it to your friend. Other times they’ll have a better handle on it, and you’ll have to learn from them.

In the end, you’ll both have made each other stronger, and you’ll get more done together than you would’ve done alone. Co-training attempts to utilize the same basic dynamic with ML models.
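The co-training loop described in this section can be sketched as follows, under several loud assumptions: each plant is described by two hypothetical features, a petal count (view 0) and a redness score from 0 to 100 (view 1); each view gets its own toy one-dimensional nearest-centroid model; and for every unlabeled example, whichever model is more confident (above an arbitrary 0.9 threshold) hands its pseudo-label to the other model’s training set. Classic co-training adds confidently labeled examples to a shared labeled set instead; this variant simply mirrors the study-partner picture.

```python
import math

def fit(values, labels):
    """1-D nearest-centroid model: the mean feature value of each class."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(y, []).append(v)
    return {y: sum(vs) / len(vs) for y, vs in groups.items()}

def predict(model, v):
    """(label, confidence) via a softmax over negative distances to the class means."""
    dists = {y: abs(m - v) for y, m in model.items()}
    nearest = min(dists.values())
    scores = {y: math.exp(-(d - nearest)) for y, d in dists.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())

def co_train(labeled, labels, unlabeled, threshold=0.9, max_rounds=5):
    """Each example is (petal_count, redness); views 0 and 1 keep separate training sets."""
    train_x = [list(labeled), list(labeled)]
    train_y = [list(labels), list(labels)]
    pool = list(unlabeled)

    def refit():
        return [fit([x[v] for x in train_x[v]], train_y[v]) for v in (0, 1)]

    for _ in range(max_rounds):
        models = refit()
        still_unlabeled = []
        for x in pool:
            guesses = [predict(models[v], x[v]) for v in (0, 1)]
            # The more confident view acts as the "teacher" for this example.
            teacher = 0 if guesses[0][1] >= guesses[1][1] else 1
            label, conf = guesses[teacher]
            if conf >= threshold:
                student = 1 - teacher
                train_x[student].append(x)
                train_y[student].append(label)
            else:
                still_unlabeled.append(x)
        if len(still_unlabeled) == len(pool):
            break  # neither model was confident about anything new
        pool = still_unlabeled
    return refit(), train_x, train_y

models, train_x, train_y = co_train(
    labeled=[(30, 80), (6, 70)],  # hypothetical (petal count, redness) values
    labels=["rose", "tulip"],
    unlabeled=[(28, 72), (18, 79)],
)
print(models)  # [{'rose': 24.0, 'tulip': 6.0}, {'rose': 76.0, 'tulip': 70.0}]
```

The example (28, 72) looks tulip-like by color alone, but the petal-count model is sure it is a rose, so it teaches the color model. For (18, 79) the roles flip: petal count is ambiguous, and the color model does the teaching.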

Graph-based semi-supervised learning

Another way to apply labels to unlabeled data is by utilizing a graph data structure. A graph is a set of nodes (in graph theory we call them “vertices”) which are linked together through “edges.” The cities on a map would be vertices, and the highways linking them would be edges.

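If you put your labeled and unlabeled data on a graph, with edges connecting data points that are similar to each other, you can propagate the labels outward from the labeled nodes along those edges. Intuitively, an unlabeled node ends up with the label of the labeled nodes it is most strongly connected to, that is, the ones it can reach through more (and shorter) paths.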

Imagine that we’ve got our fern and rose images in a graph, together with a bunch of other unlabeled plant images. We can pick one of those unlabeled nodes and count how many short paths lead from it to the “rose” nodes and how many lead to the “fern” nodes. If there are more paths to rose nodes than to fern nodes, we classify the unlabeled node as a “rose”, and vice versa. This gives us a powerful alternative way to algorithmically generate labels for unlabeled data.
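Counting raw paths gets awkward on graphs with cycles, where the number of paths can grow without bound, so practical methods such as label propagation do something closely related: every unlabeled node repeatedly takes the average of its neighbors’ label scores, while labeled nodes keep theirs fixed. Nodes with many short connections to “rose” nodes end up leaning “rose”. Below is a minimal sketch on an unweighted graph; the node names are hypothetical, and scikit-learn offers a full implementation as `sklearn.semi_supervised.LabelPropagation`.

```python
def propagate_labels(edges, seeds, iterations=100):
    """Label propagation on an undirected, unweighted graph.

    edges: list of (u, v) pairs. seeds: dict mapping labeled nodes to their label.
    Returns the predicted label for every unlabeled node.
    """
    neighbors = {}
    for u, v in edges:
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)
    classes = sorted(set(seeds.values()))
    # Every node holds one score per class; labeled nodes start one-hot.
    scores = {n: {c: 0.0 for c in classes} for n in neighbors}
    for n, label in seeds.items():
        scores[n][label] = 1.0
    for _ in range(iterations):
        new_scores = {}
        for n, nbrs in neighbors.items():
            if n in seeds:
                new_scores[n] = scores[n]  # clamp: known labels never change
            else:
                new_scores[n] = {c: sum(scores[m][c] for m in nbrs) / len(nbrs) for c in classes}
        scores = new_scores
    return {n: max(s, key=s.get) for n, s in scores.items() if n not in seeds}

edges = [("rose_1", "u1"), ("rose_2", "u1"), ("u1", "u2"), ("u2", "fern_1")]
seeds = {"rose_1": "rose", "rose_2": "rose", "fern_1": "fern"}
print(propagate_labels(edges, seeds))  # {'u1': 'rose', 'u2': 'fern'}
```

Here u1 touches two rose images directly, so it settles at roughly 80% rose. u2 is one hop from the fern image but two hops from the roses, so it settles at roughly 60% fern.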

Semi-Supervised Learning Examples

The amount of data in the world is increasing at a staggering rate, while the number of human-hours available for labeling it all is increasing at a much less impressive clip. This presents a problem because there’s no end to the places where we want to apply machine learning.

Semi-supervised learning offers a possible solution to this dilemma. Here are a few real-world examples of where it’s being applied.

- Identifying cases of fraud: In finance, semi-supervised learning can be used to train systems for identifying cases of fraud or extortion. Rather than hand-labeling thousands of individual instances, engineers can start with a few labeled examples and proceed with one of the semi-supervised learning approaches described above.

- Classifying content on the web: The internet is a big place, and new websites go up all the time. To serve useful search results, search engines need to classify huge amounts of this web content, and semi-supervised learning can help with that.

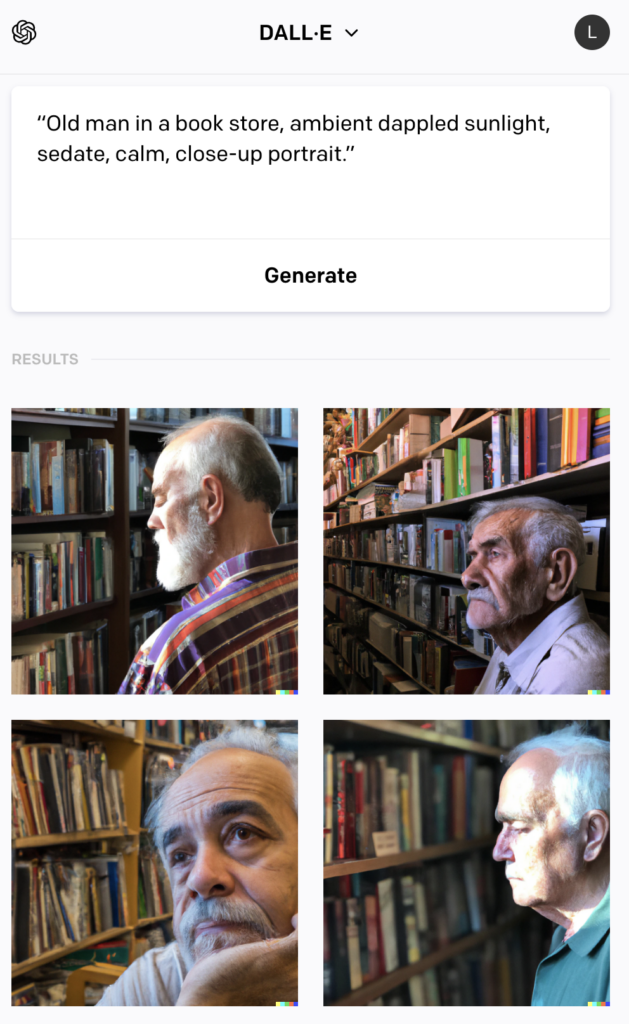

- Analyzing audio and images: This is perhaps the most popular use of semi-supervised learning. Audio and image files are usually generated without labels, which makes them hard to use for supervised machine learning. Starting from a small human-labeled subset, however, semi-supervised learning can put the rest of the files to work.

How Is Semi-Supervised Learning Different From…?

With all the different approaches to machine learning, it can be easy to confuse them. To make sure you fully understand semi-supervised learning, let’s take a moment to distinguish it from similar techniques.

Semi-Supervised Learning vs Self-Supervised Learning

With semi-supervised learning, you train a model on a labeled subset of the data and then use it to label the rest. Self-supervised learning needs no human labels at all: it creates its own training targets from the data by hiding part of each example and asking the model to predict it. For instance, you might show the model the first 80 words of a paragraph and have it predict the remaining 20.

Self-supervised learning is how LLMs like GPT-4 are pretrained: they learn to predict the next token across enormous amounts of unlabeled text.
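To see why no human labeling is involved, here is a minimal sketch of how self-supervised training examples can be carved out of raw text. It splits on whitespace for simplicity (real LLMs work on subword tokens and far longer contexts), and the `context_size` of 3 and the 80% visible fraction are illustrative choices.

```python
def hide_tail(words, visible_fraction=0.8):
    """Split a passage into the part the model sees and the part it must predict."""
    cut = int(len(words) * visible_fraction)
    return words[:cut], words[cut:]

def next_word_pairs(words, context_size=3):
    """Make (context, next word) pairs; the 'label' is simply the word that really comes next."""
    return [(words[i - context_size:i], words[i]) for i in range(context_size, len(words))]

words = "the quick brown fox jumps over the lazy dog".split()

visible, hidden = hide_tail(words)
print(visible, "->", hidden)

for context, target in next_word_pairs(words):
    print(context, "->", target)
```

Every target comes from the text itself, so any pile of unlabeled documents becomes a labeled training set for free.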

Semi-Supervised Learning vs Reinforcement Learning

One interesting subcategory of ML we haven’t discussed yet is reinforcement learning (RL). RL uses the mathematics of sequential decision-making (usually formalized as a Markov Decision Process) to train an agent that learns by trial and error, taking actions in an environment and receiving rewards or penalties for them.

Because an RL agent learns from reward signals rather than from labeled or pseudo-labeled examples, it bears little resemblance to semi-supervised learning, and the two should not be confused.

Semi-Supervised Learning vs Active Learning

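Active learning is closely related to semi-supervised learning, and the two are often used together. The big difference is that, with active learning, the algorithm picks the examples it is least confident about and sends them to a human to label, rather than relying only on its own pseudo-labels.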
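Combining the two ideas, one round might keep high-confidence predictions as pseudo-labels (the semi-supervised part) and queue the least confident ones for a human (the active learning part). The sketch below assumes the model has already produced a label and a confidence per example; the image ids, confidences, threshold, and budget are all hypothetical, and “least confidence” is just one of several common query strategies.

```python
def split_for_review(predictions, threshold=0.9, budget=2):
    """Accept confident predictions as pseudo-labels; queue the least confident for a human.

    predictions maps an example id to (predicted_label, confidence).
    """
    pseudo_labels = {ex: label for ex, (label, conf) in predictions.items() if conf >= threshold}
    uncertain = [ex for ex, (_, conf) in predictions.items() if conf < threshold]
    # Least-confidence sampling: the lowest-confidence examples go to the human first.
    uncertain.sort(key=lambda ex: predictions[ex][1])
    return pseudo_labels, uncertain[:budget]

predictions = {
    "img_01": ("rose", 0.97),
    "img_02": ("tulip", 0.51),
    "img_03": ("fern", 0.88),
    "img_04": ("rose", 0.55),
}
auto, ask_human = split_for_review(predictions)
print(auto)       # {'img_01': 'rose'}
print(ask_human)  # ['img_02', 'img_04']
```

Here img_03 is neither accepted nor sent for review this round; it waits until the model has been retrained on the new human labels.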

When Should You Use Semi-Supervised Learning?

Semi-supervised learning is a way of training ML models when you only have a small amount of labeled data. By training the model on just the labeled subset of data and using it in a clever way to label the rest, you can avoid the difficulty of having a human being label everything.

There are many situations in which semi-supervised learning can help you make use of more of your data. That’s why it has found widespread use in domains as diverse as document classification, fraud detection, and image identification.

If you’re considering ways of using advanced AI systems to take your business to the next level, check out our generative AI resource hub to go even deeper. This technology is changing everything, and if you don’t want to be left behind, set up a time to talk with us.