The release of ChatGPT was one of the first times an extremely powerful AI system was broadly available, and it has ignited a firestorm of controversy and conversation.

Proponents believe current and future AI tools will revolutionize productivity in almost every domain.

Skeptics wonder whether advanced systems like GPT-4 will even end up being all that useful.

And a third group believes they’re the first sparks of artificial general intelligence and could be as transformative for life on Earth as the emergence of Homo sapiens.

Frankly, it’s enough to make a person’s head spin. One of the difficulties in making sense of this rapidly evolving space is that many terms, like “generative AI” and “large language models” (LLMs), are thrown around very casually.

In this piece, our goal is to disambiguate these two terms by explaining how generative AI and large language models differ. Whether you’re pondering deep questions about the nature of machine intelligence, or just trying to decide whether the time is right to use conversational AI in customer-facing applications, this context will help.

Let’s get going!

What Is Generative AI?

Of the two terms, “generative AI” is broader, referring to any machine learning model capable of dynamically creating output after it has been trained.

This ability to generate complex forms of output, like sonnets or code, is what distinguishes generative AI from linear regression, k-means clustering, or other types of machine learning.

Besides being much simpler, these models can only “generate” output in the sense that they can make a prediction on a new data point.

Once a linear regression model has been trained to predict test scores based on the number of hours studied, for example, it can generate a new prediction when you feed it the hours a new student spent studying.

But you couldn’t prompt it to help you brainstorm how these two variables are connected, the way you can with ChatGPT.
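To make the contrast concrete, here is a minimal sketch of the test-score example in plain Python. The data are hypothetical, made-up numbers chosen to lie on a perfect line so the result is easy to check, and the fit uses the standard closed-form least-squares formulas for a single input.

```python
# Ordinary least squares for one feature: fit score = slope * hours + intercept.
def fit_line(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: hours studied and the resulting test scores.
hours = [1, 2, 3, 4, 5]
scores = [55, 60, 65, 70, 75]

slope, intercept = fit_line(hours, scores)

def predict(h):
    """The only thing this model can "generate": a score for a given number of hours."""
    return slope * h + intercept

print(predict(6))  # → 80.0
```

Everything this model knows is two numbers, a slope and an intercept. That is why it can produce a prediction for a new student but has nothing to say about *why* studying and scores are related.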

There are many types of generative AI, so let’s spend a few minutes discussing the major categories: image generation, music generation, code generation, and a few others.

How Is Generative AI Used To Make Images?

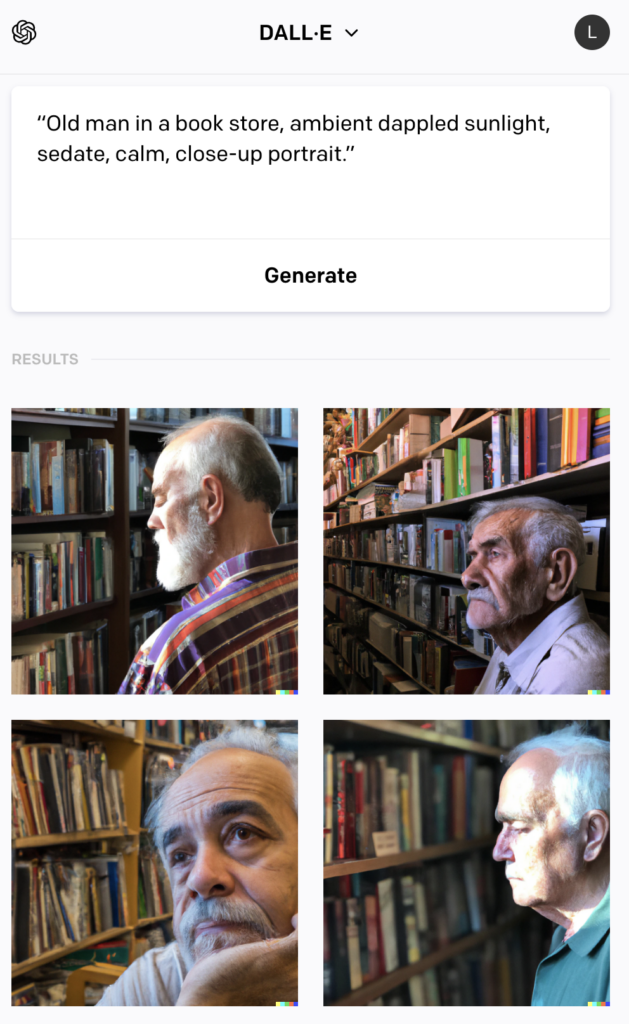

One of the first “wow” moments in generative AI came fairly recently when it was discovered that tools like Midjourney, DALL-E, and Stable Diffusion could create absolutely stunning images based on simple prompts like:

“Old man in a book store, ambient dappled sunlight, sedate, calm, close-up portrait.”

Depending on the wording you use, these images might be whimsical and futuristic, they might look like paintings from world-class artists, or they might look so photo-realistic you’d be convinced they’re about to start talking.

Each of these tools is suited to specific applications. Midjourney seems to be best at capturing different artistic approaches and generating images that accurately capture an aesthetic. DALL-E tends to do better at depicting human figures, including faces and eyes. Stable Diffusion seems to do well at generating highly detailed outputs, capturing subtleties like the way light reflects on a rain-soaked street.

(Note: these are all general impressions; it’s difficult to know how the tools will compare on any specific prompt.)

Broadly, this is known as “image synthesis”. And since we’re talking specifically about making images from text, this sub-domain is known as “text-to-image.”

A variant of this technique is text-to-video (along with the related “text-to-4D,” which generates 3D scenes that move over time), which produces short clips or scenes based on text prompts. While text-to-video is still much more primitive than text-to-image, it is likely to improve quickly if recent progress in AI is any guide.

One interesting wrinkle in this story is that generative algorithms have generated something else along with images and animations: legal battles.

Earlier this year, Getty Images filed a lawsuit against Stability AI, the company behind Stable Diffusion, alleging that it trained its model on millions of images from the Getty collection without getting permission first or compensating Getty in any way.

This has raised many profound questions about data rights, privacy, and how (or whether) people should be paid when their work is used to train a model that might eventually automate them out of a job.

We’re still in the early days of grappling with these issues, but they’re sure to make for fascinating case law in the years ahead.

How Is Generative AI Used To Make Music?

Given how successful advanced models have been in generating text (more on that shortly), it’s only natural to wonder whether similar models could also prove useful in generating music.

This is especially true because, on the surface, text and music share many obvious similarities (both are sequential, for example). It would make sense, therefore, that the technical advances that have allowed coherent text production might also allow for coherent music production.

And they have! There are now a number of different tools, such as MusicLM, which are able to generate fairly high-quality audio tracks from prompts like:

“The main soundtrack of an arcade game. It is fast-paced and upbeat, with a catchy electric guitar riff. The music is repetitive and easy to remember, but with unexpected sounds, like cymbal crashes or drum rolls.”

As with image generation, creating artificial musical tracks in the style of popular artists has already sparked legal controversies. A particularly memorable example occurred just recently when a TikTok user supposedly created an AI-generated collaboration between Drake and The Weeknd, which promptly went viral.

The track was removed from all major streaming services in response to backlash from artists and record labels, but it’s clear that AI music generators are going to change the way art is created in major ways.

How Is Generative AI Used For Coding?

It’s long been the dream of both programmers and non-programmers to simply be able to provide a computer with natural-language instructions (“build me a cool website”) and have the machine handle the rest. It would be hard to overstate the explosion in creativity and productivity this would initiate.

With the advent of code-generation models such as Replit’s Ghostwriter and GitHub Copilot, we’ve taken one more step towards that halcyon world.

As is the case with other generative models, code-generation tools are usually trained on massive amounts of data, after which they’re able to take simple prompts and produce code from them.

You might ask one to write a function that converts between several different coordinate systems, create a web app that measures BMI, or translate from Python to JavaScript.

As things stand now, the code is often incomplete in small ways. For example, a tool might produce a function that accepts an argument it never uses, or one that lacks a return statement. Still, it is remarkable what has already been accomplished.
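To illustrate the kind of small gaps described above, here is a hypothetical draft for the coordinate-conversion prompt, followed by the version a human reviewer might end up with. This is not actual output from Ghostwriter, Copilot, or ChatGPT; the function names and the `degrees` parameter are illustrative assumptions.

```python
import math

# Hypothetical first draft for "convert polar coordinates to Cartesian".
def polar_to_cartesian_draft(r, theta, degrees):
    # Gap 1: the `degrees` argument is accepted but never used.
    x = r * math.cos(theta)
    y = r * math.sin(theta)
    # Gap 2: there is no return statement, so callers silently get None.

# Reviewed version: honours the flag and returns the (x, y) pair.
def polar_to_cartesian(r, theta, degrees=False):
    """Convert polar coordinates (r, theta) to Cartesian (x, y)."""
    if degrees:
        theta = math.radians(theta)
    return r * math.cos(theta), r * math.sin(theta)

print(polar_to_cartesian_draft(1.0, 0.0, False))  # prints None
print(polar_to_cartesian(1.0, 0.0))               # prints (1.0, 0.0)
```

Both versions run without errors, which is exactly why gaps like these are easy to miss without tests or a careful read.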

There are now software developers who are using models like ChatGPT all day long to automate substantial portions of their work, to understand new codebases with which they’re unfamiliar, or to write comments and unit tests.

What Are Large Language Models?

Now that we’ve covered generative AI, let’s turn our attention to large language models (LLMs).

LLMs are a particular type of generative AI.

Unlike MusicLM or DALL-E, LLMs are trained on textual data and then used to output new text, whether that be a sales email or an ongoing dialogue with a customer.

(A technical note: though people are mostly using GPT-4 for text generation, it is an example of a “multimodal” LLM because it has also been trained on images. According to OpenAI’s documentation, image input functionality is currently being tested, and is expected to roll out to the broader public soon.)

What Are Examples of Large Language Models?

By far the most well-known example of an LLM is OpenAI’s “GPT” series, the latest of which is GPT-4. The acronym “GPT” stands for “Generative Pre-trained Transformer”, and it hints at many underlying details about the model.

GPT models are based on the transformer architecture, for example, and they are pre-trained on a huge corpus of textual data taken predominantly from the internet.

GPT, however, is not the only example of an LLM.

The BigScience Large Open-science Open-access Multilingual Language Model, known more commonly by its mercifully short nickname “BLOOM”, was built by more than 1,000 AI researchers as an open-source alternative to GPT.

BLOOM is capable of generating text in almost 50 natural languages and more than a dozen programming languages. Being open-source means that its code and trained model weights are freely available, and no doubt many people will experiment with it in the future.

In February, Google announced Bard, a generative language model built atop its Language Model for Dialogue Applications (LaMDA) transformer technology, and opened it to early public access in March.

As with ChatGPT, Bard is able to work across a wide variety of domains, offering help with planning baby showers, explaining scientific concepts to children, or helping you make lunch based on what you already have in your fridge.

How Are Large Language Models Trained?

A full discussion of how large language models are trained is beyond the scope of this piece, but it’s easy enough to get a high-level view of the process. In essence, an LLM like GPT-4 is fed a huge amount of textual data from the internet. It then samples this dataset and learns to predict what words will follow given what words it has already seen.

At first, its performance will be terrible, but over time it will learn that a sentence like “I sat down on the _____” probably ends with a word like “floor” or “chair”, and probably not a word like “cactus” (at least, we hope you’re not sitting down on a cactus!)
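The core idea, predicting the next word from the words that came before, can be sketched in a few lines. This is a deliberately simplified stand-in: real LLMs like GPT-4 learn these predictions with a transformer neural network trained by gradient descent over subword tokens, whereas the sketch below swaps in simple bigram counts (how often one word follows another) over a tiny, made-up corpus. The function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the counts observed after `prev` into probabilities."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: n / total for word, n in following.items()}

# A tiny, made-up training corpus; punctuation is split off as its own "word".
corpus = (
    "i sat down on the chair . "
    "she sat down on the floor . "
    "he sat down on the chair ."
)

counts = train_bigrams(corpus)
probs = next_word_probs(counts, "the")
print(max(probs, key=probs.get))  # prints chair
print(probs.get("cactus", 0.0))   # prints 0.0
```

Even this toy version shows why scale matters: it can only assign probability to continuations it has actually seen, so a word like “cactus” never comes up. A neural network trained on a huge dataset generalizes far beyond simple counting.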

When a model has been trained for long enough on a large enough dataset, you get the remarkable performance seen with tools like ChatGPT.

Is ChatGPT A Large Language Model?

Speaking of ChatGPT, you might be wondering whether it’s a large language model. ChatGPT is a special-purpose application built on top of a model from OpenAI’s GPT-3.5 series, a refinement of the GPT-3 large language model. That model was fine-tuned, using reinforcement learning from human feedback (RLHF), to be especially good at conversational dialogue, and the result is ChatGPT.

Are All Large Language Models Generative AI?

Yes. To the best of our knowledge, all existing large language models are generative AI. “Generative AI” is an umbrella term for algorithms that generate novel output, and today’s LLMs are built for exactly that purpose.

Utilizing Generative AI In Your Business

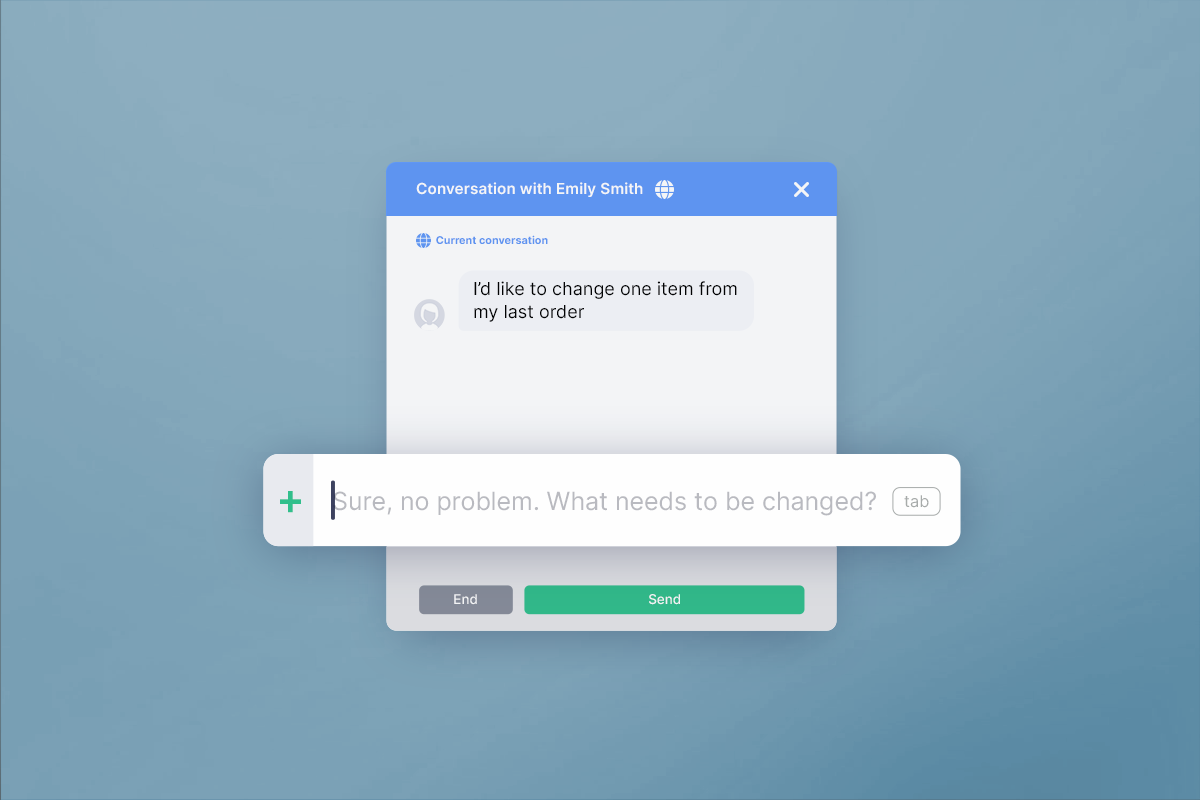

Though truly powerful generative AI language models are less than a year old, they’re already being integrated into numerous business applications. Quiq Compose, for example, is able to study past interactions with customers to better tailor its future conversations to their particular needs.

From generating fake viral rap songs to generating photos that are hard to distinguish from real life, these powerful tools have already proven that they can dramatically speed up marketing, software development, and many other crucial business functions.

If you’re an enterprise wondering how you can use advanced AI technologies such as generative AI language models for applications like customer service, schedule a demo to see what the Quiq platform can offer you!