Key Takeaways

- AI performance starts with evaluation. Metrics and human insight work together to keep models accurate, reliable, and bias-free.

- Use the right tools for the job. Regression relies on MSE or RMSE; classification leans on accuracy, precision, and recall.

- Generative AI needs extra care. Scores like BLEU and BERTScore help, but human review ensures outputs sound natural and on-brand.

- Trust is built through testing. Continuous evaluation keeps AI aligned with real-world performance and customer expectations.

Machine learning is an incredibly powerful technology. That’s why it’s being used in everything from autonomous vehicles to medical diagnoses to the sophisticated, dynamic AI Assistants that are handling customer interactions in modern contact centers.

But for all this, it isn’t magic. The engineers who build these systems must know a great deal about how to evaluate them. How do you know when a model is performing as expected, or when it has begun to overfit the data? How can you tell when one model is better than another?

That’s where AI model evaluation comes in. At its core, AI model evaluation is the process of systematically measuring and assessing an AI system’s performance, accuracy, reliability, and fairness. This includes using quantitative metrics (like accuracy or BLEU), testing with unseen data, and incorporating human review to check for issues such as bias or coherence. It’s a critical step for determining a model’s readiness for real-world deployment, ensuring trustworthiness, and guiding continuous improvement.

This subject will be our focus today. We’ll cover the basics of evaluating a machine learning model with metrics like mean squared error and accuracy, then turn our attention to the more specialized task of evaluating the generated text of a large language model like ChatGPT.

How Do You Measure the Performance of a Machine Learning Model?

A machine learning model is always aimed at some task. It might be predicting sales, grouping topics, or generating text.

How do you know when the model has found the best-fitting line or discovered the best way to cluster documents?

To answer that, evaluation must assess multiple dimensions: performance (does it predict accurately?), weaknesses (does it overfit, or does it generalize to unseen data?), trustworthiness (can it be explained and trusted?), and fairness (is it biased toward certain groups?). Together, these components give a complete picture of model quality.

In the next few sections, we’ll talk about a few common ways of evaluating the performance of a machine learning model. If you’re an engineer, this will help you create better models yourself, and if you’re a layperson, it’ll help you better understand how the machine learning pipeline works.

Evaluation Metrics for Regression Models

Regression is one of the two big types of basic machine learning, with the other being classification.

In tech-speak, we say that the purpose of a regression model is to learn a function that maps a set of input features to a real value (where “real” just means “real numbers”). This is not as scary as it sounds; you might try to create a regression model that predicts the number of sales you can expect given that you’ve spent a certain amount on advertising, or you might try to predict how long a person will live on the basis of their daily exercise, water intake, and diet.

In each case, you’ve got a set of input features (advertising spend or daily habits), and you’re trying to predict a target variable (sales, life expectancy).

The relationship between the two is captured by a model, and a model’s quality is evaluated with a metric. Popular metrics for regression models include the mean squared error, the root mean squared error, and the mean absolute error (though there are plenty of others if you feel like going down a nerdy rabbit hole).

Here is how the three differ. Mean Squared Error (MSE) averages the squared errors, so large misses are penalized heavily. Root Mean Squared Error (RMSE) is the square root of MSE, which puts the error back into the target’s original units. Mean Absolute Error (MAE) averages the absolute errors, which makes it less sensitive to outliers than MSE or RMSE.
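To make the three regression metrics concrete, here is a minimal sketch in plain Python. The sales figures are made up for illustration, and the function names are our own rather than any particular library's API.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def root_mean_squared_error(y_true, y_pred):
    """Square root of MSE, expressed in the same units as the target."""
    return math.sqrt(mean_squared_error(y_true, y_pred))

def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical data: actual vs. predicted sales for four campaigns.
actual_sales = [100.0, 150.0, 200.0, 250.0]
predicted_sales = [110.0, 140.0, 200.0, 290.0]

mse = mean_squared_error(actual_sales, predicted_sales)        # 450.0
rmse = root_mean_squared_error(actual_sales, predicted_sales)  # about 21.2
mae = mean_absolute_error(actual_sales, predicted_sales)       # 15.0

print(f"MSE={mse:.1f}  RMSE={rmse:.2f}  MAE={mae:.1f}")
```

Notice that the single large miss (40 units on the last campaign) accounts for most of the MSE, while the MAE treats it in proportion to its size.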

Evaluation Metrics for Classification Models

People tend to struggle less with understanding classification models because they’re more intuitive: you’re building something that can take a data point (the price of an item) and sort it into one of a number of different categories (e.g., “cheap”, “somewhat expensive”, “expensive”, “very expensive”).

Regardless, it’s just as essential to evaluate the performance of a classification model as it is to evaluate the performance of a regression model. Some common evaluation metrics for classification models are accuracy, precision, and recall.

Accuracy is simple, and it’s exactly what it sounds like. You find the accuracy of a classification model by dividing the number of correct predictions it made by the total number of predictions it made altogether. If your classification model made 1,000 predictions and got 941 of them right, that’s an accuracy rate of 94.1% (not bad!).

Both precision and recall are subtler variants of this same idea. The precision is the number of true positives (correct positive classifications) divided by the sum of true positives and false positives (incorrect positive classifications). It says, in effect, “When your model thought it had identified a needle in a haystack, this is how often it was correct.”

The recall is the number of true positives divided by the sum of true positives and false negatives (incorrect negative classifications). It says, in effect, “There were 200 needles in this haystack, and your model found 72% of them.”

Accuracy tells you how well your model performed overall, precision tells you how confident you can be in its positive classifications, and recall tells you what share of the actual positive cases it found.
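Here is a small sketch of how these three numbers fall out of the same set of predictions. The labels are invented for illustration (1 means "needle", 0 means "hay"), and the helper names are our own.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives, true negatives."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def accuracy(tp, fp, fn, tn):
    """Correct predictions divided by all predictions."""
    return (tp + tn) / (tp + fp + fn + tn)

def precision(tp, fp):
    """Of everything flagged as positive, the share that really was positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Of everything that really was positive, the share the model found."""
    return tp / (tp + fn) if (tp + fn) else 0.0

# Hypothetical labels: four real needles among ten items.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
acc = accuracy(tp, fp, fn, tn)  # 7 of 10 correct
prec = precision(tp, fp)        # 2 of 3 flagged items were needles
rec = recall(tp, fn)            # 2 of 4 needles were found

print(f"accuracy={acc:.2f}  precision={prec:.2f}  recall={rec:.2f}")
```

The same model looks quite different depending on the metric: 70% accuracy, but it only found half of the needles.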

How Can I Assess the Performance of a Generative AI Model?

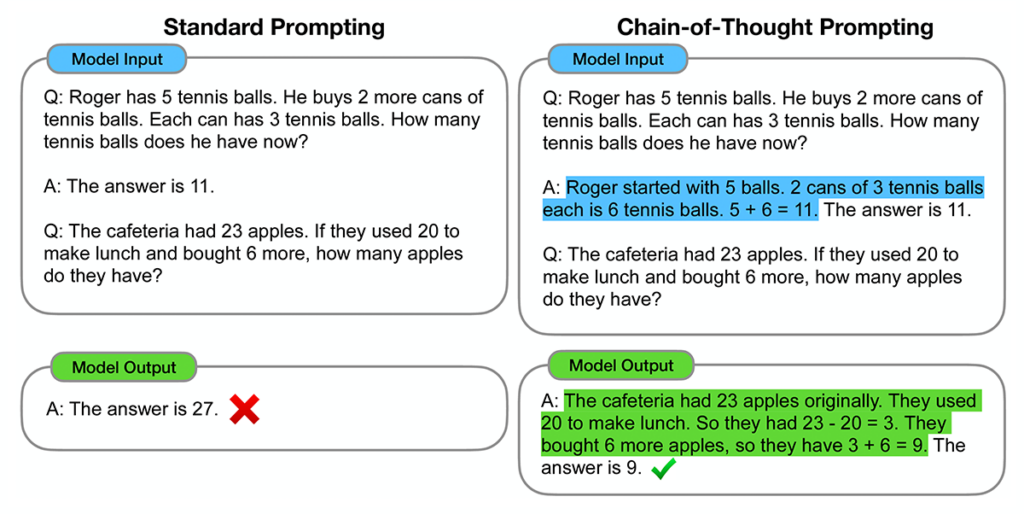

Now, we arrive at the center of this article. Everything up to now has been background context that hopefully has given you a feel for how models are evaluated, because from here on out it’s a bit more abstract.

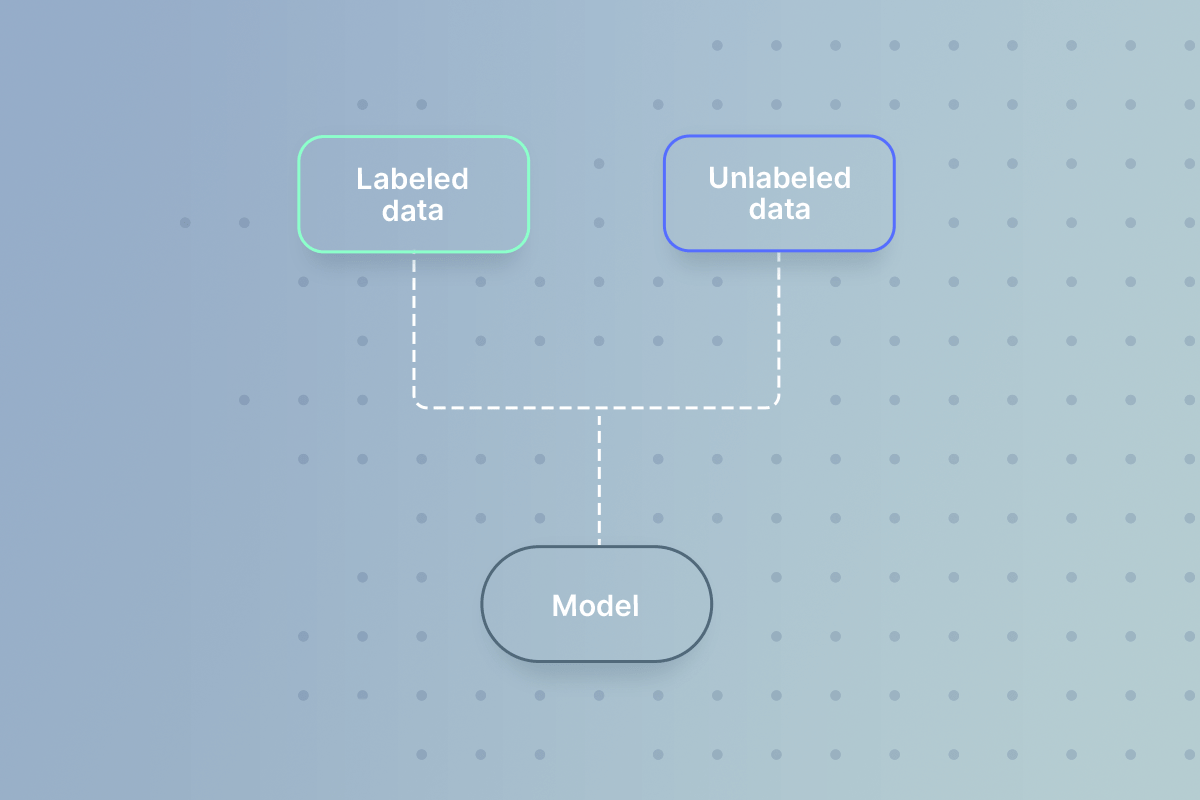

Using Reference Text for Evaluating Generative Models

When we wanted to evaluate a regression model, we started by looking at how far its predictions were from actual data points.

Well, we do essentially the same thing with generative language models. To assess the quality of text generated by a model, we’ll compare it against high-quality text that’s been selected by domain experts.

The Bilingual Evaluation Understudy (BLEU) Score

The BLEU score can be used to actually quantify the distance between the generated and reference text. It does this by comparing the amount of overlap in the n-grams [1] between the two using a series of weighted precision scores.

The BLEU score varies from 0 to 1. A score of “0” indicates that there is no n-gram overlap between the generated and reference text, and the model’s output is considered to be of low quality. A score of “1”, conversely, indicates that there is total overlap between the generated and reference text, and the model’s output is considered to be of high quality.

Comparing BLEU scores across different sets of reference texts or different natural languages is so tricky that it’s considered best to avoid it altogether.

Also, be aware that the BLEU score contains a “brevity penalty” which discourages the model from being too concise. If the model’s output is much shorter than the reference text, this counts as a strike against it.
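To show how n-gram precision and the brevity penalty fit together, here is a simplified, sentence-level sketch of a BLEU-style score. It is not a reference implementation: it assumes a single reference text, whitespace tokenization, equal weights, and n-grams only up to length 2 (BLEU is commonly computed with n-grams up to length 4 over a whole corpus). The function names and example sentences are our own.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-token sequences in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def clipped_precision(candidate, reference, n):
    """Share of candidate n-grams that also appear in the reference.
    Each n-gram is credited at most as often as it occurs in the reference."""
    cand_counts = Counter(ngrams(candidate, n))
    ref_counts = Counter(ngrams(reference, n))
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
    return matched / total

def simple_bleu(candidate, reference, max_n=2):
    """Geometric mean of 1..max_n clipped precisions, times a brevity penalty."""
    precisions = [clipped_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    geometric_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c >= r else math.exp(1 - r / c)
    return brevity_penalty * geometric_mean

reference = "the cat sat on the mat".split()

identical = simple_bleu("the cat sat on the mat".split(), reference)
no_overlap = simple_bleu("a dog barked loudly".split(), reference)
too_short = simple_bleu("the cat sat".split(), reference)

print(identical, no_overlap, round(too_short, 3))
```

The third case is the interesting one. Every word and word pair in “the cat sat” appears in the reference, so its precision is perfect, yet the brevity penalty drags the score well below 1 because the output is only half as long as the reference.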

The Recall-Oriented Understudy for Gisting Evaluation (ROUGE) Score

Like the BLEU score, the ROUGE score examines the n-gram overlap between an output text and a reference text. Unlike the BLEU score, however, it uses recall instead of precision.

There are three types of ROUGE scores:

- ROUGE-N: ROUGE-N is the most common type of ROUGE score, and it simply looks at n-gram overlap, as described above.

- ROUGE-L: ROUGE-L looks at the “Longest Common Subsequence” (LCS), or the longest chain of tokens that the reference and output text share in the same order, even if other tokens sit between them. The longer the LCS, of course, the more the two have in common.

- ROUGE-S: This is the least commonly used variant of the ROUGE score, but it’s worth hearing about. ROUGE-S concentrates on the “skip-grams” [2] that the two texts have in common. ROUGE-S would count “He bought the house” and “He bought the blue house” as overlapping because they have the same words in the same order, despite the fact that the second sentence does have an additional adjective.
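The first two variants in the list above can be sketched in a few lines. This is a simplified illustration rather than an official implementation: it computes only the recall side, uses whitespace tokenization, and assumes a single reference. We also arbitrarily treat “He bought the blue house” as the reference, since the example above doesn't say which sentence is which.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-token sequences in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def rouge_n_recall(candidate, reference, n=1):
    """Share of the reference's n-grams that also appear in the candidate."""
    ref_counts = Counter(ngrams(reference, n))
    cand_counts = Counter(ngrams(candidate, n))
    total = sum(ref_counts.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, cand_counts[gram]) for gram, count in ref_counts.items())
    return matched / total

def lcs_length(a, b):
    """Length of the longest common subsequence, via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_recall(candidate, reference):
    """LCS length divided by the reference length."""
    if not reference:
        return 0.0
    return lcs_length(candidate, reference) / len(reference)

reference = "He bought the blue house".split()  # assumed to be the reference
candidate = "He bought the house".split()

rouge_1 = rouge_n_recall(candidate, reference, n=1)  # 4 of 5 reference words
rouge_2 = rouge_n_recall(candidate, reference, n=2)  # 2 of 4 reference bigrams
rouge_l = rouge_l_recall(candidate, reference)       # LCS of 4 over 5 words

print(rouge_1, rouge_2, rouge_l)
```

The missing adjective breaks two of the four reference bigrams, so the 2-gram score drops sharply, while the LCS-based score simply skips over the gap.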

The Metric for Evaluation of Translation with Explicit Ordering (METEOR) Score

The METEOR score takes the harmonic mean of the precision and recall scores for 1-gram overlap between the output and reference text. It puts more weight on recall than on precision, and it’s intended to address some of the deficiencies of the BLEU and ROUGE scores while maintaining a pretty close match to how expert humans assess the quality of model-generated output.
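Here is a rough sketch of just the recall-weighted harmonic mean at the heart of METEOR. It leaves out a lot: the full metric also matches stems and synonyms and applies a penalty for fragmented word order, none of which is modeled here. The 9-to-1 recall weighting follows the original METEOR formulation as we understand it; treat it, the function names, and the example sentences as assumptions.

```python
from collections import Counter

def unigram_precision_recall(candidate, reference):
    """Exact-match unigram precision and recall between two token lists."""
    overlap = sum((Counter(candidate) & Counter(reference)).values())
    precision = overlap / len(candidate) if candidate else 0.0
    recall = overlap / len(reference) if reference else 0.0
    return precision, recall

def recall_weighted_f_mean(precision, recall, recall_weight=9.0):
    """Weighted harmonic mean; recall_weight=1 gives the ordinary F1 score."""
    if precision == 0 or recall == 0:
        return 0.0
    return (1 + recall_weight) * precision * recall / (recall + recall_weight * precision)

reference = "He bought the blue house".split()
candidate = "He bought the house".split()

p, r = unigram_precision_recall(candidate, reference)  # 1.0 and 0.8
f_mean = recall_weighted_f_mean(p, r)                  # pulled toward recall
f1 = recall_weighted_f_mean(p, r, recall_weight=1.0)   # ordinary F1 for comparison

print(round(f_mean, 3), round(f1, 3))
```

Because recall carries most of the weight, the score lands close to the recall of 0.8 rather than midway between precision and recall.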

BERTScore

At this point, it may have occurred to you to wonder whether the BLEU and ROUGE scores are actually doing a good job of evaluating the performance of a generative language model. They look at exact n-gram overlaps, and most of the time, we don’t really care that the model’s output is exactly the same as the reference text; it needs to be at least as good, without having to be the same.

BERTScore is meant to address this concern through contextual embeddings. By looking at the embeddings behind the sentences and comparing those, BERTScore is able to see that “He quickly ate the treats” and “He rapidly consumed the goodies” are expressing basically the same idea, while both the BLEU and ROUGE scores would give the pair little credit because only “He” and “the” match exactly.
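The matching idea can be illustrated with a toy example. Be warned that the word vectors below are hand-made for this sketch and do not come from BERT or any real model; a real BERTScore computation would use contextual embeddings produced by a pretrained language model. What the sketch does show is the general mechanism: pair each token with its most similar counterpart by cosine similarity, then average.

```python
import math

# Made-up 5-dimensional vectors; synonyms are deliberately placed close together.
TOY_EMBEDDINGS = {
    "he":       [1.0, 0.0, 0.0, 0.0, 0.0],
    "the":      [0.0, 0.0, 0.0, 0.0, 1.0],
    "quickly":  [0.0, 1.0, 0.0, 0.0, 0.1],
    "rapidly":  [0.0, 1.0, 0.0, 0.0, 0.2],
    "ate":      [0.0, 0.0, 1.0, 0.0, 0.1],
    "consumed": [0.0, 0.0, 1.0, 0.0, 0.3],
    "treats":   [0.0, 0.0, 0.0, 1.0, 0.1],
    "goodies":  [0.0, 0.0, 0.0, 1.0, 0.2],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def greedy_match_f1(candidate, reference):
    """Match every token to its most similar token on the other side, then
    combine the averaged similarities into precision, recall, and F1."""
    cand = [TOY_EMBEDDINGS[t] for t in candidate]
    ref = [TOY_EMBEDDINGS[t] for t in reference]
    precision = sum(max(cosine(c, r) for r in ref) for c in cand) / len(cand)
    recall = sum(max(cosine(r, c) for c in cand) for r in ref) / len(ref)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

reference = "he quickly ate the treats".split()
candidate = "he rapidly consumed the goodies".split()

embedding_f1 = greedy_match_f1(candidate, reference)
exact_overlap = len(set(candidate) & set(reference)) / len(reference)

print(f"embedding-based F1: {embedding_f1:.3f}")
print(f"exact word overlap: {exact_overlap:.1f}")
```

With these toy vectors, the embedding-based score comes out close to 1 even though only two of the five words match exactly, which is the gap that exact n-gram metrics cannot close.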

Why AI Model Evaluation is Critical

Agentic AI is redefining how businesses operate – automating reasoning, decision-making, and task execution across fields like engineering and CX. But with that autonomy comes risk. Every AI agent must be carefully evaluated, monitored, and fine-tuned to ensure it performs reliably and aligns with your brand’s goals. Otherwise, even a small model error can compound into major consequences for your brand.

If you’re enchanted by the potential of using agentic AI in your contact center but are daunted by the challenge of putting together an engineering team, reach out to us for a demo of the Quiq agentic AI platform. We can help you put this cutting-edge technology to work without having to worry about all the finer details and resourcing issues.

***

Footnotes

[1] An n-gram is just a contiguous sequence of n items, which can be characters or words. In this article the items are words: a 1-gram is a single word, a 2-gram is two consecutive words, etc.

[2] Skip-grams are a rather involved subdomain of natural language processing. You can read more about them in this article, but frankly, most of the detail isn’t needed here. All you need to know is that the ROUGE-S score is set up to be less concerned with exact n-gram overlaps than the alternatives.

Frequently Asked Questions (FAQs)

What does AI model evaluation mean?

It’s how teams measure whether an AI system is performing as intended and whether it is accurate, fair, and ready for real-world use.

Why does AI model evaluation matter?

Evaluation exposes blind spots early and helps build confidence that the model can be trusted with customer-facing tasks.

How are generative models evaluated?

Metrics like BLEU, ROUGE, and BERTScore gauge quality, while human reviewers check tone, clarity, and usefulness.

Can metrics replace human judgment?

Not yet. Automated scores quantify performance, but humans still define what “good” sounds like.

How do I know if my model is ready?

When it performs consistently across test data, aligns with business goals, and earns trust through transparent evaluation.