Voice AI has come a long way from its humble beginnings, evolving into a powerful tool that’s reshaping customer service. In this blog, we’ll explore how Voice AI has grown to address its early limitations, delivering impactful changes that CX leaders can no longer ignore. Learn how these advancements create better customer experiences, and why staying informed is essential to staying competitive.

The Voice AI Journey

Customer expectations have evolved rapidly, demanding faster and more personalized service. Over the years, voice interactions have transformed from rigid, rules-based voice chatbots to today’s sophisticated AI-driven solutions. For CX leaders, Voice AI has emerged as a crucial tool for driving service quality, streamlining operations, and meeting customer needs more effectively.

Key Concepts

Before diving into this topic, readers, especially CX leaders, should be familiar with a few key terms to better understand the technology and its impact. This is not a comprehensive list, but it should clarify the terminology and identify the key aspects that have contributed to this evolution.

Speech-enabled systems vs. chatbots vs. AI agents

- Speech-enabled systems: Speech-enabled systems are basic tools that convert spoken language into text, but do not include advanced features like contextual understanding or decision-making capabilities.

- Chatbots: Chatbots are systems that interact with users through text, answering questions, and completing tasks using either set rules or AI to understand user inputs.

- AI agents: AI agents are smart conversational systems that help with complex tasks, learn from interactions, and adjust their responses to offer more personalized and relevant assistance over time.

Rules-based (previous generation) vs. Large Language Models or LLMs (next generation)

- Previous gen: Lacks adaptability, struggles with natural language nuances, and fails to offer a personalized experience.

- Next-gen (LLM-based): Uses LLMs to understand intent, generate responses, and evolve based on context, improving accuracy and depth of interaction.

Agent Escalation: A process in which the Voice AI system hands off the conversation to a human agent, often seamlessly.

AI Agent: A software program that autonomously performs tasks, makes decisions, and interacts with users or systems using artificial intelligence. It can learn and adapt over time to improve its performance, commonly used in customer service, automation, and data analysis.

Depending on their purpose, AI agents can be customer-facing or assist human agents by providing intelligent support during interactions. They function based on algorithms, machine learning, and natural language processing to analyze inputs, predict outcomes, and respond in real-time.

Automated Speech Recognition (ASR): The technology that enables machines to understand and process human speech. It’s a core component of Voice AI systems, helping them identify spoken words accurately.

Context Awareness: Voice AI’s ability to remember previous interactions or conversations, allowing it to maintain a flow of dialogue and provide relevant, contextually appropriate responses.

Conversational AI: Conversational AI refers to technologies that allow machines to interact naturally with users through text or speech, using tools like LLMs, NLU, speech recognition, and context awareness.

Conversation Flow: The logical structure of a conversation, including how the Voice AI chatbot guides interactions, asks follow-up questions, and handles different branches of user input.

Generative AI: A type of artificial intelligence that creates new content, such as text, images, audio, or video, by learning patterns from existing data. It uses advanced models, like LLMs, to generate outputs that resemble human-made content. Generative AI is commonly used in creative fields, automation, and problem-solving, producing original results based on the data it has been trained on.

Intent Recognition: The process by which a Voice AI system identifies the user’s goal or purpose behind their speech input. Understanding intent is critical to delivering appropriate and relevant responses.

LLMs: LLMs are sophisticated machine learning systems trained on extensive text data, enabling them to understand context, generate nuanced responses, and adapt to the conversational flow dynamically.

Machine Learning (ML): A type of AI that allows systems to automatically learn and improve from experience without being explicitly programmed. ML helps voice AI chatbots adapt and improve their responses based on user interactions.

Multimodal: The ability of a system or platform to support multiple modes of communication, allowing customers and agents to interact seamlessly across various channels.

Multi-Turn Conversations: This refers to the ability of Voice AI systems to engage in extended dialogues with users across multiple steps. Unlike simple one-question, one-response setups, multi-turn conversations handle complex interactions.

Natural Language Processing (NLP): A branch of AI that helps computers understand and interpret human language. It is the key technology behind voice and text-based AI interactions.

Omnichannel Experience: A customer experience that integrates multiple channels (such as voice, text, and chat) into one unified system, allowing customers to transition seamlessly between them.

Rules-based approach: This approach uses predefined scripts and decision trees to respond to user inputs. These systems are rigid, with limited conversational abilities, and struggle to handle complex or unexpected interactions, leading to a less flexible and often frustrating user experience.

Sentiment Analysis: A feature of AI that interprets the emotional tone of a user’s input. Sentiment analysis helps Voice AI determine the customer’s mood (e.g., frustrated or satisfied) and tailor responses accordingly.

Speech Recognition / Speech-to-Text (STT): Speech Recognition, or Speech-to-Text (STT), converts spoken language into text, allowing the system to process it. It’s a key step in making voice-based AI interactions possible.

Text-to-Speech (TTS): The opposite of STT, TTS refers to the process of converting text data into spoken language, allowing digital solutions to “speak” responses back to users in natural language.

Voice AI: Voice AI is a technology that uses artificial intelligence to understand and respond to spoken language, allowing machines to have more natural and intuitive conversations with people.

Voice User Interface (VUI): Voice User Interface (VUI) is the system that enables voice-based interactions between users and machines, determining how naturally and effectively users can communicate with Voice AI systems.

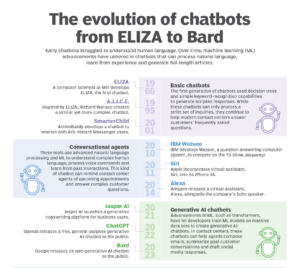

The humble beginnings of rules-based voice systems

Voice AI has been nearly 20 years in the making, starting with basic rules-based systems that followed predefined scripts. These early systems could automate simple tasks, but if customers asked anything outside the programmed flow, the system fell short. It couldn’t handle natural language or adapt to the unexpected, leading to frustration for both customers and CX teams.

For CX leaders, these systems posed more challenges than solutions. Robotic interactions often required human intervention, negating the efficiency benefits. It became clear that something more flexible and intelligent was needed to truly transform customer service.
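To make that rigidity concrete, here is a minimal sketch of how a scripted system might match customer input. It is an illustration only: the `SCRIPT` table, the `FALLBACK` message, and the `rules_based_reply` function are hypothetical names invented for this example, not taken from any real product.

```python
# Hypothetical script: each exact phrase maps to one fixed reply.
SCRIPT = {
    "check balance": "Your balance is available in the account menu.",
    "store hours": "We are open 9am to 5pm, Monday to Friday.",
}

# Anything outside the script ends in a handoff to a human.
FALLBACK = "Sorry, I didn't understand. Transferring you to an agent."


def rules_based_reply(utterance: str) -> str:
    """Return the scripted reply for an exact phrase match, else the fallback."""
    key = utterance.strip().lower()
    return SCRIPT.get(key, FALLBACK)
```

A customer who says "Store hours" gets the scripted answer, while one who asks "When do you close today?" falls straight through to the fallback, even though both want the same thing.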

The rise of AI and speech-enabled systems

As businesses encountered the limitations of rules-based systems, the next chapter in the evolution of Voice AI introduced speech-enabled systems. These systems were a step forward, as they allowed customers to interact more naturally with technology by transcribing spoken language into text. However, while accurate speech-to-text conversion solved one issue, these systems still struggled with a critical challenge: they couldn’t grasp the underlying meaning or the sentiment behind the words.

This gap led to the emergence of the first generation of AI, which represented a significant improvement over simple chatbots. These systems were more helpful for customer interactions, but they still fell short of the seamless, human-like conversations that CX leaders envisioned. While customers could speak to AI-powered systems, the experience was often inconsistent, especially when dealing with complex queries. The advancement of AI was another improvement, but it was still limited by the rules-based logic it evolved from.

The challenge stemmed from the inherent complexity of language. People express themselves in diverse ways, using different accents, phrasing, and expressions. Language rarely follows a single, rigid pattern, which made it difficult for early speech systems to interpret accurately.

These AI systems were a huge leap in progress and created hope for CX leaders. Intelligent systems that can adapt and respond to users’ speech were powerful, but not enough to make a full transformation in the CX world.

The AI revolution: From rules-based to next-gen LLMs

The real breakthrough came with the rise of LLMs. Unlike rigid rules-based systems, LLMs use neural networks to understand context and intent, creating truly natural, fluid, human-like conversations. Now, AI could respond intelligently, adapt to the flow of interaction, and provide accurate answers.

For CX leaders, this was a pivotal moment. No more frustrating dead ends or rigid scripts—Voice AI became a tool that could offer context-aware services, helping businesses cut costs while enhancing customer satisfaction. The ability to deliver meaningful, efficient service marked a turning point in customer engagement.

What makes Voice AI work today?

Today’s Voice AI systems combine several advanced technologies:

- Speech-to-Text (STT): Converts spoken language into text with high accuracy.

- AI Intelligence: Powered by natural language understanding (NLU) and LLMs, the AI deciphers customer intent and delivers contextually relevant responses.

- Text-to-Speech (TTS): Translates the AI’s output back into natural-sounding speech for smooth and realistic communication.

These technologies work together to enable smarter, faster service, reduce the load on human agents, and provide an intuitive customer experience.
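For readers who like to see the moving parts, the three stages above can be sketched as a simple chain. This is a rough illustration under stated assumptions, not how any particular vendor builds it: `VoicePipeline` and the `fake_*` functions are hypothetical stand-ins, and a real system would call actual speech and language services in their place.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains three stages: speech-to-text, response generation, text-to-speech."""
    stt: Callable[[bytes], str]      # audio in, transcript out
    respond: Callable[[str], str]    # transcript in, reply text out
    tts: Callable[[str], bytes]      # reply text in, audio out

    def handle_turn(self, audio_in: bytes) -> bytes:
        text = self.stt(audio_in)
        reply = self.respond(text)
        return self.tts(reply)


# Stand-in stages for illustration only (assumptions, not real services).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already words


def fake_respond(text: str) -> str:
    # A real system would use NLU and an LLM here; this is a keyword check.
    if "order" in text.lower():
        return "I can help with your order."
    return "Could you tell me more?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the encoded text is synthesized audio


pipeline = VoicePipeline(stt=fake_stt, respond=fake_respond, tts=fake_tts)
```

Because each stage is just a function passed in, any one of them could be swapped for a better component without touching the other two.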

The transformation: What changed with next-gen Voice AI?

With advancements in NLP, ML, and omnichannel integration, Voice AI has evolved into a dynamic, intelligent system capable of delivering personalized, empathetic responses. Machine Learning ensures that the system learns from every interaction, continuously improving its performance. Omnichannel integration allows Voice AI to operate seamlessly across multiple platforms, providing a unified customer experience. This is crucial for the transformation of customer service.

Rather than simply enhancing voice interactions, omnichannel solutions select the best communication channel within the same interaction, ensuring customers receive a complete answer and any documentation needed to resolve their issue, for example via email or SMS.

For CX leaders, this transformation enables them to offer real-time, personalized service, with fewer human touchpoints and greater customer satisfaction.

The four big benefits of next-gen Voice AI for CX leaders

The shift from previous-gen Voice AI chatbots to next-gen Voice AI offers CX leaders powerful benefits, transforming how they manage customer interactions. These advancements not only enhance the customer experience, but also streamline operations and improve business efficiency.

1. Enhanced customer experience

With faster, more accurate, and context-aware responses, Voice AI can handle complex queries with ease. Customers no longer face frustrating dead ends or robotic answers. Instead, they get intelligent, conversational interactions that leave them feeling heard and understood.

2. 24/7 availability

Voice AI is always on, providing customers with support at any time, day or night. Whether it’s handling routine inquiries or resolving issues, Voice AI ensures customers are never left waiting for help. This around-the-clock service not only boosts customer satisfaction, but also reduces the strain on human agents.

3. Operational efficiency

By automating high volumes of customer interactions, Voice AI significantly reduces human intervention, cutting costs. Agents can focus on more complex tasks, while Voice AI handles repetitive, time-consuming queries—making customer service teams more productive and focused.

4. Personalization at scale

By learning from each interaction, the system can continuously improve and deliver tailored responses to individual customers, offering a more personalized experience for every user. This level of personalization, once achievable only through human agents, is now possible on a much larger scale.

However, while machine learning plays a critical role in making these advancements possible, it is not a “magical” solution. The improvements happen over time, as the system processes more data and refines its understanding. Although this may sound simplified, the gradual and ongoing development of machine learning can indeed lead to highly effective and powerful outcomes in the long run.

The future of Voice AI: Next-gen experience in action

Voice AI’s future is already here, and it’s evolving faster than ever. Today’s systems are almost indistinguishable from human interactions, with conversations flowing naturally and seamlessly. But the leap forward doesn’t stop at just sounding more human—Voice AI is becoming smarter and more intuitive, capable of anticipating customer needs before they even ask. With AI-driven predictions, Voice AI can now suggest solutions, recommend next steps, and provide highly relevant information, all in real time.

Imagine a world where Voice AI understands a customer’s speech and then anticipates what is needed next. Whether it’s guiding them through a purchase, solving a complex issue, or offering personalized recommendations, technology is moving toward a future where customer interactions are smooth, proactive, and entirely customer-centric.

For CX leaders, this opens up incredible opportunities to stay ahead of customer expectations. Those adopting next-gen Voice AI now are leading the charge in customer service innovation, offering cutting-edge experiences that set them apart from competitors. And as this technology continues to evolve, it will only get more powerful, more intuitive, and more essential for delivering world-class service.

The new CX frontier with Voice AI

As Voice AI continues to evolve from the simple Voice AI chatbot of yesteryear, we are entering a new frontier in customer experience. What started as a rigid, rules-based system has transformed into a dynamic, intelligent agent capable of revolutionizing how businesses engage with their customers. For CX leaders, this new era means greater personalization, enhanced efficiency, and the ability to meet customers where they are—whether it’s through voice, chat, or other digital channels.

We’ve made significant progress, but this evolution is far from over. Voice AI is expanding, from deeper integrations with emerging technologies to more advanced predictive capabilities that can elevate customer experiences to new heights. The future holds more exciting developments, and staying ahead will require ongoing adaptation and willingness to embrace change.

Omnichannel capabilities are just the beginning

One fascinating capability of Voice AI is its ability to seamlessly integrate across multiple platforms, making it a truly omnichannel experience. For example, imagine you’re on a phone call with an AI agent, but due to background noise, it becomes difficult to hear. You could effortlessly switch to texting, and the conversation would pick up exactly where it left off in your text messages, without losing any context.

Similarly, if you’re on a call and need to share a photo, you can text the image to the AI agent, which can interpret the content of the photo and respond to it—all while continuing the voice conversation.

Another example of this multimodal functionality is when you’re on a call and need to spell out something complex, like your last name. Rather than struggle to spell it verbally, you can simply text your name, and the Voice AI system will incorporate the information without disrupting the flow of the interaction. These types of seamless transitions between different modes of communication (voice, text, images) are what make multimodal Voice AI truly revolutionary.
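One way to picture how context survives a channel switch is a single conversation record that every channel writes to. The sketch below is an assumption made for illustration: the `Conversation` class, its fields, and the sample customer are hypothetical, and real platforms may store context quite differently.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Conversation:
    """One customer conversation whose history is shared by every channel."""
    customer_id: str
    # Each entry is (channel, sender, content), kept in arrival order.
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    def add_message(self, channel: str, sender: str, content: str) -> None:
        self.history.append((channel, sender, content))

    def transcript(self) -> List[str]:
        return [f"[{channel}] {sender}: {content}"
                for channel, sender, content in self.history]


# Hypothetical interaction: the call starts on voice, the name arrives by text.
convo = Conversation(customer_id="cust-123")
convo.add_message("voice", "customer", "I need to update my account name.")
convo.add_message("voice", "agent", "Sure, could you spell your last name?")
convo.add_message("sms", "customer", "Wojciechowski")
```

Because the voice and SMS messages land in the same history, the AI agent answering the call can read the texted name as just another turn in the conversation.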

Voice AI’s future is already here, and it’s evolving rapidly. Today’s systems are approaching a level where they are almost indistinguishable from human interactions, with conversations flowing naturally and effortlessly. But the advancements go beyond merely sounding human—Voice AI is becoming smarter and more intuitive, capable of anticipating customer needs before they even express them. With AI-driven predictions, these systems can now suggest solutions, recommend next steps, and provide highly relevant information in real-time.

Imagine a scenario where Voice AI not only understands what a customer says, but also predicts what they might need next. Whether it’s guiding them through a purchase, solving a complex problem, or offering personalized product recommendations, this technology is leading the way toward a future where customer interactions are smooth, proactive, and deeply personalized.

For CX leaders, these capabilities open up unprecedented opportunities to exceed customer expectations. Those adopting next-generation Voice AI are positioning themselves at the forefront of customer service innovation, offering cutting-edge experiences that differentiate them from competitors. As this technology continues to advance, it will become even more powerful, more intuitive, and essential for delivering exceptional, customer-centric service.

Voice AI’s exciting road ahead

From the original Voice AI chatbot to today, Voice AI’s evolution has already transformed the customer experience—and the future promises continued innovation. From intelligent human-like conversations to predictive capabilities that anticipate needs, Voice AI is destined to change the way businesses interact with their customers in profound ways.

The exciting thing is that this is just the beginning.

The next wave of Voice AI advancements will open up new possibilities that we can only imagine. As a CX leader, the opportunity to harness this technology and stay ahead of customer expectations is within reach. It could be the most exciting time to be at the forefront of these changes.

At Quiq, we are here to guide you through this journey. If you’re curious about our Voice AI offering, we encourage you to watch our recent webinar on how we harness this incredible technology.

One thing is for sure, though: As the landscape continues to evolve, we’ll be right alongside you, helping you adapt, innovate, and lead in this new era of customer experience. Stay tuned, because the future of Voice AI is just getting started, and we’ll continue to share insights and strategies to ensure you stay ahead in this rapidly changing world.