Key Takeaways

- Synchronous messaging happens in real time, requires both parties to be present, and is best for urgent issues, quick answers, or troubleshooting.

- Asynchronous messaging allows participants to communicate at different times, supports multiple conversations in parallel, and is best for complex, non-urgent cases, ongoing relationships, or cross-time-zone collaboration.

- Both approaches are complementary – a balanced mix, often enhanced with AI assistants, creates a cost-effective customer experience strategy.

- Beyond CX – the synchronous vs. asynchronous distinction also shapes programming, education, and teamwork, influencing how we build, learn, and collaborate.

Text messaging has become more and more important with each successive generation of customers, and CX directors have responded by gradually making it an ever-higher priority.

But text messaging isn’t a one-size-fits-all solution; there are different ways to approach messaging interactions, and they each have their own use cases.

We’ve talked a lot, for example, about the distinction between rich messaging and plain text messaging, but another key divide is around “synchronous” and “asynchronous” messaging.

That will be our focus today. We’ll define synchronous and asynchronous messaging, explain how each applies to your messaging strategy, and provide the information you need to decide when to use one or the other.

Asynchronous vs Synchronous Messaging: What’s the difference?

In this section, we’ll define asynchronous vs synchronous messaging in simple and clear terms before we discuss their differences.

At their core:

- Synchronous = real-time, dependent, sequential, and blocking.

- Asynchronous = flexible, independent, non-blocking, and staggered.

This distinction is broader than customer service; it also shows up in programming, learning, and teamwork. A familiar everyday example is a phone call (synchronous) versus an email (asynchronous).

What is Synchronous Messaging?

Synchronous messaging is part of a real-time conversation with a clearly defined beginning and end. Both parties must actively engage in the conversation at the same time, whether on their phones or on their keyboards.

You’ve no doubt heard of synchronized swimming or synchronized skating, and the principle is the same with synchronous messaging: everyone must participate at the same time.

Key Characteristics:

- Real-time interaction

- Sequential execution: each step depends on the last

- Blocking: progress pauses until the current exchange is complete

Examples Beyond CX:

- Communication: Phone calls, video conferences, in-person meetings

- Programming: Waiting for a database query before moving on

- Learning: Live webinars or classroom sessions
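The programming example above, waiting for a database query before moving on, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not production code: `fetch_order` is a hypothetical stand-in for a database query, and `time.sleep` simulates its latency. Because each call blocks, the total time is roughly the sum of every request's wait.

```python
import time


def fetch_order(order_id: int) -> dict:
    """Hypothetical stand-in for a database query; sleep simulates latency."""
    time.sleep(0.1)  # the caller is blocked for the whole 0.1 s
    return {"id": order_id, "status": "shipped"}


def handle_requests_synchronously(order_ids: list[int]) -> tuple[list[dict], float]:
    """Process requests one after another: each must finish before the next starts."""
    start = time.perf_counter()
    results = []
    for order_id in order_ids:
        results.append(fetch_order(order_id))  # blocking call
    elapsed = time.perf_counter() - start
    return results, elapsed


if __name__ == "__main__":
    results, elapsed = handle_requests_synchronously([1, 2, 3])
    print([r["id"] for r in results], f"{elapsed:.2f}s")
```

Three requests at about 0.1 seconds each take about 0.3 seconds in total, much like a single agent working through a queue of live chats one at a time.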

What is Asynchronous Messaging?

Asynchronous messaging occurs when two parties have a conversation but don’t have to be present at the same time; what’s more, with asynchronous messaging, there’s generally not a clearly defined end to the conversation.

If you’re like many of us, text messaging with your friends and family occurs asynchronously. When both of you are available, the conversation might go back and forth seamlessly, but you could also have the same conversation over a longer period of time while you’re both working or running errands.

Key Characteristics:

- Flexible interaction: participants don’t need to be present at the same time

- Parallel execution: multiple conversations or tasks can happen at once

- Non-blocking: progress continues without waiting for an immediate response

Examples Beyond CX:

- Communication: Emails, text messages, WhatsApp threads

- Programming: Non-blocking I/O, callbacks, message queues

- Learning: Pre-recorded lectures, discussion boards, self-paced online courses
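For contrast, here is a non-blocking sketch of the same idea using Python's standard `asyncio` library. As before, `fetch_order` is hypothetical and `asyncio.sleep` stands in for network or database latency. While one request is waiting, the event loop runs the others, so the total time is close to the slowest single request rather than the sum of all of them.

```python
import asyncio
import time


async def fetch_order(order_id: int) -> dict:
    """Hypothetical non-blocking query; `await` yields control while waiting."""
    await asyncio.sleep(0.1)
    return {"id": order_id, "status": "shipped"}


async def handle_requests_asynchronously(order_ids: list[int]) -> tuple[list[dict], float]:
    """Start all requests at once and collect the results when they complete."""
    start = time.perf_counter()
    # gather runs the coroutines concurrently and returns results in input order
    results = await asyncio.gather(*(fetch_order(i) for i in order_ids))
    elapsed = time.perf_counter() - start
    return list(results), elapsed


if __name__ == "__main__":
    results, elapsed = asyncio.run(handle_requests_asynchronously([1, 2, 3]))
    print([r["id"] for r in results], f"{elapsed:.2f}s")
```

The design mirrors asynchronous messaging: nothing sits idle waiting on a single reply, which is what lets one agent (or one program) keep several conversations moving at once.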

An Overview of Synchronous Messaging

Above, we defined synchronous messaging as any interaction that occurs in real time while two or more participants are actively engaged. All of today’s channels support synchronous messaging, but web chat and many kinds of in-app messaging are particularly associated with this style of communication.

Pros of Synchronous Messaging

For a number of reasons, synchronous messaging has an important place in customer service. Its benefits include:

- Customers feel more connected: Since conversations happen in real time, customers instantly feel more engaged with your contact center agents. They know there’s a real person on the other side of the screen helping them at this very moment, and that can change how they perceive the whole conversation.

- It’s easy to track performance: Since synchronous conversations have a defined beginning and end, it’s easier to track metrics like average resolution time and see whether your performance is trending up or down.

- Resolutions are faster: Simple problems can be resolved faster over synchronous messaging. Customers are able to immediately get answers to their questions, so small issues don’t get dragged out.
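Because each synchronous session has a clear start and end, average resolution time reduces to a simple mean of end time minus start time. The sketch below assumes a hypothetical `ChatSession` record with `started` and `ended` timestamps; a real messaging platform will store and report this data in its own way.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ChatSession:
    """Hypothetical record of one synchronous chat with a clear start and end."""
    started: datetime
    ended: datetime


def average_resolution_time(sessions: list[ChatSession]) -> timedelta:
    """Mean of (ended - started) across all sessions."""
    if not sessions:
        raise ValueError("no sessions to average")
    total = sum((s.ended - s.started for s in sessions), timedelta())
    return total / len(sessions)


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    sessions = [
        ChatSession(t0, t0 + timedelta(minutes=4)),
        ChatSession(t0, t0 + timedelta(minutes=8)),
        ChatSession(t0, t0 + timedelta(minutes=6)),
    ]
    print(average_resolution_time(sessions))  # 0:06:00
```

Comparing this figure week over week is one way to see whether performance is trending up or down. The same calculation is much harder for asynchronous threads that never clearly end.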

Cons of Synchronous Messaging

Synchronous messaging does have its challenges, though. Here are some problems your team can face when relying solely on this type of messaging:

- Customers spend more time waiting: During busy periods, agents cannot handle multiple conversations simultaneously, and wait times can increase.

- Agents can only handle one conversation at a time: The defining feature of a synchronous conversation is that both parties are chatting at the same time. That means your agents can’t juggle multiple conversations at once, which limits how many customers they can serve overall. One way to offset this is to equip agents with an AI-powered response tool that helps them handle more conversations in the same amount of time.

- It’s harder to solve complex problems: Synchronous messaging may be less than ideal for situations where your agents don’t have the expertise to solve a particular problem. Customers may have to repeat themselves if they’re being passed from one agent to the next, and they’ll likely also spend more time on hold, none of which is optimal.

- Customers can’t get answers outside of business hours: Customers are used to getting what they want when they want it. Since agents must be present for synchronous conversations, customers can only chat during business hours. The alternatives are to hire more agents to cover shifts around the clock or to invest in an AI assistant that is always available.

- It can cost more money: Since agents can’t handle multiple conversations at once, you’ll likely need more agents to cover the same volume of conversations.

An Overview of Asynchronous Messaging

All of today’s messaging options allow for asynchronous messaging, which means that the parties involved don’t have to be present at the same time to hold a conversation.

Interactions that occur over basic SMS, Apple Messages for Business, Instagram, WhatsApp, Twitter Direct Messages, and RCS Business Messaging can be dropped and picked back up again when convenient.

Pros of Asynchronous Messaging

When compared to synchronous messaging, asynchronous messaging comes out ahead by providing benefits to your customers and your contact center team.

Here are some of the benefits for your customers:

- They can multitask: Since conversations happen at the customer’s convenience, customers can go about their lives while receiving help from your team. They’re not locked into a phone conversation or waiting on hold while your agents find answers, making the experience much more pleasurable.

- Customers don’t have to repeat information: The big draw of asynchronous messaging is that it creates an ongoing conversation, meaning that your agents will have access to the conversation history. For this reason, customers won’t have to repeat themselves every time they contact customer service because their information is already there.

Here are just a few ways it improves your contact center team’s workflows compared with synchronous messaging:

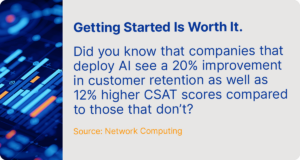

- Agents can manage several conversations at once: Since conversations happen at a slower pace, agents can engage in more than one at a time, up to eight at once with a conversational AI platform like Quiq.

- Agents show improved efficiency: Even at 3-5 simultaneous conversations, agents can move between customers instead of waiting on any single reply, which improves their overall efficiency considerably.

- Lower costs for your customer service center: Since agents work faster and help multiple customers at once, you need fewer of them. The savings can go toward better training, higher-quality tools, or expanded services.

- It’s friendly to AI assistants: With asynchronous messaging, it’s relatively easy to integrate AI assistants powered by large language models. These assistants can welcome customers, gather information, and answer many basic queries, lightening agents’ workloads and freeing them to focus on higher-priority tasks.
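The staffing and cost points above come down to simple arithmetic. The sketch below is a rough back-of-the-envelope model, and every number in it is a hypothetical assumption rather than a benchmark: total agent-minutes of work in a peak hour, divided by what one agent can absorb when handling several conversations concurrently. It deliberately ignores real-world effects such as concurrency lengthening each conversation, breaks, and service-level targets, which proper workforce-management models (for example, Erlang C) account for.

```python
import math


def agents_needed(conversations_per_hour: float,
                  minutes_per_conversation: float,
                  concurrency: int) -> int:
    """Rough agent count for one hour of demand.

    Simplifying assumption: handling `concurrency` chats at once does not
    lengthen each conversation's handle time.
    """
    workload_minutes = conversations_per_hour * minutes_per_conversation
    capacity_per_agent = 60 * concurrency  # agent-minutes absorbed per hour
    return math.ceil(workload_minutes / capacity_per_agent)


if __name__ == "__main__":
    # Hypothetical peak hour: 120 conversations, 10 minutes of agent attention each
    for concurrency in (1, 3, 5):
        print(concurrency, agents_needed(120, 10, concurrency))
    # 1 -> 20 agents, 3 -> 7 agents, 5 -> 4 agents
```

Under these assumptions, moving from one conversation per agent to three or five cuts the required headcount sharply, which is the intuition behind the lower-cost claim.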

Cons of Asynchronous Messaging

That said, asynchronous messaging does come with a few challenges:

- It can turn short conversations into long ones: A customer might reach out with a simple question, your agent might ask a follow-up question, and the customer might respond hours or even days later. One of the traps of asynchronous messaging is that people tend to reply with less urgency, which can lead to longer resolution times and more open tickets on your agents’ dockets.

- It can be harder to track: Asynchronous messaging often doesn’t have a clear beginning or end, which makes it difficult to measure. This issue is largely mitigated if you partner with a purpose-built conversational AI platform that can measure tricky, nebulous metrics like concurrent average handle time.

- Agents have to be able to multitask: Having multiple conversations at the same time, and switching seamlessly between them, is a skill. If not trained properly, agents can get overwhelmed, which can show in their customer communications.

Implementing Synchronous and Asynchronous Messaging

Despite their differences (or because of them), both synchronous and asynchronous messaging have a place in your customer service strategy.

When to Use Synchronous Messaging

We’ve already answered “what is synchronous messaging?” Now let’s look at the situations in which synchronous messaging is the better approach, including:

- When customers need quick answers: There’s no better reason to use synchronous messaging than when customers need quick, immediate answers. In such cases, it’s rarely worth stretching the conversation out asynchronously.

- When defusing difficult situations: However much effort we put into preventing customer service problems, they inevitably happen. Upset customers don’t want to wait for replies while they go about their day; they want immediate responses so they can get their needs met, and that requires synchronous messaging.

- When troubleshooting issues with customers: It’s much easier to walk customers through troubleshooting in real-time, instead of stretching out the conversation over hours or days.

When to Use Asynchronous Messaging

Asynchronous messaging is best used when customer issues aren’t urgent, such as:

- When resolving (certain) complex issues: When customers come to your service team with complex issues that don’t need an immediate resolution, asynchronous messaging really shines. It enables multiple agents and experts to jump in and out of the chat without requiring customers to wait on hold or repeat their information. (Note that this sits in some tension with the last point in the previous section, which recommended synchronous messaging for troubleshooting. To clarify: urgent issues are usually better handled synchronously, but a complex issue is a good candidate for asynchronous communication, especially if it’s relatively non-urgent and resolvable only with help from experts in multiple areas. Use your judgment.)

- When building relationships: Asynchronous messaging is a great way to build customer relationships. Since there’s no clear ending, customers can continue to go back to the same chat and have conversations throughout their customer journey.

- When work is especially busy: When your customer service team is overwhelmed, asynchronous messaging allows them to prioritize customer issues and handle the most urgent ones first. The tools provided by a conversational AI platform like Quiq can help, for example, by gauging customer sentiment to determine who needs immediate attention and who can wait for a response.
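Sentiment-based triage can be pictured as a priority queue. The sketch below assumes, hypothetically, that each waiting conversation already carries a sentiment score from -1.0 (very negative) to 1.0 (very positive) produced by some upstream model. It is not Quiq's API or method, only an illustration using Python's standard `heapq` module: the most negative conversations come out first, with ties broken by who has waited longest.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class WaitingConversation:
    # Hypothetical fields. Sort key: lowest sentiment first, then earliest arrival.
    sentiment: float
    arrived_at: int
    customer: str = field(compare=False)


def triage(conversations: list[WaitingConversation]) -> list[str]:
    """Return customer names in the order an agent should pick them up."""
    heap = list(conversations)
    heapq.heapify(heap)  # min-heap: most negative sentiment at the top
    return [heapq.heappop(heap).customer for _ in range(len(heap))]


if __name__ == "__main__":
    queue = [
        WaitingConversation(0.6, 1, "Ana"),
        WaitingConversation(-0.8, 3, "Ben"),
        WaitingConversation(-0.8, 2, "Chloe"),
        WaitingConversation(0.1, 4, "Dev"),
    ]
    print(triage(queue))  # ['Chloe', 'Ben', 'Dev', 'Ana']
```

A heap keeps each pick-up cheap even as new conversations keep arriving, which matters most on exactly the busy days this section describes.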

Embrace Asynchronous and Synchronous Messaging

We covered a lot of ground in this article! We defined synchronous and asynchronous messaging, discussed the pros and cons of each, and offered guidance on when to use one over the other.

Another subject we’ve touched on repeatedly is the value that agentic AI can bring to organizations focused on customer experience. Check out this whitepaper for more details. Our research has convinced us that agentic AI is one of the next big trends shaping our industry, and you don’t want to be left behind.

Frequently Asked Questions (FAQs)

What is the main difference between synchronous and asynchronous messaging?

Synchronous messaging requires both parties to be present and responding in real-time, while asynchronous messaging allows people to respond at different times, making it more flexible.

Is asynchronous messaging always better than synchronous?

Not necessarily. Asynchronous is ideal for flexibility, multitasking, and managing multiple conversations, but synchronous is best when quick answers or real-time troubleshooting are required.

Can companies use both synchronous and asynchronous messaging together?

Yes. The most effective customer service strategies blend both. Urgent inquiries can be handled synchronously, while complex, non-urgent issues or relationship-building can happen asynchronously.

How do synchronous and asynchronous communication apply outside of customer service?

The distinction applies across many fields – like programming (blocking vs. non-blocking tasks), education (live classes vs. self-paced courses), and teamwork (video meetings vs. Slack or project boards).

How does AI support synchronous and asynchronous messaging?

AI assistants can support synchronous conversations with faster responses and help scale asynchronous channels by handling FAQs, collecting customer feedback, and routing issues efficiently.