Large language models (LLMs) play a pivotal role in automating conversations, enhancing customer experiences, and scaling support capabilities. However, delivering on these promises takes more than deploying powerful models. It requires a comprehensive LLM (or generative AI) toolkit that supports the integration, orchestration, and monitoring of agentic AI workflows.

In this article, I’ll touch on a few time-tested software practices that have helped me bridge the gap between traditional software development and agentic AI engineering.

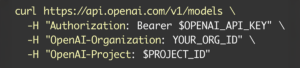

1. API Discoverability and Graph-Based RESTful APIs

Data access is crucial for AI agents tasked with understanding and responding to complex customer inquiries. Modern LLM developer tools should make that data accessible and understandable through APIs described with JSON-LD, GraphQL schemas, or OpenAPI specifications. These machine-readable descriptions let AI agents dynamically query and interpret interconnected data structures. The more discoverable your APIs, the easier it becomes for your AI to provide personalized and accurate service.

Like human agents joining your support team, AI agents need access to, and an understanding of, your system data to provide relevant and accurate customer service.
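To make discoverability concrete, here is a minimal sketch, assuming an OpenAPI 3 document, of how an agent runtime might flatten a spec into a catalog of operations it can reason about and call. The Orders API fragment and the `discover_operations` helper are hypothetical; a real toolkit would load the full spec from your service and would also resolve request bodies and `$ref` schemas, which this sketch skips.

```python
from typing import Any

# Hypothetical OpenAPI 3 fragment for an order-lookup service.
SPEC: dict[str, Any] = {
    "openapi": "3.0.3",
    "info": {"title": "Orders API", "version": "1.0.0"},
    "paths": {
        "/orders/{orderId}": {
            "get": {
                "operationId": "getOrder",
                "summary": "Look up a single order by its ID",
                "parameters": [
                    {"name": "orderId", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                ],
            }
        },
        "/orders": {
            "get": {
                "operationId": "listOrders",
                "summary": "List orders for a customer",
                "parameters": [
                    {"name": "customerId", "in": "query", "required": True,
                     "schema": {"type": "string"}},
                    {"name": "limit", "in": "query", "required": False,
                     "schema": {"type": "integer"}},
                ],
            }
        },
    },
}

# Keys of an OpenAPI Path Item that denote operations (others, like "parameters", do not).
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def discover_operations(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Flatten an OpenAPI document into tool descriptions an agent can reason over."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            params = op.get("parameters", [])
            tools.append({
                "name": op.get("operationId", f"{method}_{path}"),
                "description": op.get("summary", ""),
                "method": method.upper(),
                "path": path,
                "required": [p["name"] for p in params if p.get("required")],
                "optional": [p["name"] for p in params if not p.get("required")],
            })
    return tools


if __name__ == "__main__":
    for tool in discover_operations(SPEC):
        print(tool["name"], tool["method"], tool["path"], "required:", tool["required"])
```

Because the catalog is derived from the spec, adding an endpoint with a clear `operationId` and `summary` makes it visible to the agent without a hand-written tool definition.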

2. Design by Contract with AI Function Calling

Reliable AI-to-system interactions require strict compliance with well-defined operational rules, which is where the practice of design by contract proves invaluable. The best LLM tools should establish clear contracts for AI functions: preconditions that the arguments must satisfy before a call runs, and postconditions that the result must satisfy afterward. Each interaction then stays within its designated boundaries and yields the expected outcome. By mandating validation checks whenever data is read or written, this structured approach minimizes errors and makes AI agents more reliable.

Your LLM toolkit should promote and enforce a defined data schema for your AI agents. For more on this topic, see our LLM Function Calling post.
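A minimal sketch of a contract around a function-calling tool, written in plain Python rather than any particular toolkit's API. The `issue_refund` tool, its argument schema, the in-memory order store, and the `contract` decorator are all hypothetical. The precondition rejects malformed model-generated arguments before any side effect happens; the postcondition checks the result before it flows back to the model.

```python
from functools import wraps
from typing import Any, Callable


class ContractViolation(Exception):
    """Raised when a tool call breaks its precondition or postcondition."""


# Hypothetical argument schema the model must satisfy when calling issue_refund.
REFUND_SCHEMA: dict[str, type] = {"order_id": str, "amount_cents": int, "reason": str}

# Hypothetical order store: order ID -> order total in cents.
ORDER_TOTALS: dict[str, int] = {"A-100": 5_000}


def check_args(args: dict[str, Any]) -> None:
    """Precondition: exactly the schema's keys, exact types, and a positive amount."""
    missing = REFUND_SCHEMA.keys() - args.keys()
    extra = args.keys() - REFUND_SCHEMA.keys()
    if missing or extra:
        raise ContractViolation(f"missing={sorted(missing)} extra={sorted(extra)}")
    for key, expected in REFUND_SCHEMA.items():
        if type(args[key]) is not expected:  # strict: rejects "1500" and True for an int
            raise ContractViolation(f"{key} must be {expected.__name__}")
    if args["amount_cents"] <= 0:
        raise ContractViolation("amount_cents must be positive")


def check_result(args: dict[str, Any], result: dict[str, Any]) -> None:
    """Postcondition: a known status, and a refund never differs from the request."""
    if result.get("status") not in {"refunded", "rejected"}:
        raise ContractViolation(f"unexpected status {result.get('status')!r}")
    if result["status"] == "refunded" and result.get("amount_cents") != args["amount_cents"]:
        raise ContractViolation("refunded amount differs from requested amount")


def contract(pre: Callable[[dict[str, Any]], None],
             post: Callable[[dict[str, Any], dict[str, Any]], None]):
    """Wrap a tool so model-supplied arguments are checked before and after the call."""
    def decorate(fn: Callable[..., dict[str, Any]]) -> Callable[[dict[str, Any]], dict[str, Any]]:
        @wraps(fn)
        def wrapper(args: dict[str, Any]) -> dict[str, Any]:
            pre(args)                # fail fast on bad input, before any side effect
            result = fn(**args)
            post(args, result)       # never hand an out-of-contract result back
            return result
        return wrapper
    return decorate


@contract(pre=check_args, post=check_result)
def issue_refund(order_id: str, amount_cents: int, reason: str) -> dict[str, Any]:
    total = ORDER_TOTALS.get(order_id)
    if total is None or amount_cents > total:
        return {"status": "rejected", "order_id": order_id}
    return {"status": "refunded", "order_id": order_id, "amount_cents": amount_cents}
```

In practice the `args` dict would be the JSON arguments parsed from the model's function-call output (for example with `json.loads`), so a violation can be reported back to the model as an error instead of reaching your backend.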

3. Functional and Aspect-Oriented Programming

Functional programming emphasizes pure functions and immutability. Aspect-oriented programming addresses cross-cutting concerns, such as logging, auditing, and retries, by keeping them out of the core logic. Combined, the two paradigms provide a robust, scalable foundation that is well suited to AI development.

Modern LLM toolkits that embrace these paradigms offer sophisticated building blocks for constructing more resilient cognitive architectures. Each building block can be developed, tested, and reused independently, which makes these components ideal for assembling complex AI agents, including agent swarms. Agent swarms, in which multiple AI agents work in concert, benefit particularly from an atomic yet cohesive approach to decision making. These design choices become more consequential as customer interactions grow more complex over time.
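One way to see how the two paradigms fit together: keep each decision step a pure function over an immutable value, and attach cross-cutting behavior with a decorator, which is the usual stand-in for an aspect in Python since the language has no built-in aspect system. The `Turn` type, the three steps, and the keyword-based classifier are hypothetical placeholders for real agent logic such as an LLM call.

```python
from dataclasses import dataclass, replace
from functools import reduce, wraps
from typing import Callable


@dataclass(frozen=True)
class Turn:
    """Immutable snapshot of one customer turn as it moves through the pipeline."""
    text: str
    intent: str = "unknown"
    reply: str = ""


Step = Callable[[Turn], Turn]
AUDIT_LOG: list[tuple[str, Turn, Turn]] = []


def audited(step: Step) -> Step:
    """Aspect: record each step's input and output without touching its logic."""
    @wraps(step)
    def wrapper(turn: Turn) -> Turn:
        out = step(turn)
        AUDIT_LOG.append((step.__name__, turn, out))
        return out
    return wrapper


@audited
def normalize(turn: Turn) -> Turn:
    return replace(turn, text=turn.text.strip().lower())


@audited
def classify(turn: Turn) -> Turn:
    # Hypothetical keyword rule standing in for an LLM intent classifier.
    intent = "refund" if "refund" in turn.text else "general"
    return replace(turn, intent=intent)


@audited
def respond(turn: Turn) -> Turn:
    replies = {"refund": "Let me look up your order.", "general": "How can I help?"}
    return replace(turn, reply=replies[turn.intent])


def pipeline(*steps: Step) -> Step:
    """Compose steps left to right into a single function."""
    return lambda turn: reduce(lambda acc, step: step(acc), steps, turn)


handle = pipeline(normalize, classify, respond)

if __name__ == "__main__":
    print(handle(Turn("Do you ship to Canada?")).reply)
```

Because each step only maps one `Turn` to a new `Turn`, any step can be unit-tested in isolation or swapped out, and the audit aspect can be removed or replaced without editing the steps themselves.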

4. Observability: Ensuring Transparency and Performance

Your LLM toolkit should offer comprehensive monitoring capabilities that let developers and business operators track how AI agents make decisions. These tools should support both high-level and deep-dive analysis that clearly shows how inputs are processed and how decisions are formulated. This level of transparency is crucial for troubleshooting and optimizing performance.

By offering detailed insights into AI performance and behavior, modern LLM toolkits play a critical role in helping businesses maintain high service quality and build trust in their AI-driven solutions. The ability to trace how and why a message was delivered or an action was taken has never been more important, and top LLM dev tools provide it. Traditional logging and application performance monitoring (APM) software won’t cut it in the era of stochastic AI. See Quiq’s LLM Observability post for a deeper discussion of the topic.
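As a rough illustration of decision-level tracing, the sketch below records each step of an agent turn as a nested span that carries its inputs, outputs, and the reason behind the chosen action. The span model is loosely inspired by distributed tracing, but `Tracer`, `Span`, and `handle_message` are hypothetical stand-ins written in plain Python, not the OpenTelemetry API or any Quiq interface; the keyword check stands in for real retrieval and model calls.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator, Optional


@dataclass
class Span:
    """One step of an agent turn, with its parent, attributes, and timing."""
    name: str
    parent: Optional["Span"]
    attrs: dict[str, Any] = field(default_factory=dict)
    start: float = field(default_factory=time.perf_counter)
    end: Optional[float] = None

    @property
    def depth(self) -> int:
        return 0 if self.parent is None else self.parent.depth + 1


class Tracer:
    """Collects nested spans so a single agent turn can be inspected step by step."""

    def __init__(self) -> None:
        self.spans: list[Span] = []
        self._stack: list[Span] = []

    @contextmanager
    def span(self, name: str, **attrs: Any) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(name, parent, dict(attrs))
        self.spans.append(s)
        self._stack.append(s)
        try:
            yield s
        finally:
            s.end = time.perf_counter()  # close the span even if the step raised
            self._stack.pop()

    def render(self) -> str:
        lines = []
        for s in self.spans:
            ms = ((s.end or s.start) - s.start) * 1000
            lines.append(f"{'  ' * s.depth}{s.name} ({ms:.1f} ms) {s.attrs}")
        return "\n".join(lines)


tracer = Tracer()


def handle_message(message: str) -> str:
    """Hypothetical agent turn; keyword checks stand in for retrieval and an LLM call."""
    with tracer.span("turn", message=message) as turn:
        with tracer.span("retrieve") as retrieve:
            docs = ["refund-policy"] if "refund" in message.lower() else []
            retrieve.attrs["docs"] = docs
        with tracer.span("decide") as decide:
            action = "answer" if docs else "escalate"
            reason = f"grounded in {docs[0]}" if docs else "no supporting documents"
            decide.attrs.update(action=action, reason=reason)
        turn.attrs["action"] = action
        return action


if __name__ == "__main__":
    handle_message("Where is my refund?")
    print(tracer.render())
```

The useful part is the recorded `reason` next to each decision: when a customer asks why the agent escalated, the trace answers directly instead of forcing someone to reconstruct the path from scattered log lines.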

5. Continuous Integration

Continuous integration (CI) systems within LLM toolkits play an important role in the development, testing, integration, and deployment of AI agents. Your toolkit should ensure that agents adapt correctly to changes in models, data, logic, or your system at large. LLM toolkits that oversee the lifecycle of AI agents must be resilient to updates and to iterative improvements driven by real-world scenarios and the emerging capabilities of the models.

Additionally, modern LLM toolkits should provide an environment for running a wide range of scenarios, like the Debug Workbench in Quiq’s AI Studio. Such an environment lets developers closely inspect, recreate, and replay AI behavior on demand or at test time. Across the lifecycle of your project, you will need to stay well informed and react quickly and confidently.
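A minimal sketch of a replay-based regression gate that a CI job could run on every change to prompts, models, or tools. Because model output is stochastic, each hypothetical `Scenario` asserts on behavior (the chosen action, required tool calls, phrases that must never appear) rather than on exact wording. `stub_agent` stands in for your real agent; none of these names come from AI Studio or any specific toolkit.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentResult:
    action: str
    tool_calls: tuple[str, ...]
    reply: str


@dataclass(frozen=True)
class Scenario:
    """A recorded customer message plus the behavior we expect, not the exact wording."""
    name: str
    message: str
    expected_action: str
    required_tools: tuple[str, ...] = ()
    forbidden_phrases: tuple[str, ...] = ()


def check(scenario: Scenario, result: AgentResult) -> list[str]:
    """Return a human-readable list of ways the result breaks the scenario."""
    problems = []
    if result.action != scenario.expected_action:
        problems.append(f"action {result.action!r} != {scenario.expected_action!r}")
    for tool in scenario.required_tools:
        if tool not in result.tool_calls:
            problems.append(f"missing tool call {tool!r}")
    for phrase in scenario.forbidden_phrases:
        if phrase.lower() in result.reply.lower():
            problems.append(f"reply contains forbidden phrase {phrase!r}")
    return problems


def run_suite(agent: Callable[[str], AgentResult],
              scenarios: list[Scenario]) -> dict[str, list[str]]:
    """Replay every scenario; return only the failing ones so CI can fail the build."""
    failures = {}
    for sc in scenarios:
        problems = check(sc, agent(sc.message))
        if problems:
            failures[sc.name] = problems
    return failures


# Hypothetical stand-in for the deployed agent (a real run would call the model).
def stub_agent(message: str) -> AgentResult:
    if "refund" in message.lower():
        return AgentResult("lookup_order", ("get_order",), "Let me check that order for you.")
    return AgentResult("answer", (), "Happy to help!")


SCENARIOS = [
    Scenario("refund-request", "I need a refund for order A-100", "lookup_order",
             required_tools=("get_order",), forbidden_phrases=("guarantee",)),
    Scenario("greeting", "Hi there", "answer"),
]

if __name__ == "__main__":
    failures = run_suite(stub_agent, SCENARIOS)
    if failures:
        for name, problems in failures.items():
            print(f"FAIL {name}: {problems}")
        raise SystemExit(1)  # a non-zero exit code fails the CI job
    print(f"{len(SCENARIOS)} scenarios passed")
```

Asserting on actions and tool calls keeps the suite stable across harmless rewording while still catching regressions such as a model upgrade that stops looking up the order before answering.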

Remaining Skeptical in the Era of AI

As a software developer with 20 years of experience, I’ve found that a healthy dose of skepticism and a reliance on time-tested practices have helped me stay focused on building robust solutions. This approach has not only proven effective over the years but has also laid a strong foundation for my journey as an Applied AI Engineer.

However, LLMs present new challenges that traditional tools and techniques alone can’t fully address. To unlock the potential of these models, we must remain adaptable and open to integrating new tools, techniques, and tactics. While I still often use Emacs for editing, I’ve also come to fully embrace an LLM toolkit with a visual pro-code interface that promotes solid engineering practices. An LLM toolkit will not erase the need for sound software engineering practices, but it does give me, my team, and our customers the tools we need to unlock the power of AI in an enterprise environment.

Finally, tools like AI Studio offer a shared surface where we can collaborate with counterparts across the business to build AI that is well understood, reliable, and impactful. Without collaboration, an AI initiative is likely to grind to a halt. You will need some new tools to help bridge that gap.

To see how Quiq is helping software engineers, operations teams, and business leaders find where AI fits into their work in 2025, learn more about AI Studio.