AI chat applications powered by Large Language Models (LLMs) have helped us reimagine what is possible in a new generation of AI computing.

Along with this excitement, there is also a fair share of concern and fear about the potential risks. Recent media coverage, such as this article from the New York Times, highlights how the safety measures of ChatGPT can be circumvented to produce harmful information.

To better understand the security risks of LLMs in customer service, it’s important to add some context and differentiate between “Broad AI” and “Scoped AI”. In this article, we’ll discuss some of the tactics used to safely deploy Scoped AI assistants in a customer service context.

Broad AI vs. Scoped AI: Understanding the Distinction

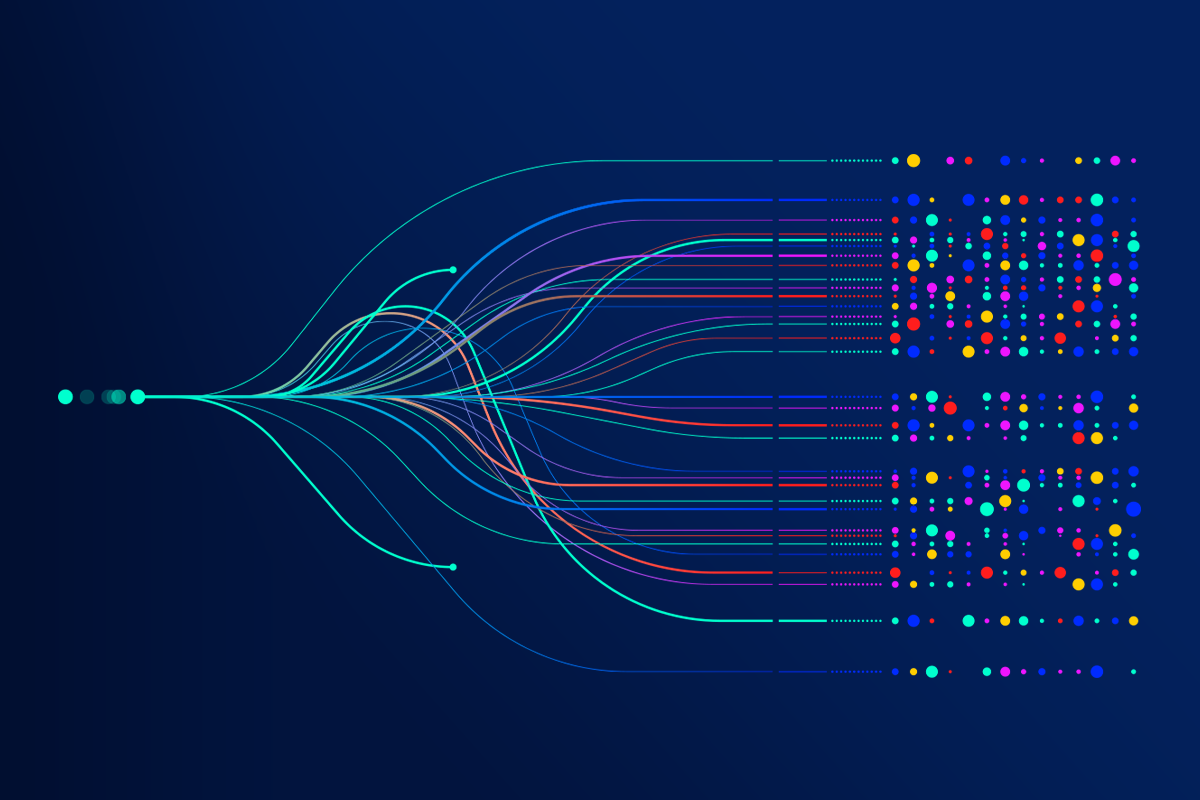

Scoped AI is designed to excel in a well-defined domain, guided and limited by a software layer that keeps its behavior within pre-set boundaries. This is in contrast to Broad AI, which is designed to perform a wide range of tasks across virtually all domains.

Scoped AI and Broad AI answer questions in fundamentally different ways. With Scoped AI, the LLM is not used to determine the answer; it is used to compose a response from the resources given to it. Conversely, answers in Broad AI are determined by the LLM itself and cannot be verified.

Broad AI simply takes a user message and generates a response from the LLM; there is no control layer outside of the LLM itself. Scoped AI is a software layer that applies many steps to control the interaction and enforce safety measures applicable to your company.
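The difference between the two approaches can be sketched in a few lines of Python. This is a minimal illustration and not Quiq's implementation: `broad_ai`, `scoped_ai`, `FALLBACK`, and the `in_scope`, `retrieve`, and `verify` callables are all hypothetical names, and `llm` stands in for whatever model call you use.

```python
from typing import Callable, List

# Hypothetical static reply used whenever a control step fails.
FALLBACK = "Sorry, I can't help with that here."


def broad_ai(message: str, llm: Callable[[str], str]) -> str:
    """Broad AI: the message goes straight to the model, with no outer control layer."""
    return llm(message)


def scoped_ai(
    message: str,
    llm: Callable[[str], str],
    in_scope: Callable[[str], bool],
    retrieve: Callable[[str], List[str]],
    verify: Callable[[str, List[str]], bool],
) -> str:
    """Scoped AI: a software layer wraps the model call with control steps."""
    if not in_scope(message):
        return FALLBACK
    resources = retrieve(message)
    if not resources:
        return FALLBACK
    # The model composes a reply from approved resources only.
    prompt = (
        "Use only these resources:\n"
        + "\n".join(resources)
        + f"\n\nQuestion: {message}"
    )
    draft = llm(prompt)
    # The draft is delivered only if it can be verified against the resources.
    return draft if verify(draft, resources) else FALLBACK
```

Passing the control steps in as functions keeps the sketch independent of any particular model, search index, or verification technique.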

In the following sections, we’ll dig into a more detailed explanation of the steps.

Ensuring the Safety of Scoped AI in Customer Service

1. Inbound Message Filtering

Your AI should perform a semantic similarity search to distinguish in-scope from out-of-scope customer messages. Malicious characters and known prompt injections should be identified and rejected with a static response. Inbound message filtering is an important step in limiting the attack surface to the messages you expect from your customers.
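As a rough sketch of what such a filter might look like, the Python below rejects control characters, matches a couple of injection patterns, and then scores similarity against known in-scope examples. Everything here is an assumption made for illustration: the example messages, the patterns, the threshold, and above all the bag-of-words cosine similarity, which is a toy stand-in for a real embedding model.

```python
import math
import re
from collections import Counter
from typing import Tuple

# Hypothetical in-scope examples; a real deployment would use an embedding
# model and a much larger reference set.
IN_SCOPE_EXAMPLES = [
    "where is my order",
    "how do i return an item",
    "i want to change my shipping address",
]

# Hypothetical patterns for known prompt injections.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all|any|previous|prior) .*instructions", re.IGNORECASE),
    re.compile(r"reveal .*system prompt", re.IGNORECASE),
]

STATIC_REJECTION = "Sorry, I can only help with questions about orders and returns."


def _vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def filter_inbound(message: str, threshold: float = 0.5) -> Tuple[bool, str]:
    """Return (accepted, text); rejected messages get the static response."""
    # Reject control characters other than ordinary whitespace.
    if any(ord(ch) < 32 and ch not in "\n\r\t" for ch in message):
        return False, STATIC_REJECTION
    # Reject messages that match a known injection pattern.
    if any(p.search(message) for p in INJECTION_PATTERNS):
        return False, STATIC_REJECTION
    # Reject messages that are not similar enough to any in-scope example.
    vec = _vectorize(message)
    best = max(_cosine(vec, _vectorize(ex)) for ex in IN_SCOPE_EXAMPLES)
    if best < threshold:
        return False, STATIC_REJECTION
    return True, message
```

Returning the same static text for every rejection avoids telling an attacker which check was triggered.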

2. Classifying Scope

LLMs possess strong Natural Language Understanding and Reasoning skills (NLU & NLR). An AI assistant should use these skills to classify each inbound message. Common classifications include the topic, user type, sentiment, and sensitivity of the message. These classifications should be specific to your company and the jobs of your AI assistant. A data model and rules engine should be used to apply your safety controls.
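One way to picture the data model and rules engine is shown below. It is only a sketch under stated assumptions: the `Classification` fields mirror the classifications listed above, while the label values, the rule set, and the action names are hypothetical. The LLM call that produces the labels is left out.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Classification:
    """Hypothetical data model for the labels an LLM classifier returns."""
    topic: str
    user_type: str
    sentiment: str
    sensitivity: str


# Each rule pairs a predicate with the action to take when it matches.
Rule = Tuple[Callable[[Classification], bool], str]

# Hypothetical rules, checked in order; the first match wins.
RULES: List[Rule] = [
    (lambda c: c.sensitivity == "high", "escalate_to_human"),
    (lambda c: c.topic not in {"orders", "returns", "billing"}, "reject_out_of_scope"),
    (lambda c: c.topic == "billing" and c.user_type != "authenticated", "require_authentication"),
    (lambda c: c.sentiment == "negative", "answer_with_handoff_offer"),
]


def decide(c: Classification, default: str = "answer") -> str:
    """Return the action of the first matching rule, or the default action."""
    for predicate, action in RULES:
        if predicate(c):
            return action
    return default
```

Keeping the rules in plain code or configuration, outside the model, means the safety controls behave the same way regardless of how a message is phrased.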

3. Resource Integration

Once an inbound message is determined to be in-scope, company-approved resources should be retrieved for the LLM to consult. Common resources include knowledge articles, brand facts, product catalogs, buying guides, user-specific data, or defined conversational flows and steps.
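A minimal sketch of this retrieval step follows. The two knowledge articles are invented for the example, and the keyword-overlap ranking is a toy stand-in for whatever search your platform provides (typically a vector index); `Resource`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Resource:
    resource_id: str
    text: str


# Hypothetical company-approved knowledge articles.
APPROVED_RESOURCES = [
    Resource("kb-returns", "Items can be returned within 30 days of delivery."),
    Resource("kb-shipping", "Standard shipping takes 3 to 5 business days."),
]


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 1) -> List[Resource]:
    """Rank approved resources by keyword overlap and drop those with none."""
    q = _tokens(question)
    scored = [(len(q & _tokens(r.text)), r) for r in APPROVED_RESOURCES]
    scored = [(score, r) for score, r in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:top_k]]


def build_prompt(question: str, resources: List[Resource]) -> str:
    """Tell the LLM to compose a reply only from the supplied resources."""
    context = "\n".join(f"[{r.resource_id}] {r.text}" for r in resources)
    return (
        "Answer using only the resources below. "
        "If they do not contain the answer, say you do not know.\n\n"
        f"Resources:\n{context}\n\nCustomer question: {question}"
    )
```

If `retrieve` returns an empty list, the assistant has nothing approved to answer from, and the safer path is a static reply or a handoff instead of an LLM call.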

Your AI assistant should support non-LLM-based interactions to securely authenticate the end user or access sensitive resources. Authenticating users and validating data are important safety measures in many conversational flows.
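To illustrate what a non-LLM interaction can look like, the sketch below verifies a one-time code in ordinary application code and releases user-specific data only after that check succeeds. The in-memory stores, customer ID, and function names are hypothetical; a real system would call an identity provider and an order service.

```python
import hmac
from typing import Dict, Optional

# Hypothetical in-memory stores used only for this example.
_PENDING_CODES: Dict[str, str] = {"cust-42": "815203"}
_ORDERS: Dict[str, str] = {"cust-42": "Order 1001: shipped"}


def verify_code(customer_id: str, submitted_code: str) -> bool:
    """Check a one-time code deterministically, with no LLM in the loop."""
    expected = _PENDING_CODES.get(customer_id)
    if expected is None:
        return False
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected.encode(), submitted_code.encode())


def get_order_status(customer_id: str, authenticated: bool) -> Optional[str]:
    """Release user-specific data only after authentication has succeeded."""
    if not authenticated:
        return None
    return _ORDERS.get(customer_id)
```

Because the check never passes through the model, no prompt can talk the assistant into skipping it.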

4. Verifying Responses

With a response in hand, the AI should verify the answer is in scope and on brand. Fact-checking and corroboration techniques should be used to ensure the information is derived from the resource material. An outbound message should never be delivered to a customer if it cannot be verified by the context your AI has on hand.
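The sketch below shows the shape of such a check: split the draft into sentences and require each one to be supported by the resource material. The word-overlap test and its 0.7 threshold are assumptions chosen to keep the example self-contained. They cannot catch negations or reordered facts, so a production system would more likely use an entailment model or a second LLM call for corroboration.

```python
import re
from typing import List, Set


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_supported(sentence: str, resources: List[str], min_overlap: float = 0.7) -> bool:
    """Toy corroboration: enough of the sentence's words appear in one resource."""
    words = _tokens(sentence)
    if not words:
        return True
    return any(
        len(words & _tokens(resource)) / len(words) >= min_overlap
        for resource in resources
    )


def verify_response(response: str, resources: List[str]) -> bool:
    """Deliver only if the response is non-empty and every sentence is supported."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]
    return bool(sentences) and all(is_supported(s, resources) for s in sentences)
```

When `verify_response` returns `False`, the draft is discarded and the assistant falls back to a static reply or a handoff.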

5. Outbound Message Filtering

Outbound message filtering tactics include analyzing responses for prompt leakage, running semantic similarity checks, consulting keyword blacklists, and ensuring all links and contact information belong to your company.
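Three of these tactics are simple enough to sketch directly: prompt leakage analysis, a keyword list, and a link check. The system prompt, keywords, and allowed domain below are placeholders, and the six-word window used to detect leakage is an arbitrary assumption. Semantic similarity and contact-information checks are omitted for brevity.

```python
import re
from typing import List
from urllib.parse import urlparse

# Hypothetical values; substitute your own prompt, keywords, and domains.
SYSTEM_PROMPT = "You are the assistant for Example Co. Answer using only the supplied resources."
BLOCKED_KEYWORDS = {"guarantee", "lawsuit"}
ALLOWED_DOMAINS = {"example.com"}


def leaks_prompt(response: str, prompt: str = SYSTEM_PROMPT, window: int = 6) -> bool:
    """Flag responses that repeat any run of `window` consecutive prompt words."""
    words = prompt.lower().split()
    text = " ".join(response.lower().split())
    return any(
        " ".join(words[i:i + window]) in text
        for i in range(len(words) - window + 1)
    )


def has_blocked_keyword(response: str) -> bool:
    return bool(BLOCKED_KEYWORDS & set(re.findall(r"[a-z']+", response.lower())))


def has_out_of_scope_link(response: str) -> bool:
    """Flag any link whose host is not an allowed domain or one of its subdomains."""
    for url in re.findall(r"https?://\S+", response):
        host = (urlparse(url.rstrip(".,;:!?)")).hostname or "").lower()
        if not any(host == d or host.endswith("." + d) for d in ALLOWED_DOMAINS):
            return True
    return False


def outbound_problems(response: str) -> List[str]:
    """Return the names of the checks the response failed (empty means deliverable)."""
    checks = [
        ("prompt_leak", leaks_prompt),
        ("blocked_keyword", has_blocked_keyword),
        ("out_of_scope_link", has_out_of_scope_link),
    ]
    return [name for name, check in checks if check(response)]
```

Returning the names of the failed checks, not just a yes or no, gives your monitoring something concrete to count.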

6. Safety Monitoring and Analysis

Deploying AI safely also requires mechanisms to capture and review completed conversations. Collecting user feedback, tracking resource usage, reviewing state changes, and clustering conversations should all be available to help you identify gaps and reinforce the safety measures of your AI.

Performing full conversation classifications will also allow you to identify emerging topics, confirm resolution rates, produce safety reports, and understand the knowledge gaps of your AI.
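As an illustration, conversation-level records can be rolled up into a simple report. The `ConversationRecord` fields and the definition of a knowledge gap used here (a conversation that drew on no resources) are assumptions made for this sketch.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ConversationRecord:
    """Hypothetical summary stored after each completed conversation."""
    topic: str
    resolved: bool
    resources_used: List[str] = field(default_factory=list)
    thumbs_up: Optional[bool] = None


def summarize(records: List[ConversationRecord]) -> Dict[str, object]:
    """Aggregate records into resolution rate, topic counts, and knowledge gaps."""
    total = len(records)
    resolved = sum(1 for r in records if r.resolved)
    # Topics where the assistant found no resource to answer from.
    gaps = Counter(r.topic for r in records if not r.resources_used)
    return {
        "resolution_rate": resolved / total if total else 0.0,
        "topic_counts": dict(Counter(r.topic for r in records)),
        "knowledge_gaps": dict(gaps),
    }
```

A topic that keeps appearing under `knowledge_gaps` is a signal that a knowledge article is missing or hard to retrieve.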

Other Resources

At Quiq, we actively monitor and endorse the OWASP Top 10 for Large Language Model Applications. This guide is provided to help promote secure and reliable AI practices when working with LLMs. We recommend companies exploring LLMs and evaluating AI safety consult this list to help navigate their projects.

Final Thoughts

By safely leveraging LLM technology through a Scoped AI software layer, CX leaders can:

1. Elevate Customer Experience

2. Boost Operational Efficiency

3. Enhance Decision Making

4. Ensure Consistency and Compliance

Reach out to sales@quiq.com to learn how Quiq is helping companies improve customer satisfaction and drive efficiency at the same time.