Generative AI customer service promises.

Unprecedented performance and efficiency.

Implementing Generative AI customer service by using LLMs can markedly reduce response times. These AI-enabled systems can process and comprehend customer inquiries much faster than human agents, providing swift responses to a wide range of questions. This speed translates to shorter wait times for customers and increased efficiency for support teams.

Further, AI-driven systems can manage a very large number of customer interactions simultaneously, largely eliminating queue times so that customers receive attention right away. This functionality allows businesses to scale their customer service operations without a proportional increase in their workforce, leading to significant cost savings and improved customer satisfaction.
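As a rough illustration of what handling many interactions at once can look like, the sketch below uses Python's `asyncio` to process several inquiries concurrently. The function `generate_reply` is a hypothetical stand-in for a call to an LLM service, simulated here with a short delay, and `max_concurrent` is an assumed cap, since real deployments are bounded by provider rate limits and infrastructure capacity.

```python
import asyncio


async def generate_reply(inquiry: str) -> str:
    """Hypothetical stand-in for an LLM call, simulated with a short delay."""
    await asyncio.sleep(0.1)
    return f"Reply to: {inquiry}"


async def handle_all(inquiries: list[str], max_concurrent: int = 100) -> list[str]:
    """Answer all inquiries concurrently, capped at max_concurrent in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def handle_one(inquiry: str) -> str:
        # The semaphore limits how many requests run at the same time.
        async with semaphore:
            return await generate_reply(inquiry)

    # gather() returns results in the same order as the inputs.
    return await asyncio.gather(*(handle_one(q) for q in inquiries))


if __name__ == "__main__":
    sample = ["Where is my order?", "How do I reset my password?"]
    for reply in asyncio.run(handle_all(sample)):
        print(reply)
```

Because the simulated calls wait concurrently rather than one after another, the total time stays close to that of a single call until the cap is reached.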

Personalized customer experiences throughout the customer journey.

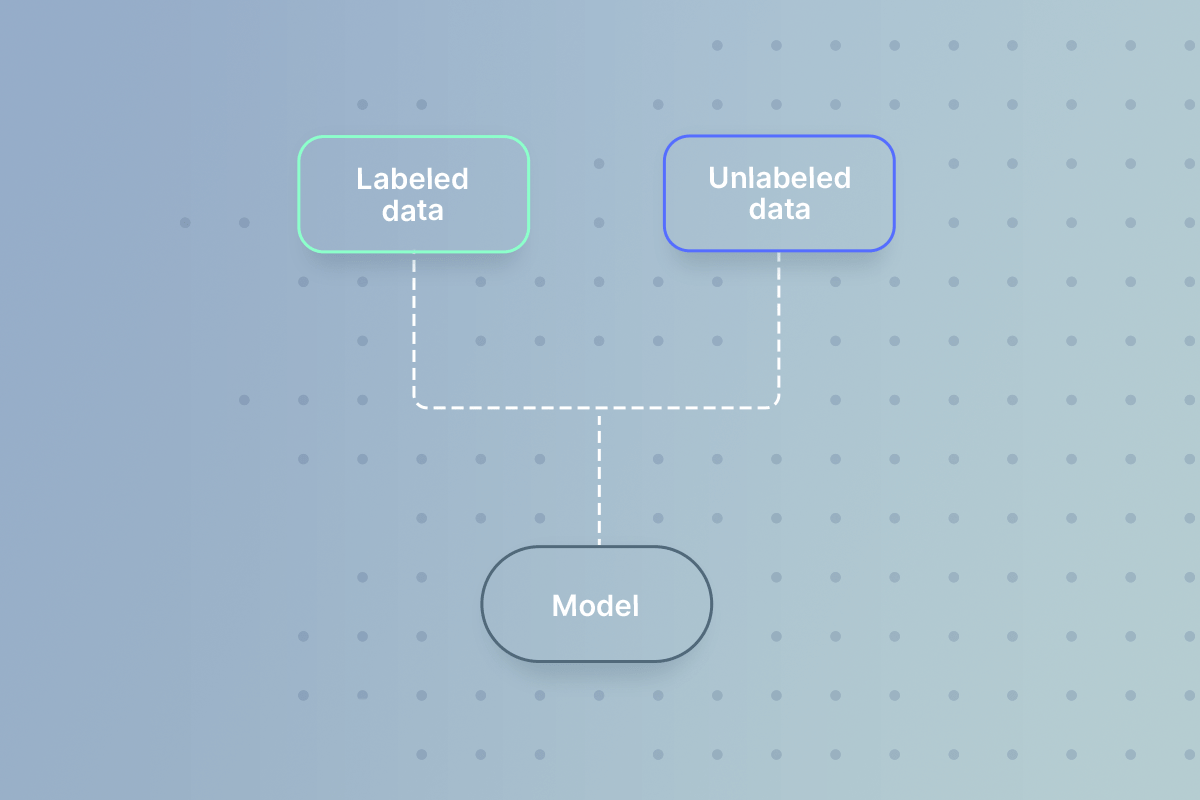

Generative AI and LLMs are exceptionally proficient at understanding the context and subtlety in language, enabling them to provide highly customized responses to customer inquiries throughout customer journeys—including pre- and post-sales. By analyzing extensive data and learning from previous interactions, these AI systems can adapt their communication style and content to align with individual customer preferences and needs—all while matching a brand’s voice and tone.

This heightened level of customization extends beyond merely addressing customers by name. AI-powered customer service can recall past interactions, anticipate customer needs, read from and write to external systems such as CRMs and knowledge bases, and offer proactive solutions. The result is a personalized experience that promotes customer loyalty and satisfaction.

24/7 availability and consistency.

Unlike human agents, AI-powered customer service systems can operate around the clock without fatigue or breaks. Constant availability ensures that customers can receive support whenever needed, regardless of time zones or holidays.

The consistency in service quality is another significant advantage, as AI systems maintain the same level of knowledge and politeness throughout all interactions, eliminating the variability that can occur with human agents.

Multilingual support.

Large Language Models possess the remarkable ability to comprehend and generate text in multiple languages. This feature enables businesses to offer seamless multilingual support without requiring separate teams for each language. As a result, businesses can expand their global reach and provide consistent high-quality customer service to a diverse, international customer base.

Gain insights so you can iteratively improve CX.

You can use generative AI agents to review all customer interactions and provide feedback on them. This allows you to get new insight into your customer conversations, identify areas for improvement, and pinpoint trends and topics—so you can continually improve your CX.
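To make the trend-spotting step concrete, here is a minimal sketch. It assumes each conversation has already been reviewed and labeled with a topic and a resolved flag (for example, by an AI agent, which is not shown here). The field names `topic` and `resolved`, and the function `summarize_topics`, are illustrative assumptions rather than part of any particular product.

```python
from collections import Counter


def summarize_topics(reviews: list[dict]) -> list[dict]:
    """Rank topics by volume and report the share left unresolved.

    Each review is assumed to look like {"topic": str, "resolved": bool}.
    """
    totals = Counter(r["topic"] for r in reviews)
    unresolved = Counter(r["topic"] for r in reviews if not r["resolved"])

    summary = []
    # most_common() orders topics from most to least frequent.
    for topic, count in totals.most_common():
        summary.append(
            {
                "topic": topic,
                "conversations": count,
                "unresolved_rate": unresolved[topic] / count,
            }
        )
    return summary


if __name__ == "__main__":
    sample_reviews = [
        {"topic": "billing", "resolved": True},
        {"topic": "billing", "resolved": False},
        {"topic": "shipping", "resolved": True},
    ]
    for row in summarize_topics(sample_reviews):
        print(row)
```

Topics with both high volume and a high unresolved rate are natural candidates for the first round of improvements.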