Modern AI agents, powered by Large Language Models (LLMs), are transforming how businesses engage with users through natural, context-aware interactions. This marks a decisive shift away from traditional chatbot building platforms with their rigid decision trees and limited understanding. For both AI assistant builders focused on creating agent-facing experiences and those creating customer-facing AI agents, this evolution brings both opportunity and challenge. While LLMs have dramatically expanded what’s possible, they’ve also introduced new complexities in development, testing, and deployment.

In Part One of this technical guide, we’ll focus on the foundational principles and architecture needed to build production-ready AI agents. We’ll explore purpose definition, cognitive architecture, model selection, and data preparation. Drawing from real-world experience, we’ll examine key concepts like atomic prompting, disambiguation strategies, and the critical role of observability in managing the inherently probabilistic nature of LLM-based systems.

Rather than treating LLMs as black boxes, we’ll dive deep into the structural elements that make agentic AI exceptional – from cognitive architecture design to sophisticated response generation. Our approach balances practical implementation with technical rigor, emphasizing methods that scale effectively and produce consistent results.

Then, in Part Two, we’ll explore implementation details, observability patterns, and advanced features that take your AI agents from functional to exceptional.

Whether you’re looking to build AI assistants for your customer service agents, public-facing AI agents for your customers, internal tools, or specialized applications, these principles will help you create more capable, reliable, and maintainable systems. Ready? Let’s get started.

Section 1: Understanding the Purpose and Scope

When you set out to design an AI agent, the first and most crucial step is establishing a clear understanding of its purpose and scope. The probabilistic nature of Large Language Models means we need to be particularly thoughtful about how we define success and measure progress. An agent that works perfectly in testing might struggle with real-world interactions if we haven’t properly defined its boundaries and capabilities.

Defining Clear Objectives

The key to successful AI agent development lies in specificity. Vague objectives like “provide customer support” or “help users find information” leave too much room for interpretation and make it difficult to measure success. Instead, focus on concrete, measurable goals that acknowledge both the capabilities and limitations of your AI agent.

For example, rather than aiming to “answer all customer questions,” a better objective might be to “resolve specific categories of customer inquiries without human intervention.” This provides clear development guidance while establishing appropriate guardrails.

Requirements Analysis and Success Metrics

Success in AI agent development requires careful consideration of both quantitative and qualitative metrics. Response quality encompasses not just accuracy, but also relevance and consistency. An agent might provide factually correct information that fails to address the user’s actual need, or deliver inconsistent responses to similar queries.

Tracking both completion rates and solution paths helps us understand how our agent handles complex interactions. Knowledge attribution is critical – responses must be traceable to verified sources to maintain system trust and accountability.

Designing for Reality

Real-world interactions rarely follow ideal paths. Users are often vague, change topics mid-conversation, or ask questions that fall outside the agent’s scope. Successful AI agents need effective strategies for handling these situations gracefully.

Rather than trying to account for every possible scenario, focus on building flexible response frameworks. Your agent should be able to:

- Identify requests that need clarification

- Maintain conversation flow during topic changes

- Identify and appropriately handle out-of-scope requests

- Operate within defined security and compliance boundaries

Anticipating these real-world challenges during planning helps build the necessary foundations for handling uncertainty throughout development.

Section 2: Cognitive Architecture Fundamentals

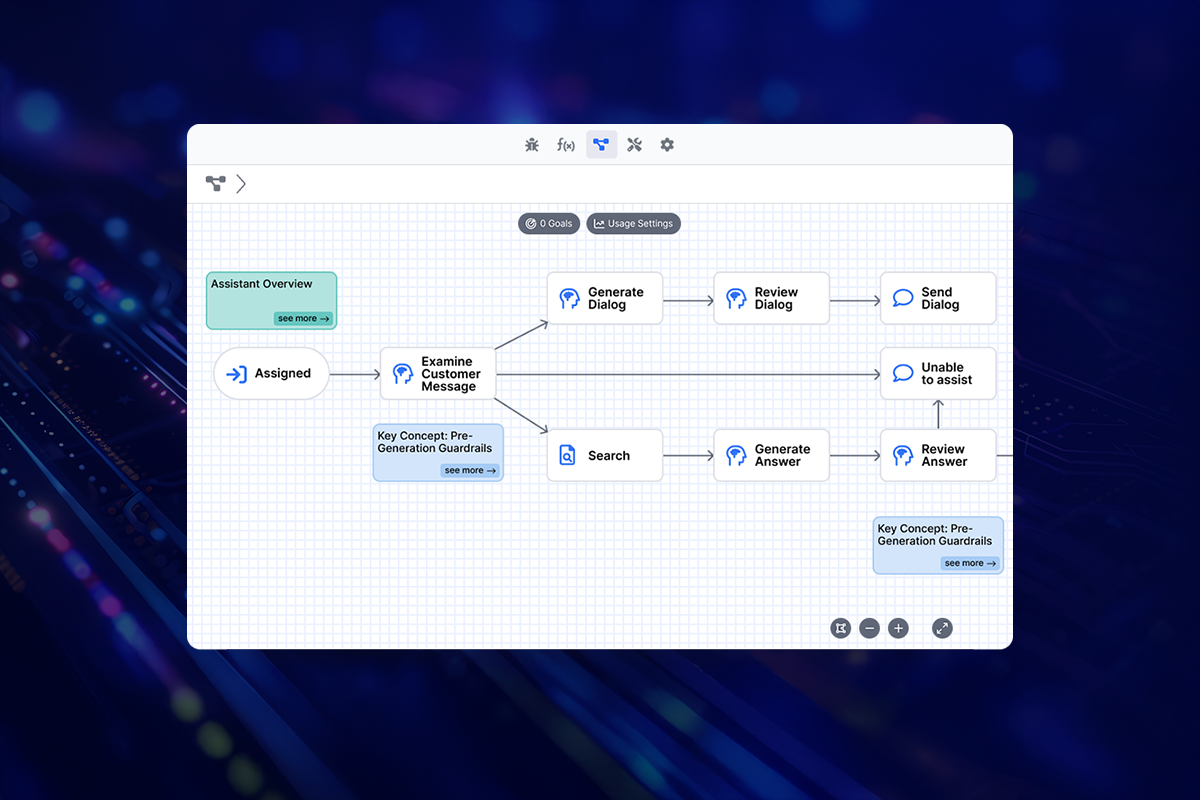

The cognitive architecture of an AI agent defines how it processes information, makes decisions, and maintains state. This fundamental aspect of agent design in AI must handle the complexities of natural language interaction while maintaining consistent, reliable behavior across conversations.

Knowledge Representation Systems

An AI agent needs clear access to its knowledge sources to provide accurate, reliable responses. This means understanding what information is available and how to access it effectively. Your agent should seamlessly navigate reference materials and documentation while accessing real-time data through APIs when needed. The knowledge system must maintain conversation context while operating within defined business rules and constraints.

Memory Management

AI agents require sophisticated memory management to handle both immediate interactions and longer-term context. Working memory serves as the agent’s active workspace, tracking conversation state, immediate goals, and temporary task variables. Think of it like a customer service representative’s notepad during a call – holding important details for the current interaction without getting overwhelmed by unnecessary information.

Beyond immediate conversation needs, agents must also efficiently handle longer-term context through API interactions. This could mean pulling customer data, retrieving order information, or accessing account details. The key is maintaining just enough state to inform current decisions, while keeping the working memory focused and efficient.

Decision-Making Frameworks

Decision making in AI agents should be both systematic and transparent. An effective framework begins with careful input analysis to understand the true intent behind user queries. This understanding combines with context evaluation – assessing both current state and relevant history – to determine the most appropriate action.

Execution monitoring is crucial as decisions are made. Every action should be traceable and adjustable, allowing for continuous improvement based on real-world performance. This transparency enables both debugging when issues arise and systematic enhancement of the agent’s capabilities over time.

Atomic Prompting Architecture

Atomic prompting is fundamental to building reliable AI agents. Rather than creating complex, multi-task prompts, we break down operations into their smallest meaningful units. This approach significantly improves reliability and predictability – single-purpose prompts are more likely to produce consistent results and are easier to validate.

A key advantage of atomic prompting is efficient parallel processing. Instead of sequential task handling, independent prompts can run simultaneously, reducing overall response time. While one prompt classifies an inquiry type, another can extract relevant entities, and a third can assess user emotion. These parallel operations improve efficiency while providing multiple perspectives for better decision-making.

The atomic nature of these prompts makes parallel processing more reliable. Each prompt’s single, well-defined responsibility allows multiple operations without context contamination or conflicting outputs. This approach simplifies testing and validation, providing clear success criteria for each prompt and making it easier to identify and fix issues when they arise.

For example, handling a customer order inquiry might involve separate prompts to:

- Classify the inquiry type

- Extract relevant identifiers

- Determine needed information

- Format the response appropriately

Each step has a clear, single responsibility, making the system more maintainable and reliable.

When issues do occur, atomic prompting enables precise identification of where things went wrong and provides clear paths for recovery. This granular approach allows graceful degradation when needed, maintaining an optimal user experience even when perfect execution isn’t possible.

Section 3: Model Selection and Optimization

Choosing the right language models for your AI agent is a critical architectural decision that impacts everything from response quality to operational costs. Rather than defaulting to the most powerful (and expensive) model for all tasks, consider a strategic approach to model selection.

Different components of your agent’s cognitive pipeline may require different models. While using the latest, most sophisticated model for everything might seem appealing, it’s rarely the most efficient approach. Balance response quality with resource usage – inference speed and cost per token significantly impact your agent’s practicality and scalability.

Task-specific optimization means matching different models to different pipeline components based on task complexity. This strategic selection creates a more efficient and cost-effective system while maintaining high-quality interactions.

Language models evolve rapidly, with new versions and capabilities frequently emerging. Design your architecture with this evolution in mind, enabling model version flexibility and clear testing protocols for updates. This approach ensures your agent can leverage improvements in the field while maintaining reliable performance.

Model selection is crucial, but models are only as good as their input data. Let’s examine how to prepare and organize your data to maximize your agent’s effectiveness.

Section 4: Data Collection and Preparation

Success with AI agents depends heavily on data quality and organization. While LLMs provide powerful baseline capabilities, your agent’s effectiveness relies on well-structured organizational knowledge. Data organization, though typically one of the most challenging and time-consuming aspects of AI development, can be streamlined with the right tools and approach. This allows you to focus on building exceptional AI experiences rather than getting bogged down in manual processes.

Dataset Curation Best Practices

When preparing data for your AI agent, prioritize quality over quantity. Start by identifying content that directly supports your agent’s objectives – product documentation, support articles, FAQs, and procedural guides. Focus on materials that address common user queries, explain key processes, and outline important policies or limitations.

Data Cleaning and Preprocessing

Raw documentation rarely comes in a format that’s immediately useful for an AI agent. Think of this stage as translation work – you’re taking content written for human consumption and preparing it for effective AI use. Long documents must be chunked while maintaining context, key information extracted from dense text, and formatting standardized.

Information should be presented in direct, unambiguous terms, which could mean rewriting complex technical explanations or breaking down complicated processes into clearer steps. Consistent terminology becomes crucial throughout your knowledge base. During this process, watch for:

- Outdated information that needs updating

- Contradictions between different sources

- Technical details that need validation

- Coverage gaps in key areas

Automated Data Transformation and Enrichment

Manual data preparation quickly becomes unsustainable as your knowledge base grows. The challenge isn’t just handling large volumes of content – it’s maintaining quality and consistency while keeping information current. This is where automated transformation and enrichment processes become essential.

Effective automation starts with smart content processing. Tools that understand semantic structure can automatically segment documents while preserving context and relationships, eliminating the need for manual chunking decisions.

Enrichment goes beyond basic processing. Modern tools can identify connections between information, generate additional context, and add appropriate classifications. This creates a richer, more interconnected knowledge base for your AI agent.

Perhaps most importantly, automated processes streamline ongoing maintenance. When new content arrives – whether product information, policy changes, or updated procedures – your transformation pipeline processes these updates consistently. This ensures your AI agent works with current, accurate information without constant manual intervention.

Establishing these automated processes early lets your team focus on improving agent behavior and user experience rather than data management. The key is balancing automation with oversight to ensure both efficiency and reliability.

What’s Next?

The foundational elements we’ve covered – from cognitive architecture to knowledge management – are essential building blocks for production-ready AI agents. But understanding architecture is just the beginning.

In Part Two, we’ll move from principles to practice, exploring implementation patterns, observability systems, and advanced features that separate exceptional AI agents from basic chatbots. Whether you’re building customer service assistants, internal tools, or specialized AI applications, these practical insights will help you create more capable, reliable, and sophisticated systems.

Read the next installment of this guide: Engineering Excellence: How to Build Your Own AI Assistant – Part 2