Key Takeaways

- Guardrails’ Role: Ensure AI operates responsibly, aligns with business goals, and meets compliance standards.

- Three Intervention Levels: Pre-generation (input checks), in-generation (real-time monitoring), and post-generation (response review).

- Implementation Techniques: Use prompt templating, moderation APIs, response scoring, and consensus modeling for safety and accuracy.

- CX Use Cases: Guardrails enable AI to handle simple tasks autonomously while escalating complex issues to humans.

- Balancing Risks: Testing ensures guardrails are neither too strict nor too loose, maintaining effectiveness.

- Human Oversight: Guardrails highlight when human intervention is needed for high-stakes or ambiguous situations.

- Emerging Tools: Tools like GuardrailsAI and PromptLayer, plus standards from NIST and IEEE, support responsible AI.

Large language models (LLMs) are rapidly becoming core components of enterprise systems, in functions ranging from customer support to content creation. As adoption matures, the conversation is shifting from experimentation to deployment at scale with reliability and safeguards in place. That shift makes it essential to establish guardrails: practical systems that help AI behave in ways that reflect business priorities, meet compliance standards, and respect user expectations.

Guardrails aren’t constraints on innovation; they’re the structure that allows AI to operate responsibly and consistently, especially in customer-facing or high-stakes environments.

In this article, we’ll explore:

- The types of LLM guardrails

- Techniques for implementation

- Use cases and limitations

- How Quiq applies guardrails to support responsible automation in customer experience

Why Guardrails Matter for Enterprise-Grade LLMs

Enterprise AI adoption doesn’t occur in a vacuum; it takes place in environments where accuracy, privacy, and accountability are non-negotiable. Whether you’re in healthcare, finance, or customer service, the cost of an AI misstep can be high: misinformation, privacy breaches, regulatory violations, or reputational damage.

The key is knowing what your AI is allowed to do, and just as importantly, what it isn’t. That’s not just a technical problem; it’s a trust-building exercise for your customers, teams, and brand.

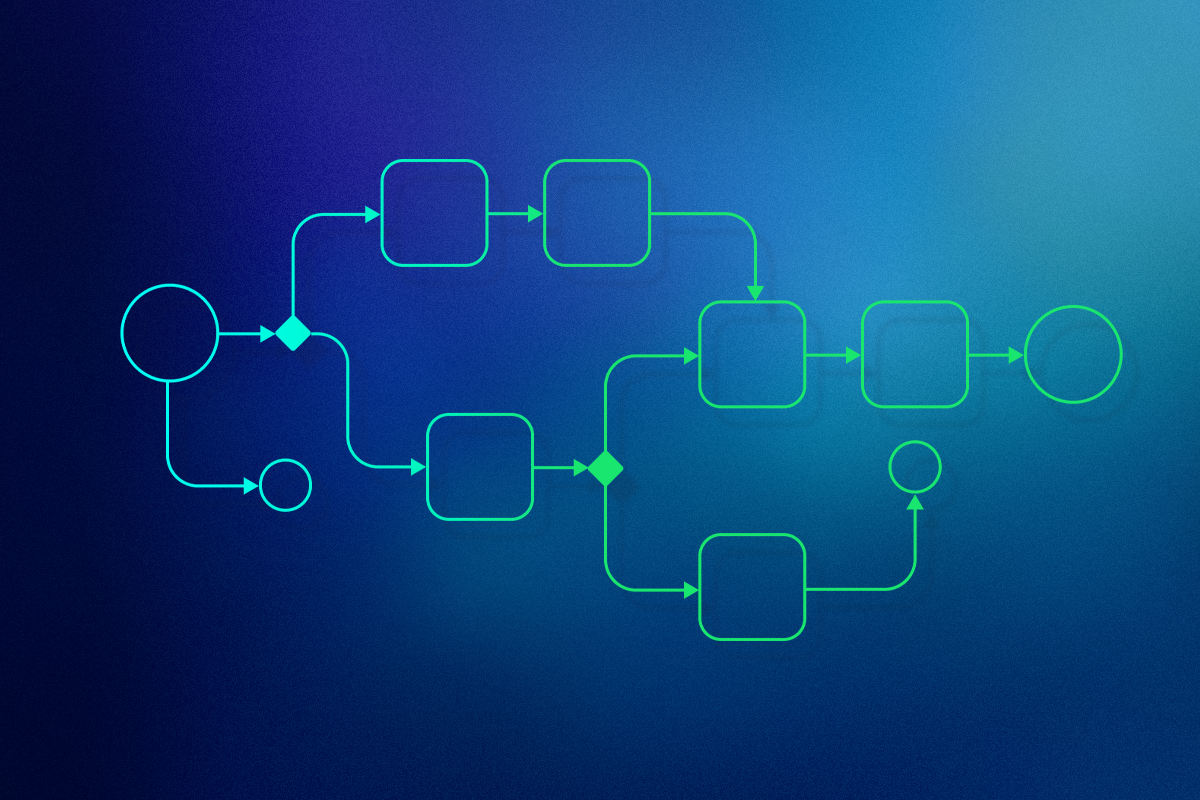

The Three Levels of LLM Intervention

Think of guardrails the way you’d think about safety features in a car; you don’t add them all at once. You install them where they’ll do the most good. When it comes to AI, most teams approach guardrails in three parts, each with a specific role to play.

1. Pre-generation

Guardrails begin before the model ever sees an input. Each input is first reviewed for red flags, which could be as simple as an odd format or as serious as a request for personal information. If the system identifies something out of scope, such as a task the AI isn’t designed to handle, it can halt the process, redirect the request to a human, or direct the user elsewhere.
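A minimal sketch of a pre-generation check, assuming simple pattern and keyword rules. The patterns, topic list, length limit, and the `check_input` name are illustrative assumptions, not a description of any production system.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns and topics, chosen only for illustration.
PII_PATTERNS = {
    "US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
}
OUT_OF_SCOPE_TOPICS = ("legal advice", "medical diagnosis")
MAX_CHARS = 2000  # assumed upper bound on a well-formed message


@dataclass
class InputDecision:
    action: str  # "allow", "block", or "escalate"
    reason: str


def check_input(message: str) -> InputDecision:
    """Screen a user message before it is sent to the model."""
    text = message.strip()
    # Odd format: empty or oversized input is halted.
    if not text or len(text) > MAX_CHARS:
        return InputDecision("block", "empty or oversized input")
    # Personal information in the message is halted.
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            return InputDecision("block", f"possible {label} in input")
    # Out-of-scope topics are redirected to a human.
    lowered = text.lower()
    for topic in OUT_OF_SCOPE_TOPICS:
        if topic in lowered:
            return InputDecision("escalate", f"out-of-scope topic: {topic}")
    return InputDecision("allow", "no red flags found")
```

A real deployment would likely replace the keyword rules with classifiers, but the three outcomes (halt, hand off, continue) stay the same.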

2. In-generation

As the model generates its response, real-time checks help prevent inappropriate or out-of-scope output. Token-level monitoring with safety classifiers or stop sequences can interrupt generation if it begins to veer into restricted territory. This layer is instrumental in high-sensitivity environments where brand voice, compliance, or factual precision must be tightly controlled.
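One way to picture in-generation monitoring is a wrapper around a token stream that stops as soon as the accumulated text trips a safety check. This is a sketch under assumptions: `guarded_stream` and `mentions_refund_promise` are hypothetical names, and the keyword test stands in for a real safety classifier.

```python
from typing import Callable, Iterable, Iterator


def guarded_stream(
    tokens: Iterable[str],
    is_restricted: Callable[[str], bool],
    fallback: str = "[response stopped for review]",
) -> Iterator[str]:
    """Yield tokens until the accumulated text trips the safety check."""
    emitted = ""
    for token in tokens:
        candidate = emitted + token
        if is_restricted(candidate):
            # Stop before the offending token is shown to the user.
            # Tokens yielded earlier have already been sent.
            yield fallback
            return
        emitted = candidate
        yield token


def mentions_refund_promise(text: str) -> bool:
    # Stand-in for a safety classifier: a plain keyword test.
    return "guaranteed refund" in text.lower()
```

For example, streaming the tokens `["You ", "will ", "get ", "a ", "guaranteed ", "refund"]` through `guarded_stream` with `mentions_refund_promise` stops at the final token and emits the fallback text instead.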

3. Post-generation

Once a response is generated, it doesn’t go straight to the user. Quiq applies a final layer of review, ranking the output based on quality, clarity, and confidence. If something appears to be off, such as unclear phrasing or questionable accuracy, the system can revise it, flag it, or send it to a human before it is delivered.
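The post-generation decision can be sketched as routing on the weakest of several scores. The `Review` fields mirror the quality, clarity, and confidence criteria above; the thresholds and the `route_response` name are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Review:
    # Each score is assumed to fall in the range [0, 1].
    quality: float
    clarity: float
    confidence: float


def route_response(review: Review, deliver_at: float = 0.8, revise_at: float = 0.5) -> str:
    """Return 'deliver', 'revise', or 'escalate' based on the weakest score."""
    weakest = min(review.quality, review.clarity, review.confidence)
    if weakest >= deliver_at:
        return "deliver"
    if weakest >= revise_at:
        return "revise"
    # Anything weaker goes to a human before it reaches the user.
    return "escalate"
```

Using the minimum rather than an average means a single weak dimension, such as low confidence, is enough to hold a response back.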

Quiq employs techniques across all three stages. On the input side, messages are scanned for prompt injection attempts and sensitive topics that a person, not a machine, should handle. On the output side, responses are evaluated against business-specific criteria, such as tone, factual grounding, and user expectations.

Common Techniques for Implementing LLM Guardrails

Quiq uses a range of techniques to implement and reinforce LLM guardrails, maintaining safety, consistency, and customer trust across AI-powered conversations:

Prompt templating and input validation

Before a model can respond reliably, it has to understand what it’s being asked. That’s why Quiq puts structure around the prompt itself and makes sure inputs are clear, complete, and within scope, so the AI isn’t working off something vague or off-target. It’s a simple step, but it keeps things from going sideways early on.
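As an illustration, prompt templating with input validation might look like the following sketch. The template wording, field names, and `build_prompt` function are hypothetical.

```python
from string import Template

# Hypothetical template; the wording and field names are illustrative only.
SUPPORT_PROMPT = Template(
    "You are a support assistant for $brand.\n"
    "Answer only questions about: $allowed_topics.\n"
    "Customer message: $message"
)

REQUIRED_FIELDS = ("brand", "allowed_topics", "message")


def build_prompt(**fields: str) -> str:
    """Fill the template, refusing missing or blank fields."""
    missing = [name for name in REQUIRED_FIELDS if not fields.get(name, "").strip()]
    if missing:
        raise ValueError(f"missing prompt fields: {', '.join(missing)}")
    values = {name: fields[name].strip() for name in REQUIRED_FIELDS}
    return SUPPORT_PROMPT.substitute(values)
```

Raising an error on an incomplete input keeps a vague or half-built prompt from ever reaching the model.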

Moderation APIs

Integrated services from OpenAI, Google, AWS, and other providers help flag potentially unsafe, toxic, or inappropriate content before it’s processed. These APIs act as a first line of defense, especially useful in public-facing or high-volume channels.
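Provider APIs differ, so the sketch below wraps the call behind a hypothetical `moderate` adapter rather than naming any specific vendor method. It shows one possible design choice, failing closed when the moderation service cannot be reached.

```python
from typing import Callable


def passes_moderation(text: str, moderate: Callable[[str], bool]) -> bool:
    """Return True only when the check runs and does not flag the text.

    `moderate` is a hypothetical adapter around whichever provider is in
    use; it is assumed to return True when the content is flagged.
    """
    try:
        flagged = moderate(text)
    except Exception:
        # Fail closed: if the moderation service is unavailable,
        # do not pass the content through unchecked.
        return False
    return not flagged
```

Whether to fail closed or fail open is a business decision; a high-volume public channel may prefer the stricter behavior shown here.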

Response scoring and reranking

Quiq doesn’t simply generate a single response and send it. Candidate replies are scored, and some need more scrutiny than others. If a reply doesn’t fit the context or falls short on quality, it is filtered out or sent for review. Only replies that match the task and tone move forward.
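Scoring and reranking can be sketched as choosing the highest-scoring candidate and rejecting it if it still falls below a minimum. The `pick_best` function, the 0.7 threshold, and the toy `keyword_coverage` scorer are assumptions; a real scorer would more likely be a trained model.

```python
from typing import Callable, List, Optional


def pick_best(
    candidates: List[str],
    score: Callable[[str], float],
    min_score: float = 0.7,
) -> Optional[str]:
    """Return the top-scoring candidate, or None if none is good enough."""
    scored = [(score(text), text) for text in candidates]
    if not scored:
        return None
    best_score, best_text = max(scored, key=lambda pair: pair[0])
    # None signals that the reply should be filtered out or reviewed.
    return best_text if best_score >= min_score else None


def keyword_coverage(text: str, required=("delivery", "date")) -> float:
    # Toy scorer: the fraction of required words present in the reply.
    lowered = text.lower()
    return sum(word in lowered for word in required) / len(required)
```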

Natural language inference (NLI) models

Even the most advanced LLMs can produce answers that sound right but aren’t. To help catch these errors, Quiq applies NLI techniques that assess whether a generated response is supported by the available evidence or contradicts known facts before anything reaches the customer.
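An NLI model labels a (premise, hypothesis) pair as entailment, neutral, or contradiction. The sketch below assumes such a model is available behind a hypothetical `nli` callable and shows only the gating logic built on its labels.

```python
from typing import Callable, Iterable

# Assumed interface: (premise, hypothesis) -> "entailment" | "neutral" | "contradiction"
Nli = Callable[[str, str], str]


def is_supported(response: str, evidence: Iterable[str], nli: Nli) -> bool:
    """Accept a response only if some evidence entails it and none contradicts it."""
    labels = [nli(premise, response) for premise in evidence]
    if "contradiction" in labels:
        return False
    return "entailment" in labels
```

A response that is merely neutral against every piece of evidence is treated as unsupported, which is the cautious choice for customer-facing answers.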

Consensus modeling

When the stakes are high or the input is ambiguous, Quiq consults multiple models or higher-performance variants to build consensus. If the models disagree or produce borderline results, the response may be regenerated, adjusted, or handed off to a human.
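In its simplest form, consensus can be a majority vote over the answers from several models. The sketch assumes short, comparable answers (for example, a decision label); the `consensus` name and the 0.6 agreement threshold are illustrative.

```python
from collections import Counter
from typing import List, Optional


def consensus(answers: List[str], min_agreement: float = 0.6) -> Optional[str]:
    """Return the majority answer, or None when the models disagree too much."""
    if not answers:
        return None
    normalized = [answer.strip().lower() for answer in answers]
    top_answer, count = Counter(normalized).most_common(1)[0]
    if count / len(normalized) >= min_agreement:
        return top_answer
    # No clear majority: regenerate, adjust, or hand off to a human.
    return None
```

Free-form replies would need a semantic comparison rather than exact string matching, which this sketch does not attempt.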

Beyond these techniques, context setting and disambiguation are essential. The most effective guardrails sometimes look like good conversation design. If the AI doesn’t have enough information to answer confidently or if the request is too vague, it doesn’t guess. It asks a follow-up question, just like a human agent would. This not only prevents hallucinations but also improves the overall experience by clarifying intent before continuing.
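The ask-before-answering behavior can be sketched as a check for missing details. The intent name, required fields, and `next_step` function are hypothetical.

```python
from typing import Dict

# Hypothetical mapping from intent to the details needed to act on it.
REQUIRED_DETAILS = {
    "reschedule_delivery": ["order_number", "new_date"],
}


def next_step(intent: str, details: Dict[str, str]) -> str:
    """Return a follow-up question if details are missing, else 'answer'."""
    needed = REQUIRED_DETAILS.get(intent, [])
    missing = [name for name in needed if not details.get(name)]
    if missing:
        # Ask for one detail at a time instead of guessing.
        return f"ask: Could you share your {missing[0].replace('_', ' ')}?"
    return "answer"
```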

Grounding responses in retrieved documents is another way to limit hallucinations. As Pablo Arredondo, VP at CoCounsel at Thomson Reuters, explained to Wired, “Rather than just answering based on the memories encoded during the initial training of the model, you utilize the search engine to pull in real documents…and then anchor the response of the model to those documents.”

Key Use Cases in CX and Business Messaging

“National Furniture Retailer Reduces Escalations to Human Agents by 33%”

This case study describes how a leading national furniture brand used Quiq’s AI platform to reduce support escalations by 33%, boost chat sales on its largest-ever high-volume sales day, and provide proactive upsell guidance through product recommendations.

Customer-facing interactions are where the value of LLM guardrails becomes especially clear. They help AI stay on-message, support the brand voice, and avoid costly missteps.

Here are a few ways they come to life:

- An AI assistant working with a furniture retailer can handle delivery rescheduling without issue. But when it comes to refund requests, it pauses and escalates to a human before taking action.

- A CX agent assist tool can score conversations for tone and sentiment, but flags negative interactions for human quality review before any performance feedback is shared.

- An AI agent focused on sales can recommend products or services, but is limited to upselling only when relevant customer signals are detected.

In customer interactions, timing matters. When someone stops mid-conversation, such as during a product order, Quiq’s AI doesn’t prematurely close the loop. Instead, it triggers a check-in to help move things forward and avoid dropped requests.

Risks of Over-Reliance or Misconfiguration

While guardrails are essential, they aren’t foolproof. When configured too tightly, they can over-censor AI responses, blocking helpful or harmless content because it resembles something risky. This often results in replies that are vague, overly cautious, or simply not helpful to the customer.

On the other hand, loosening the guardrails too much can introduce risk. For example, hallucination filtering is designed to catch confident but incorrect answers. If it is tuned too permissively, false negatives slip through, and misleading or factually incorrect content reaches the customer. In some cases, that could result in an agent (human or AI) making an unauthorized refund or offering incorrect information.

Quiq addresses these tradeoffs through rigorous adversarial testing and curated test sets designed to surface both false positives and false negatives. This helps teams tune the system for real-world conditions, ensuring guardrails support, not obstruct, effective customer interactions.
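To make the tradeoff measurable, a labeled test set can be run through a guardrail and both error rates reported. This is a sketch: `guardrail_error_rates` is a hypothetical helper, and each test case is assumed to be a `(text, should_block)` pair.

```python
from typing import Callable, Dict, Iterable, Tuple


def guardrail_error_rates(
    cases: Iterable[Tuple[str, bool]],
    guardrail: Callable[[str], bool],
) -> Dict[str, float]:
    """Measure how often a guardrail over-blocks and under-blocks.

    `guardrail` is assumed to return True when it blocks the text.
    """
    false_pos = false_neg = safe_total = unsafe_total = 0
    for text, should_block in cases:
        blocked = guardrail(text)
        if should_block:
            unsafe_total += 1
            if not blocked:
                false_neg += 1  # risky content slipped through
        else:
            safe_total += 1
            if blocked:
                false_pos += 1  # harmless content was blocked
    return {
        "false_positive_rate": false_pos / safe_total if safe_total else 0.0,
        "false_negative_rate": false_neg / unsafe_total if unsafe_total else 0.0,
    }
```

A high false positive rate points to guardrails that are too strict, and a high false negative rate to guardrails that are too loose.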

The Role of Human-in-the-Loop

Guardrails don’t eliminate the need for human oversight. They clarify where it’s needed most. By identifying the limits of what an AI should handle, guardrails create clear decision points for when to escalate an issue to a person.

Scenarios involving high emotional stakes, ambiguous intent, or potential risk to the user or brand are common triggers. If a customer expresses frustration, makes a complaint that doesn’t match standard workflows, or raises a concern with legal, health, or safety implications, the AI defers to human judgment.

For example, if a user reports that a Wi-Fi device has caught fire, the system won’t attempt a scripted response or try to solve the problem on its own. When something serious arises, such as a safety concern or a conversation that’s clearly going off course, it flags the issue and hands it over so that a human agent can handle it with the judgment and context it deserves.

Not every situation should be left to automation. In cases where the stakes are high or the details are unclear, it’s better to bring in a human. Quiq builds in natural points of escalation so agents can step in when it matters most.

This approach helps prevent missteps and keeps the experience grounded. It’s not about slowing things down. It’s about knowing when a conversation needs a person, not a model.

Emerging Tools and Standards in Guardrails AI

A growing ecosystem of tools and frameworks, including platforms and libraries designed specifically to monitor and manage large language model behavior, is helping teams enforce AI guardrails:

- GuardrailsAI: a Python framework for validation and output control

- PromptLayer and Rebuff: tooling for tracking prompt history (PromptLayer) and detecting prompt injection (Rebuff)

- Moderation APIs: from OpenAI and other model providers

Standards bodies such as NIST and IEEE are also working to formalize standards surrounding risk, explainability, and auditability.

Expert Insight

“AI guardrails are mechanisms that help ensure organizational standards, policies, and values are consistently reflected by enterprise AI systems.”

— McKinsey & Company

Quiq combines trusted third-party tools with in-house methods developed specifically for the complexities of enterprise customer experience. Let’s say someone wants to reschedule a delivery. Because it’s a simple request, the system handles it using a quick, low-friction process. But if the question is about getting a refund, it gets a closer look. In those cases, the platform checks the details against the company’s policy. If it detects something outside the usual parameters, it alerts a human agent to intervene.
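The reschedule-versus-refund routing described here can be sketched as a small policy check. The intent names, the policy limits, and the returned labels are all assumptions; an actual policy would come from the company’s own rules.

```python
def handle_request(
    intent: str,
    amount: float = 0.0,
    days_since_purchase: int = 0,
    max_amount: float = 100.0,      # hypothetical policy limit
    return_window_days: int = 30,   # hypothetical policy limit
) -> str:
    """Route a request to 'automate', 'continue', or 'alert_human'."""
    if intent == "reschedule_delivery":
        # Simple request: quick, low-friction handling.
        return "automate"
    if intent == "refund":
        # Closer look: compare the details against policy.
        within_policy = amount <= max_amount and days_since_purchase <= return_window_days
        return "continue" if within_policy else "alert_human"
    # Unknown intents are never handled automatically.
    return "alert_human"
```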

To help responses stay consistent with brand voice and customer expectations, Quiq uses adaptive prompt chains. These allow the AI to adjust its communication based on what’s already known about the customer, as well as the company’s tone and seasonal context. The result is a more relevant and human-like interaction that evolves with the conversation.

How Quiq Implements Responsible AI with Guardrails

At Quiq, guardrails are built into every layer of the LLM deployment pipeline:

- Secure inputs, where prompts are structured and validated

- Filtered outputs, using scoring and consensus models

- Confidence scoring, to assess and rerank replies before delivery

Internal frameworks are designed to align outputs with each client’s brand voice and policies. Any response below a confidence threshold is either escalated or regenerated.
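The escalate-or-regenerate rule can be sketched as a bounded retry loop. The `generate` and `confidence` callables, the 0.75 threshold, and the two-attempt limit are hypothetical stand-ins.

```python
from typing import Callable, Optional, Tuple


def deliver_or_escalate(
    generate: Callable[[], str],
    confidence: Callable[[str], float],
    threshold: float = 0.75,
    max_attempts: int = 2,
) -> Tuple[str, Optional[str]]:
    """Regenerate while below the threshold, then escalate if still below."""
    for _ in range(max_attempts):
        reply = generate()
        if confidence(reply) >= threshold:
            return ("deliver", reply)
    # No attempt cleared the threshold: hand off to a human.
    return ("escalate", None)
```

Capping the number of attempts keeps a low-confidence request from looping indefinitely before a person sees it.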

Designing Guardrails for Scalable, Safe LLMs

Effective guardrails start early. Teams should build and test safety mechanisms during development, not after deployment. Guardrails aren’t about censorship; they’re about clarity. They help teams set expectations, limit AI agency appropriately, and maintain consistency at scale.

It’s not about what the AI can do. It’s about what it should do.

Frequently Asked Questions (FAQs)

What techniques implement guardrails?

Prompt templating, moderation APIs, response scoring, and consensus modeling.

How do guardrails improve CX?

They help AI stay on-brand, avoid errors, and escalate sensitive issues to humans.

What are LLM guardrails?

Systems ensuring AI operates responsibly, aligns with business goals, and meets compliance standards.

What are the three levels of guardrails?

Pre-generation (input checks), in-generation (real-time monitoring), and post-generation (response review).

Why is human oversight needed?

It ensures high-stakes or ambiguous issues are handled with care and judgment.