This is the second post in a three-part series clarifying the biggest misconceptions holding CX leaders like you back from integrating GenAI into your CX strategy. Our goal? To assuage your fears and help you start getting real about adding an AI Assistant to your contact center, all in a fun “two truths and a lie” format.

Did you know that the Golden Gate Bridge was transported for the second time across Egypt in October of 2016?

Or that the world record for crossing the English Channel entirely on foot is held by Christof Wandratsch of Germany, who completed the crossing in 14 hours and 51 minutes on August 14, 2020?

Probably not, because GenAI made these “facts” up. They’re called hallucinations, and AI hallucination misconceptions are holding a lot of CX leaders back from getting started with GenAI.

In the first post of our AI Misconceptions series, we discussed why your data is definitely good enough to make GenAI work for your business. In fact, you actually need a lot less data to get started with an AI Assistant than you probably think.

Now, we’re debunking AI hallucination myths and separating some of the biggest AI hallucination facts from fiction. Could adding an AI Assistant to your contact center put your brand at risk? Let’s find out.

Misconception #2: “GenAI will hallucinate and hurt my brand.”

While the example hallucinations provided above are harmless and even a little funny, this isn’t always the case. Unfortunately, there are many examples of chatbots cussing out customers or making racist or sexist remarks. This causes a lot of concern among CX leaders looking to use an AI Assistant to represent their brand.

Truth #1: Hallucinations are real (no pun intended).

Understanding AI hallucinations hinges on realizing that GenAI wants to provide answers — whether or not it has the right data. Hallucinations like those in the examples above occur for two common reasons.

AI-Induced Hallucinations Explained:

- The large language model (LLM) simply does not have the correct information it needs to answer a given question. This is what causes GenAI to get overly creative and start making up stories that it presents as truth.

- The LLM has been given an overly broad and/or contradictory dataset. In other words, the model gets confused and begins to draw conclusions that are not directly supported in the data, much like a human would do if they were inundated with irrelevant and conflicting information on a particular topic.

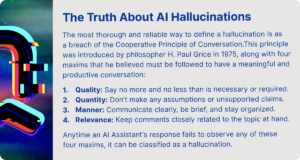

Truth #2: There’s more than one type of hallucination.

Contrary to popular belief, hallucinations aren’t just incorrect answers: They can also be classified as correct answers to the wrong questions. And these types of hallucinations are actually more common and more difficult to control.

For example, imagine a company’s AI Assistant is asked to help troubleshoot a problem that a customer is having with their TV. The Assistant could give the customer correct troubleshooting instructions, but for the wrong television model. In this case, GenAI isn’t wrong; it just didn’t fully understand the context of the question.

The Lie: There’s no way to prevent your AI Assistant from hallucinating.

Many GenAI “bot” vendors attempt to fine-tune an LLM, connect clients’ knowledge bases, and then trust it to generate responses to their customers’ questions. This approach will always result in hallucinations. A common workaround is to pre-program “canned” responses to specific questions. However, this leads to unhelpful and unnatural-sounding answers even to basic questions, which then wind up being escalated to live agents.

In contrast, true AI Assistants powered by the latest Conversational CX Platforms leverage LLMs as a tool to understand and generate language — but there’s a lot more going on under the hood.

First of all, preventing hallucinations is not just a technical task. It requires a layer of business logic that controls the flow of the conversation by providing a framework for how the Assistant should respond to users’ questions.

This framework guides a user down a specific path that enables the Assistant to gather the information the LLM needs to give the right answer to the right question. This is very similar to how you would train a human agent to ask a specific series of questions before diagnosing an issue and offering a solution. Meanwhile, in addition to understanding the intent of the customer’s question, the LLM can be used to extract additional information from it.
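To make the idea of a guided path a little more concrete, here is a minimal sketch in Python. It assumes a hypothetical TV-troubleshooting flow; the `REQUIRED_SLOTS` table, the `Conversation` class, and the `next_step` function are all invented for illustration and are not any vendor's actual API. The business logic simply keeps asking follow-up questions until it has everything it needs.

```python
from dataclasses import dataclass, field

# Hypothetical details a TV-troubleshooting flow might require before answering.
REQUIRED_SLOTS = {
    "tv_model": "Which TV model do you have?",
    "symptom": "What problem are you seeing?",
}


@dataclass
class Conversation:
    # Details collected from the customer so far, keyed by slot name.
    slots: dict = field(default_factory=dict)


def next_step(convo):
    """Return the next follow-up question, or None once every required slot is filled."""
    for name, question in REQUIRED_SLOTS.items():
        if not convo.slots.get(name):
            return question
    return None
```

In this sketch, the Assistant would only move on to answering once `next_step` returns `None`, much like a human agent working through a checklist before diagnosing the issue.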

These filters, referred to as “pre-generation checks,” determine attributes such as whether the question came from an existing customer or a prospect, which of the company’s products or services the question is about, and more. The checks happen in the background in mere seconds and can be used to select the right information to answer the question. Only once the Assistant understands the context of the customer’s question and knows that it’s within the scope of what it’s allowed to talk about does it ask the LLM to craft a response.
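Here is one way a pre-generation check could look, as a rough Python sketch. It assumes the attributes have already been extracted from the question; the `QuestionContext` class, the `ALLOWED_PRODUCTS` set, and the three outcome labels are hypothetical names chosen for this example, not part of any real platform.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of products this Assistant is allowed to discuss.
ALLOWED_PRODUCTS = {"tv", "soundbar"}


@dataclass
class QuestionContext:
    is_existing_customer: bool
    product: Optional[str]  # None when no product could be identified


def pre_generation_check(ctx):
    """Decide whether the question may be handed to the LLM at all."""
    if ctx.product is None:
        return "clarify"  # ask the user which product they mean
    if ctx.product not in ALLOWED_PRODUCTS:
        return "out_of_scope"  # decline politely or hand off to an agent
    return "generate"  # safe to ask the LLM for a response
```

The point of the sketch is the ordering: the scope decision is made by plain business logic before the LLM is ever asked to write anything.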

But the checks and balances don’t end there: The LLM is only allowed to generate responses using information from specific, trusted sources that have been pre-approved, and not from the dataset it was trained on.

In other words, humans are responsible for providing the LLM with a source of truth that it must “ground” its response in. In technical terms, this is called Retrieval-Augmented Generation, or RAG, and if you want to get nerdy, you can read all about it here!
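For the curious, grounding can be sketched in a few lines of Python. This is an illustration under loud assumptions: the `APPROVED_SOURCES` snippets are made up, and a naive keyword-overlap lookup stands in for a real retrieval system. The function names `retrieve` and `build_grounded_prompt` are hypothetical as well.

```python
# Hypothetical pre-approved snippets; a real deployment would use a curated knowledge base.
APPROVED_SOURCES = {
    "x100-no-sound": "X100: if there is no sound, check that the TV is not muted, then restart it.",
    "x200-no-sound": "X200: if there is no sound, reseat the HDMI cable, then restart the TV.",
}


def retrieve(question, model):
    """Naive stand-in for retrieval: snippets for the given model that share a word with the question."""
    words = set(question.lower().split())
    return [
        text
        for key, text in APPROVED_SOURCES.items()
        if key.startswith(model.lower()) and words & set(text.lower().split())
    ]


def build_grounded_prompt(question, model):
    """Return a prompt restricted to approved snippets, or None if nothing was retrieved."""
    snippets = retrieve(question, model)
    if not snippets:
        return None  # nothing trusted to ground on, so do not call the LLM
    sources = "\n".join(f"- {s}" for s in snippets)
    return (
        "Answer using ONLY the sources below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )
```

Notice that filtering by TV model before building the prompt also guards against the "correct answer to the wrong question" problem described earlier: the LLM never sees instructions for a different model.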

Last but not least, once a response has been crafted, a series of “post-generation checks” happens in the background before returning it to the user. You can check out the end-to-end process in the diagram below:

Give Hallucination Concerns the Heave-Ho

To sum it up: Yes, hallucinations happen. In fact, there’s more than one type of hallucination that CX leaders should be aware of.

However, now that you understand the reality of AI hallucination, you know that it’s totally preventable. All you need are the proper checks, balances, and guardrails in place, from both a technical and a business logic standpoint.

Now that you’ve had your biggest misconceptions about AI hallucination debunked, keep an eye out for the next blog in our series, all about GenAI data leaks. Or, learn the truth about all three of CX leaders’ biggest GenAI misconceptions now when you download our guide, Two Truths and a Lie: Breaking Down the Major GenAI Misconceptions Holding CX Leaders Back.